OIN booklet

Latest revision as of 14:59, 28 August 2025

Objects of interest and necessity

With the notion of an ‘object of interest’, a guided tour of a place, a museum or a collection likely comes to mind. One may easily read this compilation of texts as a catalogue for such a tour of a social and technical system, where we stop and wonder about the different objects that, in one way or another, take part in the generation of images by artificial intelligence (AI).

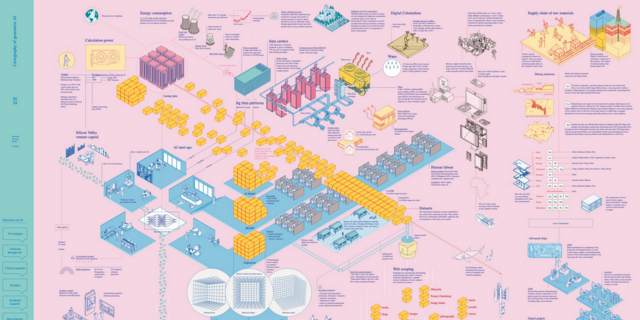

'A guided tour' perhaps also limits the understanding of what objects of interest are. In science, for instance, an object of interest sometimes refers to what one might call the potentiality of an object. Take for instance, the famous Kepler telescope whose mission was to search the Milky Way for exoplanets (planets outside our own solar system). Among all the stars, there are candidates for this, or so-called Kepler Objects of Interest (KOI) that are documented, indexed and catalogued. In similar ways, this catalogue is the outcome of an investigative process where we – by trying out different software, reading documentation and research, looking into communities of practice that experiment with AI image creation, and more – have sought to understand the things that make generative AI images possible; that is, the underlying dependencies on relations between communities, companies, models, technical units, and more in AI image creation. Within this system there is not just a functional apparatus, but also an ‘imaginary’; that is, there are underlying expectations and norms (for planetary existence, for instance) that are met in specific objects.

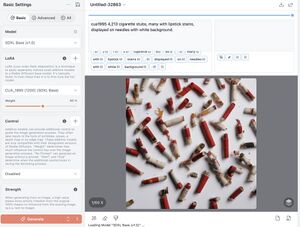

The catalogue, however, is strictly speaking not scientific, and should not be taken too seriously as such. It is not as if there is a set of defined parameters by which we have prioritized some objects over others. One can also think of an object of interest in a different way; as something that is not just the manifestation of an imaginary, but also what produces it. Take for instance Orhan Pamuk’s famous Museum of Innocence. It is a book by the Nobel Prize-winning author, but also an entry ticket to a really existing museum in Istanbul, where one can find, amongst other items, a showcase of 4,213 cigarette butts smoked by Füsun, the object of the love of Kemal, the main character.

During my eight years of going to the Keskins’ for supper, I was able to squirrel away 4,213 of Füsun’s cigarette butts. Each one of these had touched her rosy lips and entered her mouth, some even touching her tongue and becoming moist, as I would discover when I put my finger on the filter soon after she had stubbed the cigarette out. The stubs, reddened by her lovely lipstick, bore the unique impress of her lips at some moment whose memory was laden with anguish or bliss, making these stubs artifacts of a singular intimacy.[1]

The collected objects are the things that make the story, and also what makes the story real. Objects contain an associative power that literally creates memories (the young Marcel in Proust’s In Search of Lost Time (À la recherche du temps perdu), who eats a madeleine cake to create a novel, is perhaps the most famous literary example of this). Therefore, this catalogue is not just a collection of the objects that make generative AI images, but also an exploration of an imaginary of AI image creation through the collection and exhibition of objects – and in particular, an imaginary of ‘autonomy’.

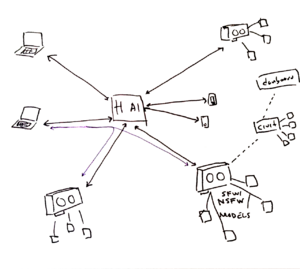

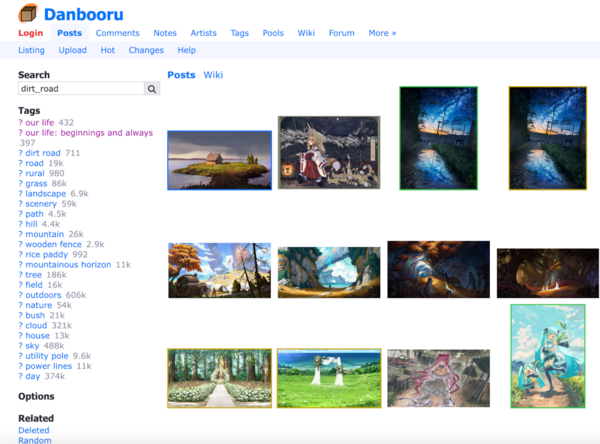

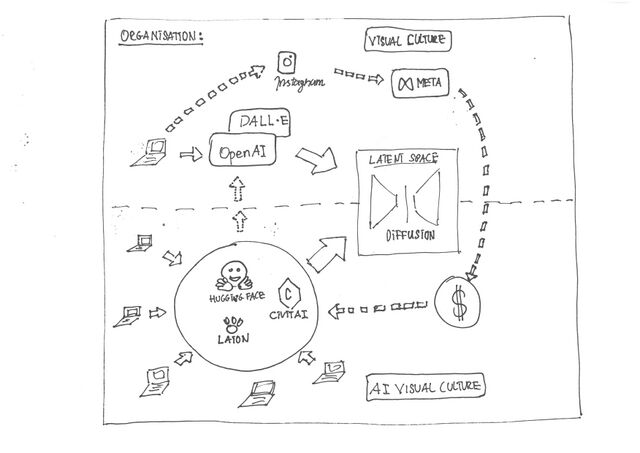

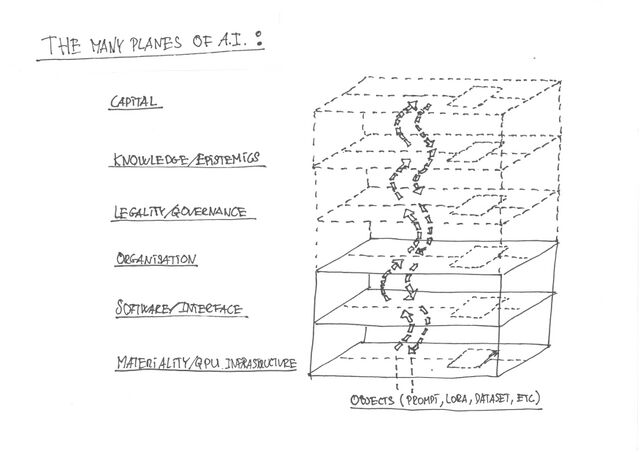

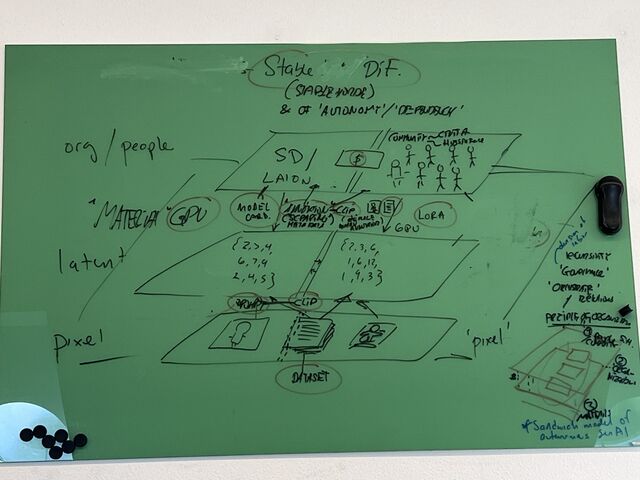

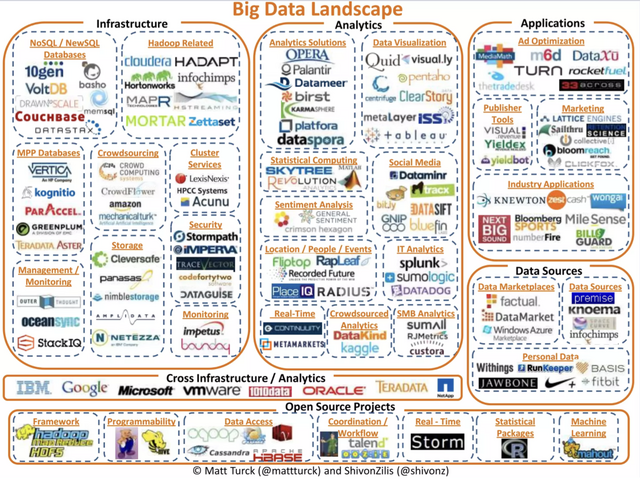

Most people’s experiences with generative AI image creation come from flagship platforms like OpenAI’s DALL-E, Adobe Firefly, Microsoft Image Creator, Midjourney, or other proprietary services. There is a whole ecology of services that are distinct yet often based on the same underlying models or techniques of so-called ‘diffusion’. Nevertheless, there are also communities who for different reasons seek some kind of independence and autonomy from the mainstream platforms. It may be that they are unsatisfied with the stylistic outputs; say, interested in not just manga-style images, but a particular manga style (the English-language image board or gallery Danbooru is an example of this, where much content is erotic). Others may have issues with the platform model itself, and how it compromises ideals of free/‘libre’ and open-source software (aka F/LOSS). They want image generation to be more broadly available, free of cost, and less demanding of processing power, or want to open it up to new technical ideas and experimentation. For instance, OpenAI is not as open as the name indicates. It has a complicated history where commercialisation, partnerships and dependencies on other tech corporations (like Microsoft) have become increasingly central to its operations. For these reasons, autonomy from commercial and proprietary platforms of AI often implies visions of alternative infrastructures - more 'peer-to-peer' and decentralised than the platforms' 'client-server' relations between software and users. The objects presented in this catalogue all refer to autonomous practices of AI image generation, with different versions of what autonomy means and with various degrees of dependency; autonomy in these pages is not simply understood as the possibility of a self-imposed law but more broadly as an attempt to choose one's own attachments (or 'heteronomy'[2]).
That is, rather than explaining how generative AI works (as many researchers and critics of AI call for[3]), our interest lies in opening up an understanding of what it takes to make AI image generation work, and also to make it work separately from mainstream platforms and capital interests.

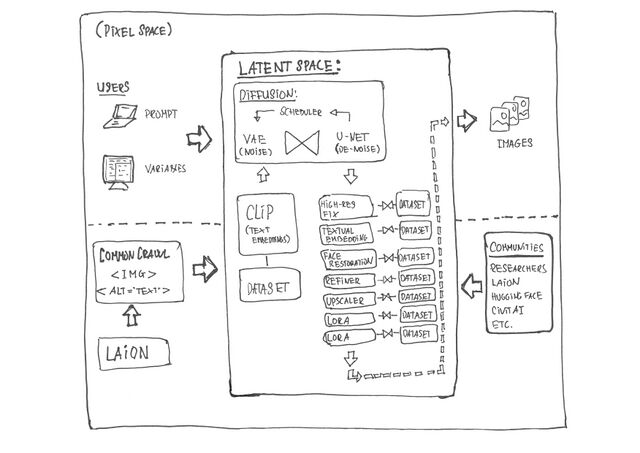

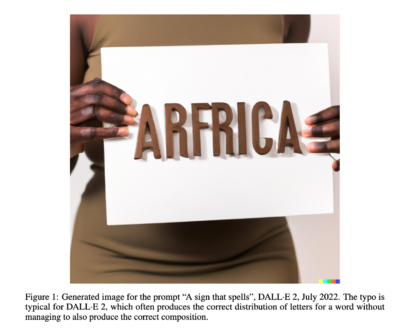

Our outset is ‘Stable Diffusion’, a generative AI model that produces images from text prompts. Characteristically, the company behind it (Stability AI) uses the same ‘diffusion’ technology as many of the commercial services, but with heavily reduced labour costs in producing the underlying data set and the descriptions of the images necessary to train the model. Indeed, this form of annotation is usually carried out by precarious and cheap 'data workers' in the 'gig economy' who describe images according to set categories. Although the labour is cheap, the annotation of images as a whole is an enormous and expensive task. However, explained a bit technically, Christoph Schuhmann, an independent high school teacher, found a way to ‘scrape’ the internet for links to images with alt text (text that describes images for people with disabilities) and filter out the nonsensical text (using CLIP), thereby providing a fully annotated data set for only 5,000 USD (we have also included a text on both our own and others' maps of this complex ecology in the catalogue; see also LAION). Against this background, Stable Diffusion has been released under a community license that allows for research, non-commercial, and also limited commercial use.[4] That is, users can freely install and use Stable Diffusion under conditions similar to much other F/LOSS software.
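
The filtering of scraped alt text can be pictured with a small sketch. It is not LAION's actual pipeline: the embeddings below are hand-made toy vectors standing in for what CLIP would compute, and the names `Pair`, `cosine_similarity` and `filter_pairs`, the example URLs and the threshold value are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pair:
    """A scraped link to an image together with its alt text."""
    url: str
    alt_text: str
    image_embedding: list  # toy stand-in for a CLIP image embedding
    text_embedding: list   # toy stand-in for a CLIP text embedding


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def filter_pairs(pairs, threshold=0.3):
    """Keep only pairs whose text embedding lies close to the image embedding."""
    return [
        p for p in pairs
        if cosine_similarity(p.image_embedding, p.text_embedding) >= threshold
    ]


scraped = [
    # Alt text that describes the image: the two toy vectors point the same way.
    Pair("https://example.org/cat.jpg", "a cat on a sofa",
         [0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),
    # Nonsensical alt text: the two toy vectors point in different directions.
    Pair("https://example.org/img_0042.jpg", "IMG_0042",
         [0.1, 0.9, 0.2], [0.9, -0.3, 0.1]),
]

kept = filter_pairs(scraped)
print([p.alt_text for p in kept])
```

In this toy run only the first pair survives. The point is that no human annotator is involved: the 'description' is whatever alt text was already on the web, and a pre-trained model decides whether it is kept.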

Subsequently, there is a range of other F/LOSS software that provides user interfaces to Stable Diffusion, and a lively visual culture that uses and also builds on Stable Diffusion models. This includes, for instance, CivitAI, which allows users to share and download AI models and, for its members, both to use its servers in exchange for currencies (virtual tokens called buzz) and to show and sell their AI-generated images. DeviantArt is another platform that functions in similar ways. Or Hugging Face, which functions as a repository of user-created AI models that can be used in other F/LOSS applications (such as Draw Things or other interfaces to image generation and models) to generate images or ‘tweak’ the models, using so-called LoRAs. Some of these sites are heavily funded by venture capital. They are often at once communities of practice that are, in one way or another, interested in autonomy, and corporations geared towards maximizing value extraction. However, one also finds Stable Horde, which in a peer-to-peer fashion allows its community to access each other’s machines for processing power and adopts an articulated approach to autonomy – contrary to conventional AI platforms, where one depends on a corporate service.

In other words, what autonomy is, and what it means to separate from capital interests is by no means uniform – the range of agents, dependencies, flows of capital, and so on, can be difficult to comprehend and is in constant flux. This, we have tried to capture in our description of the objects, guided by a set of questions that we address directly or indirectly in the different entries:

- What is the network that sustains this object?

- How does it evolve through time?

- How does it create value? Or decrease / affect value?

- What is its place/role in techno-cultural strategies?

- How does it relate to autonomous infrastructure?

The murkiness of autonomous AI image generation implicates our interests in the objects, too. Therefore, the objects are not just of 'interest', but also of ‘necessity’. The Cuban artist and designer Ernesto Oroza speaks of “objects that are at the same time an understanding of a need and the answer to it.”[5] Oroza speaks of, for instance, the Cuban phenomenon S-net, short for Street network, a form of wireless network that is community driven, but occurs in a situation where people want to play online games or access the internet for other reasons, but where internet access is limited and regulated by the government. S-net is autonomous and independent, and yet, in order to exist, it also accepts the official demands of, for instance, not discussing politics online.[6]

If one asks what qualifies as autonomy in AI image generation, and the intent is to catalogue what autonomous AI image generation is and consists of, we answer by showcasing what it looks like, by necessity – because it always exists in relation to a fluctuating set of correspondences, conditions and dependencies. In much the same way, the catalogue of ‘Kepler Objects of Interest’ might reflect the potential of objects to be something, but what something is always also looks like something; like love that might look like 4,213 cigarette butts exhibited in the Museum of Innocence. In this sense, the catalogue is also always in flux, and with its unfinished nature, we also invite others to continue editing it.

Further reading and writing (web/wiki-to-print)

The texts in this catalogue have all been written on a self-hosted wiki. From this, we have collated a PDF for print, using so-called 'web-to-print' techniques.

Some entries are kept short, some are longer and more elaborate. The wiki and this catalogue will change over time, reflecting how the nature of 'objects of interest/necessity' in autonomous generative AI image creation is in flux, subject to both abstractions and negotiations by us as well as others. This 'wiki-style' of collaborative knowledge production is intrinsic to our collaboration in the project SHAPE - Knowledge Servers, funded by Aarhus University,[7] and also to the events and workshops around autonomous AI image generation that we have organised with The Royal Danish Library, and the community Code&Share in Aarhus, as part of the project.

On the wiki, we have included a 'Guestbook' where we invite visitors to leave comments and reflections.

https://ctp.cc.au.dk/w/index.php/Category:Objects_of_Interest_and_Necessity

––––– Christian Ulrik Andersen, Nicolas Malevé, Pablo Velasco

[1] Orhan Pamuk, The Museum of Innocence, trans. Maureen Freely (New York: Alfred A. Knopf, 2009), 501.

[2] Lund, Jacob. “Autonomy.” Artistic Practice under Contemporary Conditions, December 3, 2024. https://contemporaryconditions.art/text/autonomy.

[3] Feiyu Xu et al., “Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges,” in Natural Language Processing and Chinese Computing, ed. Feiyu Xu et al., Lecture Notes in Computer Science, vol. 11839 (Cham: Springer, 2019), 563–574, https://doi.org/10.1007/978-3-030-32236-6_51.

[4] Stability AI, “Stability AI License,” accessed August 11, 2025, https://stability.ai/license.

[5] Ernesto Oroza, “Technological Disobedience in Cuba,” Walker Art Center Magazine, accessed August 11, 2025, https://walkerart.org/magazine/ernesto-oroza-technological-disobedience-cuba/.

[6] Köhn, Steffen. Island in the Net: Digital Culture in Post-Castro Cuba. Princeton University Press, 2026. https://press.princeton.edu/books/paperback/9780691273143/island-in-the-net.

[7] SHAPE, “Knowledge Servers,” accessed August 11, 2025, https://shape.au.dk/en/themes/knowledge/knowledge-servers.

Guestbook

CivitAI

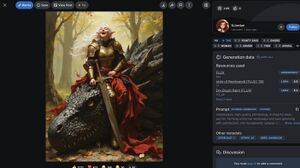

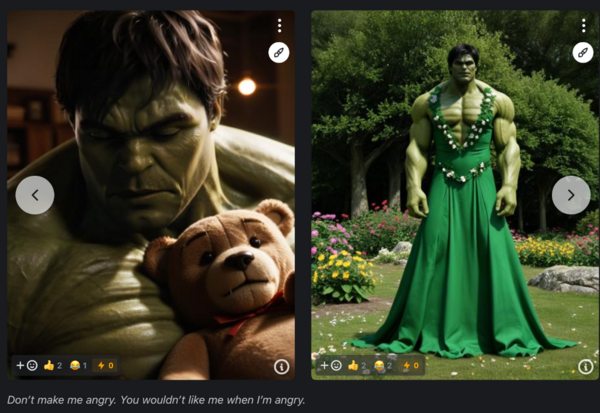

Like Hugging Face, CivitAI is a collaborative hub for AI development. But unlike Hugging Face, which supports a large range of applications, CivitAI is dedicated to image generation only. The platform attracts a huge number of enthusiasts and amateurs. In contrast to the rather serious interface and atmosphere of Hugging Face (which looks more like GitHub), the platform resembles art platforms such as DeviantArt where users display their portfolios. With its labyrinth of image galleries, it celebrates the capabilities of generative AI to clone every style and cross every genre from cartoon to oil painting and fashion photography to extreme pornography, as if the users were on a mission to exhaust pixel space.

But the platform attracts more than image makers. Many Civitans also upload custom-made models, LoRAs, VAEs, and highly detailed tutorials. A large population of anime fans is responsible for an endless list of models that specialize in a given manga character, as well as many versions of the infamous PONY models, which started as a project to generate better images of the characters of My Little Pony and have evolved into a complex constellation of models able to produce reliable body poses.[1] This makes CivitAI a bridge between fans and computer geeks (who are sometimes both), who enjoy the platform's very lax moderation, which unfortunately does little to prevent various forms of abuse.[2] These models are made available on the platform's own image generator as well as for download. The largest share of models is available for free, therefore finding its way onto desktops for private use and into peer-to-peer networks such as Stable Horde for communal production.

CivitAI has a large infrastructure at its disposal. As users train models, LoRAs and VAEs on the platform and generate impressive amounts of images, CivitAI needs capital investment. As a centralized service (in contrast to Stable Horde), it supports its operations through various commercial offers. Additionally, it raises eye-popping venture capital investment. In 2023, the company raised $5.1 million backed by the firm Andreessen Horowitz (a16z).[3] All in all, the company exemplifies the tensions and paradoxes of an autonomous AI and its attachments. It does indeed serve the bottom-up production of models and add-ons as well as the 'democratization' of AI technology in a way that goes beyond mere consumer usage. But it does so by converting the labour of love of a large population of enthusiasts into capital. On the one hand, it makes possible a relative delinking from the dominant players of the market (such as OpenAI) and nourishes an ecosystem of small actors from amateurs to hackers. On the other, it does so on the condition of capital accumulation and complicity with the dark matter of American finance.

[1] PurpleSmartAI. “Pony Diffusion V6 XL.” CivitAI, March 6, 2025. https://civitai.com/models/257749/pony-diffusion-v6-xl.

[2] Wei, Yiluo, Yiming Zhu, Pan Hui, and Gareth Tyson. “Exploring the Use of Abusive Generative AI Models on Civitai.” 2024. https://arxiv.org/abs/2407.12876.

[3] Perez, Sarah. “Andreessen Horowitz Backs Civitai, a Generative AI Content Marketplace with Millions of Users.” TechCrunch, November 14, 2023. https://techcrunch.com/2023/11/14/andreessen-horowitz-backs-civitai-a-generative-ai-content-marketplace-with-millions-of-users/.

Clip

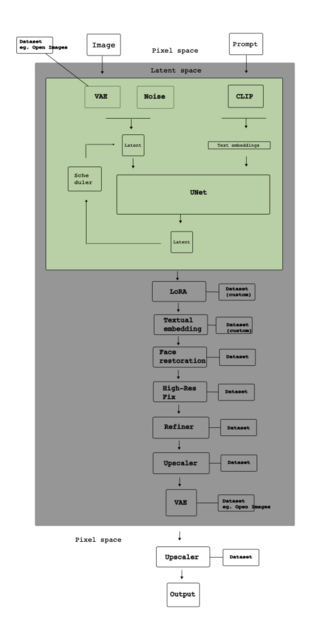

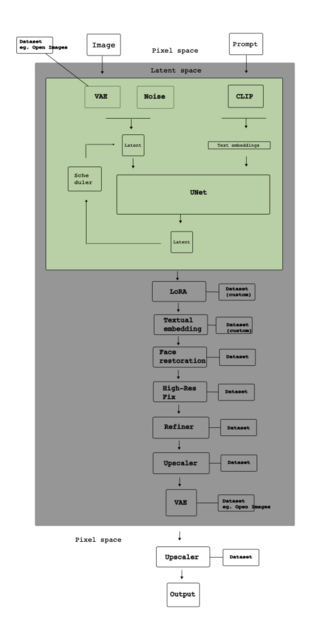

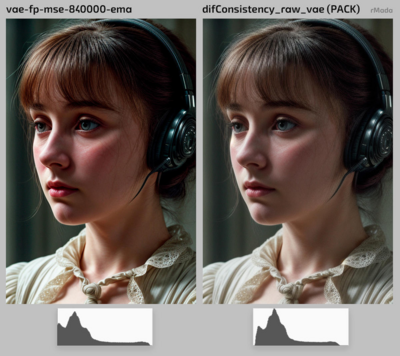

Like the variational autoencoder (VAE), the vision model CLIP (contrastive language-image pre-training) is largely unknown to the general public. Like the VAE, it is used in the image generation pipeline as a component that encodes input into embeddings, statistical representations that can be operated upon in the latent space.[1]

The presence of CLIP in the pipeline illustrates the complexity of the relations between the various ecosystems of image generation. CLIP was first released in 2021 by OpenAI under an open source license,[2] just before the company changed its politics of openness. Subsequent products such as DALL-E are governed by a proprietary license.[3] CLIP is in its own right a foundational model and serves multiple purposes such as image retrieval and classification. Its use as a secondary component in the image generation pipeline shows the composite nature of these architectures where existing elements are borrowed from different sources and repurposed according to needs. If technically, CLIP bridges prompts and the latent space, politically it travels between proprietary and open source ecosystems.

Comparing CLIP to the VAE also shows how elements that perform similar technical functions allow for strikingly different forms of social appropriation. Amateurs train and retrain VAEs to improve image realism, whereas CLIP, which has been trained on four hundred million image-text pairs,[4] cannot be retrained without incurring exorbitant costs. The presence of CLIP in the pipeline is therefore due to its open licensing; yet the sheer cost of its production makes it a black box even for advanced users, and puts its inspection and customization out of reach.

[1] Offert, Fabian. “On the Concept of History (in Foundation Models).” IMAGE. Zeitschrift für interdisziplinäre Bildwissenschaft 19, no. 1 (2023): 121–134. http://dx.doi.org/10.25969/mediarep/22316.

[2] Openai. “GitHub - Openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the Most Relevant Text Snippet given an Image.” GitHub, n.d. Accessed August 22, 2025. https://github.com/openai/CLIP.

[3] Xiang, Chloe. “OpenAI Is Now Everything It Promised Not to Be: Corporate, Closed-Source, and For-Profit.” Vice, February 28, 2023. https://www.vice.com/en/article/openai-is-now-everything-it-promised-not-to-be-corporate-closed-source-and-for-profit/.

[4] Nicolas Malevé, Katrina Sluis; The Photographic Pipeline of Machine Vision; or, Machine Vision's Latent Photographic Theory. Critical AI 1 October 2023; 1 (1-2): No Pagination Specified. doi: https://doi.org/10.1215/2834703X-10734066

Currencies

₡ ₢ ₣ ₤ ₥ ₦ ₧ ₨ ₩ ₪ ₫ € ₭ ₮ ₯ ₰ ₱ ₲ ₳ ₴ ₵ ₶ ₷ ₸ ₹ ₺ ₻ ₼ ₽ ₾ ₿

In some countries, zoos' ethical codes of conduct do not allow them to sell animals to, or buy animals from, other zoos. Arguably, animals are an object of necessity in this context; however, zoos are institutions that avoid poachers and animal hunters, who historically were the main sources of wild animals. Zoos and aquariums around the world do not think, in practice, of animals as commodities (i.e., objects that can be bought with money).[1] Animals are not for sale, yet zoos need to acquire new animals. This apparent conundrum is usually solved through barter (exchanging one 'product' for another, instead of using money). Again, however, this is a burdensome system, as it is not always the case that an elephant is readily available to be traded for 20 jellyfish. The association of zoos and aquariums therefore works through a system in which a zoo donates an animal and gets nothing in return. The animals are sent to one of the zoos with space, need and facilities. But while there is no money in this transaction, the donors gain recognition within the zoo network, thus enhancing their chances of being the recipients of future donations.

Currencies take odd shapes. The zoo example above is one of the many tales of the 'semantic volatility' associated with currencies. While we use fiat money (euros, crowns, etc.) for most of our daily lives, social structures work on a sometimes invisible and highly complex mesh of systems of value and exchange. In the example above, in reality, giving up an elephant does not equal zero gains, but what is gained is less quantifiable than a sum of money. Within our objects of interest and necessity, these unusual, implicit, and explicit exchanges take the form of time, expertise, platformisation, and graphical processing power.

What is the network that sustains this object?

Most currencies are based on a network of interest that agrees to assign value to an object or system. In the case of zoos, the network consists of the zoos and aquariums that have agreed to assign value to donations and to ignore metrics or quantification (e.g., an elephant is neither an amount in euros nor equivalent to 50 lemurs). Plenty of alternative currencies rely on their own agreed system and work based on trust (either in the system or in the social structure).

Ad hoc currencies are common on certain digital platforms, meaning that they can be used only within a certain ecosystem. For example, in-game currencies like 'Gold' in the popular mobile game Clash of Clans can be earned and used only in that game. They allow for in-app or on-site monetisation, as they are commonly bought using legal tender (e.g. US dollars, Danish crowns, etc.). Plenty of digital systems develop their own in-app currencies, either tied or untied to legal tender. In these cases, the platforms usually dictate the rules of exchange, and the users (consumers and/or producers of content) generate and share the currencies among themselves or through other objects of value.

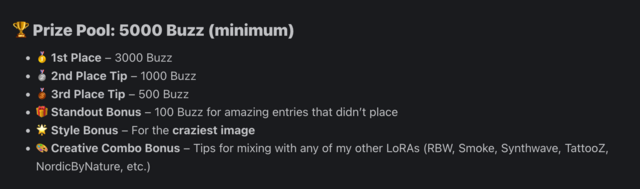

On an organisational plane, within AI-oriented platforms, currencies tie together the user, the producer, and the platform. "Buzz", the currency for CivitAI (one of the largest marketplaces for generative AI content), acts as a reward for the user's interaction with content, as a tip for content creators, and even as a 'bounty' for specific requests. The currency is controlled by the platform: the rules of production and the legality of exchange are defined by CivitAI. As such, this currency can be purchased using fiat money directly on the platform, but it can also be earned or awarded in different forms: reactions to content provide some buzz; if one's model is added to a collection, buzz is also generated for the owner of the model; the currency can also be freely tipped; some specific bounties or rewards can offer buzz for creating a very specific model or LoRA (for example, to remove watermarks[2]); or one can even beg for currency.

The options listed above offer a peek into a microcosm of social arrangements on a very specific platform of AI image generation. Buzz allows any CivitAI user to generate images. That is, this currency is exchanged for computational power, expertise, or a combination of both. Legal tender transforms into a community value, where GPU ownership and modelling knowledge and skills become highly valuable.

How does it create value? Or decrease / affect value?

Protocol as currency

During the late 2000s, the birth of cryptocurrencies sparked the imagination about how money could be different in the 21st century. The creation of Bitcoin, the first cryptocurrency, opened the door to a type of currency that was, arguably, defined more by its system than by its users.[3] Instead of relying on a central, trusted institution, like a bank or a government, Bitcoin offloaded trust and accountability to a mathematically governed distributed system. Technically, the system would guarantee accurate transactions between any parties, without any central management. Some rules were attached to its code, for example, programmed scarcity, but no traditional financial organisation was involved in the creation of the currency's rules.

The importance of code and protocol in this new type of digital currency did more than bring software to the main stage. Due to the high requirements of computational power in the blockchain design (the technology behind most cryptocurrencies), crypto miners (the computers that generate new coins) started requiring GPUs to be profitable. The equation was simple: the more computing power, the greater the chance to 'find' a coin (i.e., to solve a mathematical puzzle and generate a valid new block on the chain). While CPUs were able to process the required computation, the architecture of GPUs made this process faster. The crypto industry has thus built massive mining facilities with thousands of GPUs to profit from generating new coins, also producing a scarcity of this type of hardware. The unexpected relationship between digital currencies and the need for fast processing power suddenly made GPUs an important actor in the currencies landscape.
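
The 'mathematical puzzle' mentioned above can be illustrated with a toy version of proof of work. This is a simplified sketch, not Bitcoin's actual protocol (which, among other differences, hashes a structured block header twice and compares the result against a numeric target); the function name `mine`, the block content and the difficulty value are assumptions for illustration.

```python
import hashlib


def mine(block_data, difficulty):
    """Try nonces one by one until the SHA-256 hash of the block data
    plus the nonce starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


# Each extra zero multiplies the expected number of attempts by 16.
nonce, digest = mine("toy block", difficulty=4)
print(nonce, digest)
```

There is no shortcut to finding a valid nonce other than trying candidates, which is why the number of hashes a machine can compute per second translates directly into the chance of 'finding' a coin.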

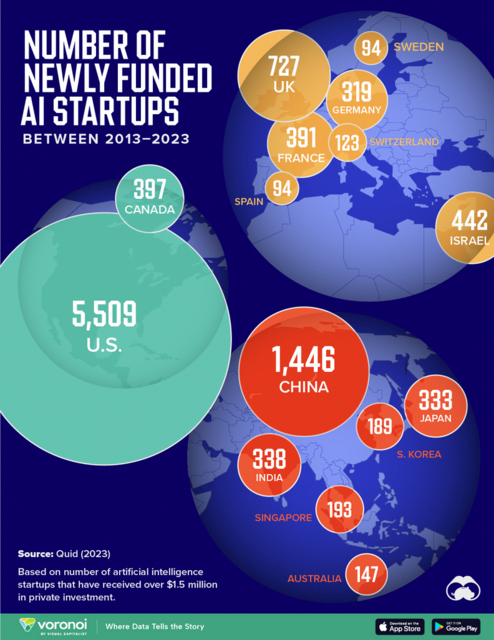

GPU as currency

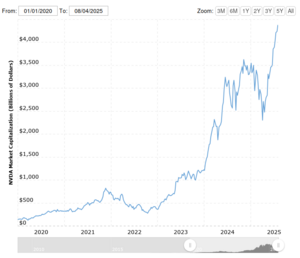

With the expansion of LLMs and AI-oriented platforms, scarcity has moved once again towards hardware capable of training, operating, and fine-tuning LLMs. The boom of LLMs in the last five years started a race to develop and bring to market the most advanced models. Tech giants like Microsoft, OpenAI, and Meta compete by offering state-of-the-art models and integrating them into their software. This has made the GPU a holy grail of hardware, and has had a strong effect on manufacturers: Nvidia, the company that produces the most popular GPUs for training and gaming, was valued at US$3 trillion in 2024 and surpassed the 4 trillion mark in 2025.[4] A whole economy is based on the production of hardware for text and image generation.

In the CivitAI example, Buzz is also closely tied to access to a GPU. Much as with cryptocurrencies, ownership of this type of hardware allows computing power to be exchanged for currency. However, the economies of LLMs are not restricted to big tech and platform-driven lives. At a different place within this spectrum, the hordeAI network (see the GPU and Stable Horde entries) acts as a barter system of sorts, with its own currency. This currency, named 'Kudos', can be earned by sharing one's GPU in the network, that is, by lending a graphics device to produce images for someone else within the network. Kudos can then be spent on using others' GPUs (perhaps better ones, with access to more demanding diffusion models) through any interface connected to the network, and/or on gaining priority in the generation queue. Kudos, in this sense, value reciprocity and the imaginary of infrastructural autonomy outside of big tech's LLM offerings.
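
The logic of earning, spending and queue priority described above can be sketched as a toy ledger. This is a hypothetical model for illustration only: it does not reproduce the horde's actual software, API or kudos rules, and the class `ToyHorde`, its methods, the user names and the costs are all invented.

```python
from collections import defaultdict


class ToyHorde:
    """Hypothetical kudos ledger with a priority queue of image requests."""

    def __init__(self):
        self.kudos = defaultdict(int)  # balance per participant
        self.queue = []                # pending (requester, prompt) pairs

    def request(self, requester, prompt):
        """Add a generation request to the queue."""
        self.queue.append((requester, prompt))

    def next_request(self):
        """Pop the request whose owner holds the most kudos
        (on a tie, the oldest request wins)."""
        best = max(self.queue, key=lambda item: self.kudos[item[0]])
        self.queue.remove(best)
        return best

    def fulfil(self, worker, cost):
        """A worker lends its GPU: it earns kudos, the requester spends them."""
        requester, prompt = self.next_request()
        self.kudos[worker] += cost
        self.kudos[requester] -= cost
        return f"{worker} generated '{prompt}' for {requester}"


horde = ToyHorde()
horde.kudos["ana"] = 10  # ana has shared her GPU before
horde.request("ben", "a lighthouse at dusk")
horde.request("ana", "a fox in the snow")

# ana asked second but is served first, because she holds more kudos.
print(horde.fulfil(worker="cy", cost=5))
print(dict(horde.kudos))
```

Unlike Buzz, nothing in this toy ledger can be bought with legal tender: the only way to gain priority is to have lent processing power to someone else.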

We share our GPU with the hordeAI, allowing other users to request our processing power. We are thus not only earning and spending kudos but, most importantly, participating in economies of sharing. Even though the hordeAI network is not a tight community (it is, factually, a network of individual GPU users), it allows us to think about currency in terms of materiality and reciprocity, and offers an insight into the possibilities of autonomy in an LLM-saturated context.

[1] NPR, “Episode 566: The Zoo Economy,” Planet Money, September 5, 2014, https://www.npr.org/sections/money/2014/09/05/346105063/episode-566-the-zoo-economy.

[2] Civitai Community, “ADetailer Model to Remove Watermarks from SDXL Models,” Civitai, accessed August 12, 2025, https://civitai.com/bounties/1168/adetailer-model-to-remove-watermarks-from-sdxl-models.

[3] Wikipedia, s.v. “Bitcoin,” last modified August 12, 2025, https://en.wikipedia.org/wiki/Bitcoin.

[4] Macrotrends, “NVIDIA Market Cap 2010–2025 | NVDA,” Macrotrends, accessed August 12, 2025, https://www.macrotrends.net/stocks/charts/NVDA/nvidia/market-cap.

Dataset

In the context of AI image generation, a dataset is a collection of image-text pairs (and sometimes other attributes such as provenance or an aesthetic score) used to train AI models. It is an object of necessity par excellence. Without a dataset, no model could see the light of day. Iconic datasets include the LAION aesthetic dataset, Artemis, ImageNet, or Common Objects in Context (COCO). These collections of images, mostly sourced from the internet, reach dizzying scales. ImageNet became famous for its 14 million images in the first decade of the century.[1] Today LAION-5B consists of 5.85 billion CLIP-filtered image-text pairs.[2]

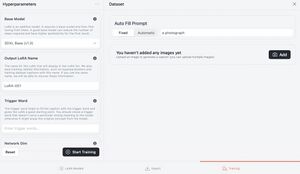

If large models such as Stable Diffusion require large scale datasets, various components such as LoRAs, VAEs, refiners, or upscalers can be trained with a much smaller amount of data. In practice, this means that for each of these components, a custom dataset is created. As each of these datasets reflects a particular aspect of visual culture, the components trained on them function as conduits for imaginaries and world views. Image generators are not simply produced through mathematics and statistics, they are programmed by images. Programming by images is a specific curatorial practice that involves a wide range of skills including a deep knowledge of the relevant visual domain, the ability to find the best exemplars, many practical skills such as scraping, image filtering, cleaning and cropping, and mastering the art of a coherent classification and annotation. In our tour, we discuss two examples of curatorial practices of different scales and purpose: the creation of the LAION dataset and the art of collecting the images that are necessary to "bake the LoRA cake."[3]

Further, behind each dataset there is an organisation - of people, corporate organisations, researchers, or others.[4] Even for individual users, collecting and sharing a dataset often means accepting and cultivating attachments to platforms. For instance, many datasets manually assembled by individuals are made freely available on platforms like Hugging Face, along with the large scale ones published by companies or universities, for others to build LoRAs or in other ways experiment with.

[1] Deng, Jia, Wei Dong, Richard Socher, Li-jia Li, Kai Li, and Li Fei-fei. “Imagenet: A Large-Scale Hierarchical Image Database.” CVPR 1 (2009): 248–55. https://doi.org/10.1109/CVPR.2009.5206848.

[2] Beaumont, Romain. “LAION-5B: A NEW ERA OF OPEN LARGE-SCALE MULTI-MODAL DATASETS.” LAION, March 31, 2022. https://laion.ai/blog/laion-5b/.

[3] knxo, “Making a LoRA Is Like Baking a Cake,” Civitai, published July 10, 2024, accessed August 18, 2025, https://civitai.com/articles/138/making-a-lora-is-like-baking-a-cake.

[4] JinsNotes. “Vision Dataset.” JinsNotes, August 1, 2024. Accessed August 26, 2025. https://jinsnotes.com/2024-08-01-vision-dataset.

Diffusion

Rather than a mere scientific object, diffusion is treated here as a network of meanings that binds together a technique from physics (diffusion), an algorithm for image generation, a model (Stable Diffusion), an operative metaphor relevant to cultural analysis and by extension a company (Stability AI) and its founder with roots in hedge fund investment.

In her text "Diffused Seeing", Joanna Zylinska aptly captures the multivalence of the term:

... the incorporation of ‘diffusion’ as both a technical and rhetorical device into many generative models is indicative of a wider tendency to build permeability and instability not only into those models’ technical infrastructures but also into our wider data and image ecologies. Technically, ‘diffusion’ is a computational process that involves iteratively removing ‘noise’ from an image, a series of mathematical procedures that leads to the production of another image. Rhetorically, ‘diffusion’ operates as a performative metaphor – one that frames and projects our understanding of generative models, their operations and their outputs.[1]

In complement to Zylinska's understanding of diffusion as a term operating at different levels with an emphasis on permeability, we inquire into the dialectical relation that opposes it to stability (as interestingly emphasized in the name Stable Diffusion), where the permeability and instability enclosed in the concept constantly motivate strategies of control, direction, capitalization or democratization that leverage the unstable character of diffusion dynamics.

What is the network that sustains this object?

From physics to AI, the diffusion algorithm

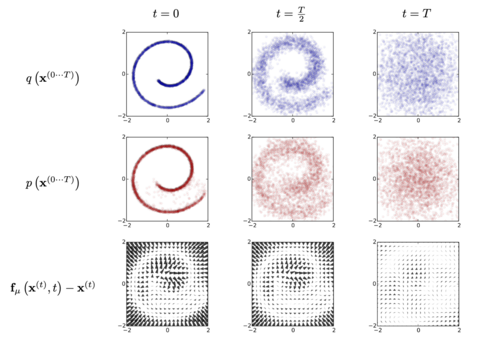

Our first move in this network of meanings is to follow the trajectory of the concept of diffusion from the 19th century laboratory to the computer lab. Although diffusion had been studied since antiquity, Adolf Fick published the first laws of diffusion, based on his experimental work, in 1855. As Wuhan and Princeton AI researchers Pei et al. put it:

In physics, the diffusion phenomenon describes the movement of particles from an area of higher concentration to a lower concentration area till an equilibrium is reached. It represents a stochastic random walk of molecules.[2]
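The movement described in this quotation can be simulated with a toy random walk. The sketch below is only an illustration under invented assumptions (a one-dimensional container of 20 cells, 500 particles, 1000 steps); it is not taken from the cited paper. All particles start in the left half, each one moves left or right at random, and the share found in the left half drifts towards one half as the concentration evens out.

```python
import random

random.seed(1)

CELLS = 20          # assumed length of a one-dimensional container
PARTICLES = 500     # assumed number of particles
STEPS = 1000        # assumed number of time steps

# All particles start in the left half: a region of high concentration.
positions = [random.randrange(CELLS // 2) for _ in range(PARTICLES)]

def share_on_left(pos):
    """Fraction of particles currently located in the left half."""
    return sum(1 for p in pos if p < CELLS // 2) / len(pos)

print("share in left half at start:", share_on_left(positions))

for _ in range(STEPS):
    for i, p in enumerate(positions):
        step = random.choice((-1, 1))                     # random walk: left or right
        positions[i] = min(max(p + step, 0), CELLS - 1)   # walls keep particles inside

print("share in left half at end:  ", share_on_left(positions))
```

No particle is steered anywhere; the evening out is a statistical effect of many independent random steps.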

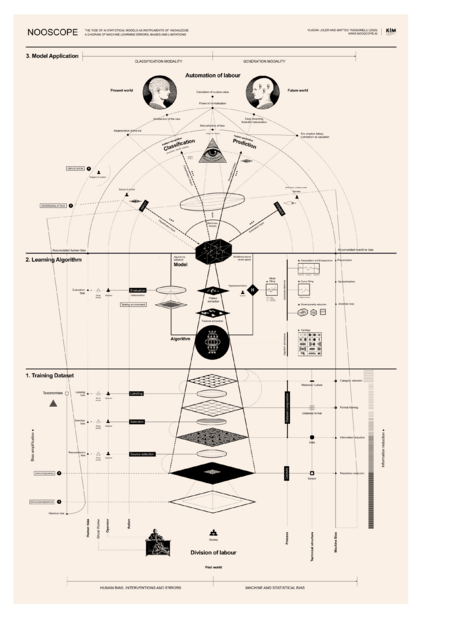

To understand how this idea has been translated into image generation, it is worth looking at the example given by Sohl-Dickstein and colleagues, who authored the seminal paper on diffusion in image generation.[3] The authors propose the following experiment: take an image and gradually apply noise to it until it becomes totally noisy; then train an algorithm to 'learn' all the steps that have been applied to the image and ask it to apply them in reverse to recover the image (see illustration). By introducing some movement in the image, the algorithm detects some tendencies in the noise. It then gradually follows and amplifies these tendencies in order to arrive at a point where an image emerges. When the algorithm is able to recreate the original image from the noisy picture, it is said to be able to de-noise. When the algorithm is trained with billions of examples, it becomes able to generate an image from any arbitrary noisy image. And the most remarkable aspect of this process is that the algorithm is able to generalise from its training data: it is able to de-noise images that it never “saw” during the training phase.
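The noising half of this experiment can be sketched in a few lines. What follows is a minimal illustration, not the procedure from the paper: the linear noise schedule, the number of steps and the list of 64 random values standing in for an image are all assumptions chosen for readability. It shows that the original signal fades step by step, and that when the added noise is known exactly the original can be recovered; a trained model has to estimate that noise instead.

```python
import math
import random

random.seed(0)

# Stand-in for an image: 64 pixel values in [-1, 1] (assumption).
image = [random.uniform(-1.0, 1.0) for _ in range(64)]

# Assumed linear noise schedule over T steps.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# signal_kept[t]: fraction of the original signal surviving after step t.
signal_kept = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    signal_kept.append(running)

def add_noise(x0, t, noise):
    """Jump directly to noising step t by mixing image and noise."""
    a = signal_kept[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]

def remove_noise(xt, t, noise):
    """Invert add_noise when the noise is known (a model must predict it)."""
    a = signal_kept[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a) for x, n in zip(xt, noise)]

noise = [random.gauss(0.0, 1.0) for _ in image]
noisy = add_noise(image, 500, noise)
recovered = remove_noise(noisy, 500, noise)
max_error = max(abs(r - x) for r, x in zip(recovered, image))

print("signal kept after first step:", signal_kept[0])
print("signal kept after last step: ", signal_kept[-1])
print("largest recovery error:", max_error)
```

The learning problem sits entirely in `remove_noise`: here the noise is handed over, whereas a diffusion model is trained to guess it from the noisy input alone.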

Another aspect of diffusion in physics that is of importance in image generation can be seen at the end of the definition of the concept as stated in Wikipedia (emphasis is ours):

diffusion is the movement of a substance from a region of high concentration to a region of low concentration without bulk motion.[4]

Diffusion doesn't capture the movement of a bounded entity (a bulk, a whole block of content); it is a mode of spreading that flexibly accommodates structure. Diffusion is "the gradual movement/dispersion of concentration within a body with no net movement of matter."[5] This characteristic makes it particularly apt at capturing multi-level relations between image parts without having to identify a source that constrains these relations. It gives it access to an implicit structure. Metaphorically, this can be compared to a process of looking for faces in clouds (or reading signs in tea leaves). We do not immediately see a face in a cumulus, but the faint movement of the mass stimulates our curiosity until we gradually delineate the nascent contours of a shape we can identify.

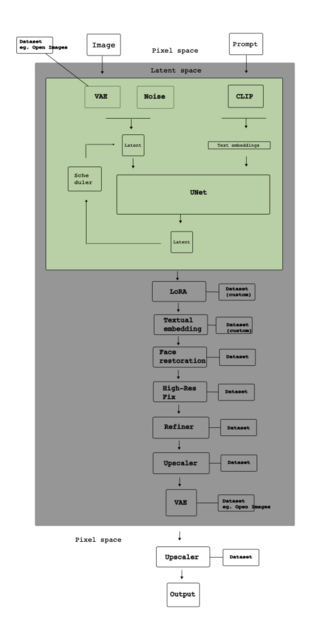

Stabilising diffusion

Diffusion as presented by Sohl-Dickstein and colleagues is at the basis of many current models for image generation. However, no user deals directly with diffusion as demonstrated in the paper.[7] It is encapsulated into software, and a whole architecture mediates between the algorithm and its environment (see diagram of the process). For instance, Stable Diffusion is a model that encapsulates the diffusion algorithm and makes it tractable at scale. Rombach et al., the researchers behind the Stable Diffusion model, ported the diffusion technique to the latent space.[8] Instead of working on pixels, the authors performed the computation on compressed vectors of data and managed to reduce the computational cost of training and inference. They thereby popularised the use of the technique, making it accessible to a larger community of developers, and also added important features to the process of image synthesis:

By introducing cross-attention layers into the model architecture, we turn diffusion models into powerful and flexible generators for general conditioning inputs such as text or bounding boxes and high-resolution synthesis becomes possible in a convolutional manner.[9]
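The saving obtained by moving from pixels to the latent space can be made concrete with some simple arithmetic. The figures below are assumptions for illustration (a 512×512 RGB image, and a latent grid eight times smaller on each side with four channels, sizes commonly reported for early Stable Diffusion releases); the quoted passage is not the source of this exact calculation.

```python
# Assumed sizes, for illustration only.
image_height, image_width, image_channels = 512, 512, 3
downsampling = 8        # assumed reduction per side
latent_channels = 4     # assumed number of latent channels

# Number of values the diffusion process has to handle per image.
pixel_values = image_height * image_width * image_channels
latent_values = (
    (image_height // downsampling) * (image_width // downsampling) * latent_channels
)
reduction = pixel_values / latent_values

print("values per image in pixel space: ", pixel_values)
print("values per image in latent space:", latent_values)
print("reduction factor:", reduction)
```

Under these assumptions, every denoising step works on a small fraction of the values it would face in pixel space, which is where the reduced cost of training and inference comes from.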

But if diffusion is relatively stabilized in technical terms (input control and infrastructure), its adoption by increasingly large circles of users and developers has contributed to different forms of disruption, for better and for worse: parodies and deepfakes, political satire and revenge porn. Once in circulation, it moves both as a technical product and as images.

Furthermore, rhetorically, it becomes a metaphor within a set of nested metaphors that include the brain as computer, concepts such as 'hallucinations' or deep 'dreams' that respond to a more general cultural condition. As Zylinska notes:

We could perhaps suggest that generative AI produces what could be called an ‘unstable’ or ‘wobbly’ understanding – and a related phenomenon of ‘shaky’ perception. Diffusion [...] can be seen as an imaging template for this model.[10]

Still according to Zylinska, this metaphor posits instability as an organizing concept for the image more generally:

Indeed, it is not just the perception of images but their very constitution that is fundamentally unstable.[11]

As a concept, it is in line with a general condition of instability due to the extensive disruptions brought on by the flows of capital. The wobbly, risky, financial and logistical edifice that supports Stable Diffusion's development testifies to this. The company Stability AI, founded by former hedge fund manager Emad Mostaque, helped finance the transformation of the "technical" product into software available to users and powered by an expensive infrastructure. It also made it possible to sell it as a service. To access large scale computing facilities, Mostaque raised $100 million in venture capital.[12] His experience in the financial sector helped convince investors and secure the financial base. The investment was sufficient to give Stability a chance to enter the market. Moving from the computer lab to a working infrastructure required grounding the diffusion algorithm in another material environment comprising Amazon servers, the JUWELS Booster supercomputer, and tailor-made data centers around the world.[13] This scattered infrastructure corresponds to the global distribution of the company's legal structure: one leg in the UK and one leg in Delaware, the latter offering a welcoming tax environment for companies. Dense networks of investors and servers supplement code. In that perspective, the development of the Stable Diffusion algorithm is inseparable from risk investment. These risks take the concrete form of a long string of controversies and lawsuits, especially for copyright infringement, and the eventual departure of Mostaque from his position as CEO after aggressive press campaigns against his management. Across all its dimensions, the shaky nature of this assemblage mirrors the physical phenomenon Stable Diffusion's models simulate.

In short, stabilising diffusion means attending to a huge range of problems that happen simultaneously and require extremely different skills and competences: designing algorithms, optimizing statistics, identifying faulty GPUs, deciding on batch sizes in training, assessing the impact of different floating-point formats on training stability, securing investment and managing delays in payment, pushing back against legal actions, and, last but not least, aligning prompts and images.

How does diffusion create value? Or decrease / affect value?

The question of value needs to be addressed at different levels as we have chosen to treat diffusion as a complex of techniques, algorithm, software, metaphors and finance.

First, we can consider diffusion as an object concretised in a material form: the model. The model is at the core of a series of online platforms that monetize access to it. For a subscription fee, users can generate images. Its value stems from the model's ability to generate images in a given style (e.g., Midjourney), with good prompt adherence, and reasonably fast. It is a familiar value form for models: AI as a service that generates revenue and capitalizes on the size of a user base.

As the model is open source, it can also be shared and used in different ways. For instance, users can use the model locally without paying a fee to Stability AI. Alternatively, it can be integrated in peer-to-peer systems of image generation such as Stable Horde or shared installations through non-commercial APIs. In this case, the model gains value with adoption. And as interest grows, users start to build things with it, such as LoRAs, bespoke models, and other forms of conditioning. Through this burgeoning activity, the model's affordances grow. Its reputation increases as it enters different economies of attention where users gain visibility by tweaking it, or generating 'great art'.

In scientific circles, the model's value is measured by different metrics. Here, the object of necessity that travels across platforms and individual computers becomes an object of interest. What is at stake is a competition for scientific relevance where diffusion is a solution to a series of ongoing intellectual problems. Yet, we should not forget that computer science lives in symbiosis with the field of production and that many scientists are also involved in commercial ventures. For instance, the above-mentioned Robin Rombach gained a scientific reputation that can be evaluated through a citation index, but he was also involved in the company Stability AI. In the constant movement from academic research to production, the ability to experiment emerges as a shared value.[1] This is well captured by Patrick Esser, a lead researcher on diffusion algorithms, who defined the ideal contributor as someone who would “not overanalyze too much” and “just experiment”.[14] The valorization of experimentation even justifies the open source ethos prevalent in the diffusion ecosystem:

“It’s not that we're running out of ideas, we’re mostly running out of time to follow up on them all. By open sourcing our models, there's so many more people available to explore the space of possibilities.” [15]

Finally, if we consider their impact on the currencies of images, diffusion-based algorithms contribute significantly to a decrease in the value of the singular image. Although this trend started earlier and has been diagnosed several times (e.g., Steyerl[16]), the capacity of models to churn out endless visual outputs has accelerated it substantially. As MacKenzie and Munster wrote in their seminal piece "Platform Seeing," the value of the image ensemble (i.e., the model) grows at the expense of the singular image: "images both lose their stability and uniqueness yet gather aggregated force".[17] Their difference in value is implied in the algorithmic training process. To learn how to generate images, algorithms such as Stable Diffusion, Flux, DALL-E or Imagen need to be fed with examples. These images are given to the algorithm one by one. Through its learning phase, the algorithm treats them as moments of an uninterrupted process of variation, not as singular specimens. At this level, the process of image generation is radically anti-representational. It treats the image as a mere moment: a variation among many. Hence, it is the model that gains singularity.

What is its place/role in techno cultural strategies?

As a concept that traverses multiple dimensions of culture and technology, diffusion raises questions about strategies operating on different planes. In that sense, it constitutes an interesting lens to discuss the question of the democratization of generative AI. As a premise, we adopt the view set forth in the paper "Democratization and generative AI image creation: aesthetics, citizenship, and practices"[19] that the relation between genAI and democracy can be reduced neither to one of apocalypse, where artificial intelligence signals the end of democracy, nor to an inevitable movement towards a better optimized future where a more egalitarian world emerges out of technical progress. Both democracy and genAI are unaccomplished projects, and both are risky works in progress. Instead of simply lamenting genAI's "use for propaganda, spread of disinformation, perpetuation of discriminatory stereotypes, and challenges to authorship, authenticity, originality", we should see it as an opportunity to situate "the aestheticization of politics within democracy itself".[20] [2] In short, we think that the relation between democracy and genAI should not be framed as one of impact (where democracy as a fully achieved project pre-exists; AI's impact on democracy), but as one where democracy is still to come. And, in the same movement, we should firmly oppose the view that AI is a fully formed entity waiting to be governed, to be democratized. That is, the making of AI should in itself be an experiment in democracy. In this view, both entities inform each other. Diffusion as a transversal concept is a device to identify key elements of this mutual 'enactment'. They pertain to different dimensions of experience, sociality, technology and finance; to different levels of logistics and different scales. The dialectics of diffusion and stability we tried to characterize is therefore marked by loosely coordinated strategies that include (in no particular order):

- providing concrete resources such as the model's weights and source code without fee and under a free license (democracy as equal access to resources)

- producing and disseminating different forms of knowledge about AI: papers, code, tutorials (democratization of knowledge)

- offering different levels of engagement: as a user of a service, as a dataset curator, as a LoRA creator, as a Stable Horde node manager (democratization as increase of participation)

- freedom of use in the sense that the platform's censorship is up for debate or can be bypassed locally (democracy as (individual) freedom of expression and deliberation)

And, more polemically, the dialectics of diffusion and stability have so far taught us how hard it is to do these things under the constraints of the capitalist mode of production and its financial attachments.

[1] Joanna Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” Articles, Media Theory 8, no. 1 (2024): 230, 1.

[2] Pei, Yuhan, Ruoyu Wang, Yongqi Yang, Ye Zhu, Olga Russakovsky, and Yu Wu. “SOWing Information: Cultivating Contextual Coherence with MLLMs in Image Generation.” 2024. https://arxiv.org/abs/2411.19182.

[3] Sohl-Dickstein, Jascha, Eric A. Weiss, Niru Maheswaranathan, and Surya Ganguli. “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.” Proceedings of the 32nd International Conference on International Conference on Machine Learning - Volume 37 (Lille, France), ICML’15, JMLR.org, 2015, 2256–65.

[4] Wikipedia, s.v. “Diffusion,” last modified August 12, 2025, https://en.wikipedia.org/wiki/Diffusion.

[5] “Diffusion.”

[6] Sohl-Dickstein et al., “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.”

[7] Sohl-Dickstein et al., “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.”

[8] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer, “High-Resolution Image Synthesis with Latent Diffusion Models,” arXiv preprint arXiv:2112.10752, last revised April 13, 2022, https://arxiv.org/abs/2112.10752.

[9] Rombach et al., “High-Resolution Image Synthesis with Latent Diffusion Models,” 1.

[10] Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” 244.

[11] Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” 247.

[12] Kyle Wiggers, “Stability AI, the Startup behind Stable Diffusion, Raises $101M,” Tech Crunch, October 17, 2022, https://techcrunch.com/2022/10/17/stability-ai-the-startup-behind-stable-diffusion-raises-101m/.

[13] Jülich Supercomputing Centre, JUWELS Booster Overview, accessed August 12, 2025, https://apps.fz-juelich.de/jsc/hps/juwels/booster-overview.html.

[14] Sophia Jennings, “The Research Origins of Stable Diffusion,” Runway Research, May 10, 2022, https://research.runwayml.com/the-research-origins-of-stable-difussion.

[15] Jennings, “The Research Origins of Stable Diffusion.”

[16] Hito Steyerl, “In Defense of the Poor Image,” in The Wretched Of The Screen (Sternberg Press, 2012).

[17] Adrian MacKenzie and Anna Munster, “Platform Seeing: Image Ensembles and Their Invisualities,” Theory, Culture & Society 36, no. 5 (2019): 3–22, https://doi.org/10.1177/0263276419847508.

[19] Maja Bak Herrie et al., “Democratization and Generative AI Image Creation: Aesthetics, Citizenship, and Practices,” AI & SOCIETY 40, no. 5 (2025): 3495–507, https://doi.org/10.1007/s00146-024-02102-y.

[20] Bak Herrie et al., “Democratization and Generative AI Image Creation: Aesthetics, Citizenship, and Practices,” 3497.

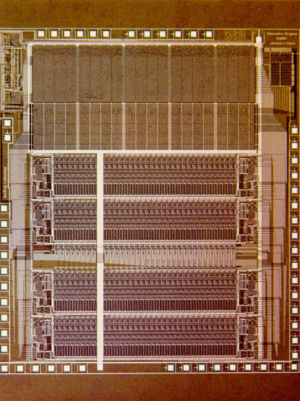

GPU (Graphics Processing Unit)

Gefion – Denmark's new AI supercomputer, launched in October 2024 – is powered by 1,528 H100s, a GPU developed by Nvidia. This object, the Graphics Processing Unit, is a key element that, arguably, paves the way for Denmark's sovereignty and heavy ploughing in the AI world. Beyond all the sparkles, this photo shows the importance of the GPU object not only as a technical matter, but also as a political and powerful element of today's landscape.

Since the boom of Large Language Models, Nvidia's graphics cards and GPUs have become somewhat familiar and mainstream. The GPU powerhouse, however, has a long history that predates its central position in generative AI, including the Stable Diffusion ecosystem: casual and professional gaming, cryptocurrency mining, and just the right processing for n-dimensional matrices that translate pixels and words into latent space and vice versa.

What is a GPU?

A graphics processing unit (GPU) is an electronic circuit focused on processing images in computer graphics. Originally designed for early videogames, such as arcade games, this specialised hardware performs calculations for generating graphics in 2D and, later, in 3D. While most computational systems have a Central Processing Unit (CPU), the generation of images, for example 3D polygons, requires a different set of mathematical calculations. GPUs gather instructions for video processing, light, 3D objects, textures, etc. The range of GPUs is vast, from small and cheap processors integrated into phones and smaller devices, to state-of-the-art graphics cards piled in data centres to compute massive language models.

What is the network that sustains the GPU?

From the earth to the latent space

Like many other circuits, GPUs require a very advanced production process that starts with mining for both common and rare minerals (silicon, gold, hafnium, tantalum, palladium, copper, boron, cobalt, tungsten, etc). Their life-cycle and supply chain locate GPUs in a material network with the same issues as other chips and circuits: conflict minerals, labour rights, by-products of manufacturing and distribution, and waste. When training or generating AI responses, the GPU is the object that consumes the most energy in relation to other computational components. A commercially available home-user GPU like the GeForce RTX 5090 can consume 575 watts, almost double that of a CPU in the same category. Industry GPUs like the A100 draw power of a similar order, with the caveat that they are usually managed in massive data centres. Allegedly, the training of GPT-4 used 25,000 of the latter for around 100 days; at a few hundred watts per unit, that is on the order of ten megawatts of sustained draw for the GPUs alone, comparable to about a million LED bulbs left on for the whole period. This places GPUs in a highly material network that is for the most part invisible, yet enacted with every prompt, LoRA training, and generation request.
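The scale of such figures is easier to judge when power (watts, a rate) is kept apart from energy (watt-hours, a rate sustained over time). The sketch below is a back-of-the-envelope estimate only: the GPU count and duration repeat the alleged figures in the text, the 400 watts per data-centre GPU is an assumption, and cooling, networking and other data-centre overheads are left out, so real totals would be higher.

```python
# All figures are assumptions for a rough estimate.
gpu_count = 25_000
watts_per_gpu = 400          # assumed draw per data-centre GPU
days = 100

# Power: how much is drawn at any one moment.
total_power_mw = gpu_count * watts_per_gpu / 1_000_000    # megawatts

# Energy: that draw sustained over the whole period.
hours = days * 24
total_energy_gwh = total_power_mw * hours / 1_000          # gigawatt-hours

# Same arithmetic for a single home GPU at the 575 W quoted above.
home_kwh_per_hour = 575 / 1_000

print(f"sustained GPU draw: {total_power_mw} MW")
print(f"energy over {days} days: {total_energy_gwh} GWh")
print(f"home GPU at full load: {home_kwh_per_hour} kWh per hour")
```

Changing `watts_per_gpu` or adding an overhead factor shifts the totals proportionally, which is why published estimates for the same training run can differ widely.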

Perhaps the most important aspect of the GPU is that this object is a, if not the, translation piece: most of the calculations to and from 'human-readable' objects, like a painting, a photograph or a piece of text in pixel space, into an n-number of matrices, vectors, and coordinates, or latent space, are made possible by the GPU. In many ways, the GPU is a transition object, a door into and out of a, sometimes grey-boxed, space.

The GPU cultural landscape and its recursive publics

It is also a mundane object, historically used by gamers, thus owing much of its history and design to gaming culture. Indeed, the development of computer games fuelled the design and development of current GPU capabilities. In our own study, we use an Nvidia RTX 3060ti super, bought originally for gaming purposes. When talking about current generative AI, populated by major tech players and trillion-valued companies and corporations, we want to stress the overlapping history of digital cultures, like gaming and gamers, that shape this object.

Being a material and mundane object also allows for paths towards autonomy. While our GPU was originally used for gaming, it opened a door for detaching from Big Tech industry players. With some tuning and self-managed software, GPUs can run open models, from mid-size and large language models to Stable Diffusion. That is, we can generate our own images, or train LoRAs, without depending on ChatGPT, Copilot, or similar offers. Indeed, plenty of enthusiasts follow this path, running, tweaking, branching, and reimagining open source models thanks to GPUs. CivitAI is a great example of a growing universe of models, with different implementations of autonomy: from niche communities of visual culture working on representation to communities actively developing prohibited, censored, fetishised, and specifically pornographic images. CivitAI hosts alternative models for image generation responding to specific cultural needs, like a specific manga style or anime character, greatly detached from the interests of Silicon Valley's AI blueprints or nations' AI sovereignty imaginaries.

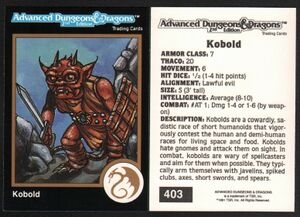

A horde of graphic cards

The means of production of these communities, alongside collaboratively labelled data, is the GPU. AI Horde (also known as Stable Horde), for example, is a distributed cluster of 'workers', i.e. GPUs, that use open models, including many community-generated ones from CivitAI. The volunteer and crowd-sourced project acts as a hub that directs image generation requests from different interfaces (such as ArtBot, Mastodon, or other platforms) towards individual GPU workers in the network. As part of our project, our GPU is (sometimes) connected to this network, offering image generation (using selected models) to any request from the interfaces (websites, APIs, etc).

"Why should I use the Horde instead of a free service?

Because when the service is free, you're the product! Other services running on centralized servers have costs—someone has to pay for electricity and infrastructure. The AI Horde is transparent about how these costs are crowdsourced, and there is no need for us to change our model in the future. Other free services are often vague about how they use your data or explicitly state that your data is the product. Such services may eventually monetize through ads or data brokering. If you're comfortable with that, feel free to use them. Finally, many of these services do not provide free REST APIs. If you need to integrate with them, you must use a browser interface to see the ads."[4]

Projects like this one show that there is an explicit interest in alternatives to the mainstream generative AI landscape, based on collaboration strategies rather than a surveillance/monetisation model, also known as "surveillance capitalism", an extractive model that has become the economic standard of many digital technologies (in many cases with the full support of democratic institutions).[5] In this sense, AI Horde is a project that deviates towards a form of technical collaboration, producing its own models of exchange in the form of a kudos currency, which resembles a barter system where the main material is GPU processing power.
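The exchange logic can be pictured with a toy model. The sketch below is purely hypothetical: it does not use the AI Horde API or its actual kudos rules, and every name in it (`Hub`, `register`, `add_worker`, `submit`, `process_next`, the flat price of 10 kudos) is invented for illustration. It only shows the general idea of a hub that queues requests, hands them to volunteer workers, and moves credit from the requester to whoever contributed GPU time.

```python
from collections import deque

class Hub:
    """Hypothetical hub: routes requests to workers and tracks kudos."""

    def __init__(self, price=10):
        self.price = price            # invented flat cost per image
        self.kudos = {}               # account name -> balance
        self.queue = deque()          # pending (requester, prompt) pairs
        self.workers = deque()        # idle volunteer workers

    def register(self, name, starting_kudos=0):
        self.kudos[name] = starting_kudos

    def add_worker(self, name):
        self.kudos.setdefault(name, 0)
        self.workers.append(name)

    def submit(self, requester, prompt):
        self.queue.append((requester, prompt))

    def process_next(self):
        """Give the oldest request to the next idle worker, then settle."""
        if not self.queue or not self.workers:
            return None
        requester, prompt = self.queue.popleft()
        worker = self.workers.popleft()
        result = f"<image for '{prompt}' generated by {worker}>"
        self.kudos[requester] -= self.price   # requester spends kudos
        self.kudos[worker] += self.price      # worker earns them
        self.workers.append(worker)           # worker is idle again
        return result

hub = Hub()
hub.register("alice", starting_kudos=30)
hub.add_worker("our_gpu")
hub.submit("alice", "a kobold reading a book")
first_result = hub.process_next()
print(first_result)
print(hub.kudos)
```

No money changes hands in this picture; what circulates is a record of GPU time given and GPU time used.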

But perhaps more importantly, AI Horde not only shows the necessity and the role of alternative actors and processes in the AI ecosystem, but also the importance of the cultural upbringing of the wilder AI landscape. In a similar fashion to the manga and anime background of the CivitAI population, AI Horde is a project that evolved from groups interested in role-playing. The name horde reflects this imprinting, and the protocol comes from a previous project named "KoboldAI", in reference to the Kobold monster from the role-playing game Advanced Dungeons & Dragons. The material infrastructure of the GPU overlaps with a plethora of cultural layers, all with their own politics of value, collaboration, and ethics, influencing alternative imaginaries of autonomy. And many aspects of the recursive publics in this landscape are technically operationalized through the GPU object.

How did GPUs evolve through time?

The geometry engine

Artist and researcher Ben Gansky asks in a performance project: "Do graphic processing units have politics?"[7] He gracefully narrates Ivan Sutherland's 1961 attempt to create an interactive and responsive relation to computers. Together with David Evans, Sutherland created a research group specialising in computer graphics. Evans and Sutherland's students would become founders of important digital imaging organisations like Pixar, Adobe, and Atari, among others.[8] One of these students, James H. Clark, became a key figure at Xerox PARC, an innovation hub widely known for the creation of the graphical user interface (GUI), the computer mouse and desktop, electronic paper, and much more. Clark also pioneered the first GPU, called "the geometry engine", and founded Silicon Graphics Inc. (SGI), a company focusing on producing 3D graphics workstations.[9]

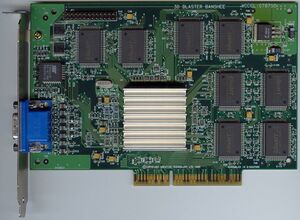

Plenty of companies followed and pushed the development of computer hardware for graphics. The first arcade systems and videogame consoles used ad-hoc hardware and software, eventually producing compatible hardware components known as video cards. The 1990s saw the adoption of real-time 3D graphics cards, such as the popular Voodoo models from 3dfx Interactive, a company that was eventually acquired by Nvidia in the 2000s.

To the moon

The title of Gansky's performance is a reference to Langdon Winner's classic paper "Do artifacts have politics?"[10] Winner asks about the inherent politics of technological implementations and design through the famous example of bridges built too low to allow public transport to pass, which thereby act as a techno-political device that subtly, but deterministically, controls the access of particular demographics in New York City.

During the late 2000s, GPUs became an unexpected bridge between computer enthusiasts and alternative economies. The idea of a digital currency detached from banks and other central institutions took shape in the form of a decentralised protocol, Bitcoin, completely regulated by mathematical calculations. The invention of Bitcoin, the first cryptocurrency based on blockchain technology, altered the supply and demand game for GPUs. Suddenly, this object was not only a gaming artefact, but also a calculation machine to 'mine' cryptocurrency and, for some, a way to become rich within a few years. This made the GPU object not only a device to, technically, create economic value, but also a political means of production outside of the regular economic channels. In between libertarian and rebel economic dreams, the GPU became a bridge for a new imaginary of monetary autonomy.
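What 'mining' involves computationally can be shown with a toy proof-of-work loop. This is a simplified sketch, not Bitcoin's actual block format or difficulty rule: the block text, the difficulty of four leading zero hex digits and the function name `mine` are assumptions. The point is that the search is brute force, with each candidate independent of the others, which is why hardware built for massively parallel calculation suited it.

```python
import hashlib

def mine(block_data, difficulty=4):
    """Try nonces until the SHA-256 digest starts with `difficulty` zeros."""
    target = "0" * difficulty
    nonce = 0
    while True:
        # Each candidate is hashed on its own; no attempt depends on another.
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1

nonce, digest = mine("example block", difficulty=4)
print("nonce found:", nonce)
print("digest:", digest)
```

Finding the nonce takes many attempts, while checking it takes a single hash; raising `difficulty` by one multiplies the expected number of attempts by sixteen.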

The prediction engine

The design of the GPU was, although not by intention, also a very good fit for the math problems machine learning researchers were facing, in particular for the training of neural networks. Parallel processing made GPUs a perfect candidate for this type of work, and Nvidia released an API in 2007 to allow researchers (as well as game developers) to expand the interaction and programmability of their GPU cards.
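Why parallel hardware fits this work can be seen in a plain matrix multiplication, the core operation of a neural network layer. The sketch below is pure Python for readability (the function name `matmul` and the small matrices are illustrative assumptions). It runs sequentially, but each output cell depends only on one row and one column of the inputs, so in principle all cells could be computed at the same time, which is what the many cores of a GPU are used for.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # This cell needs only row i of a and column j of b;
            # no other cell's result is required to compute it.
            result[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return result

inputs = [[1.0, 2.0], [3.0, 4.0]]               # two samples, two features each
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]   # two features -> three outputs
outputs = matmul(inputs, weights)
print(outputs)
```

On a GPU the two outer loops disappear: each `(i, j)` pair is handed to its own thread.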

A canonical convolutional neural network for image classification, AlexNet, was trained using 2 GPUs in 2012. This network was co-developed by one of the founders and key figures of OpenAI, Ilya Sutskever, and inaugurated the mainstream use of GPUs for machine learning. Some years later, 10,000 of these objects of interest were used to train GPT-3 using a dataset of 3 trillion words. Its immediate successor, GPT-3.5, would be the first model behind the massively popular ChatGPT interface. All major LLMs are trained in massive hubs of GPUs (usually cloud-based), and Nvidia now produces GPUs aimed both at casual and professional gamers and at the industrial AI market. Its A- and H-series, marketed towards cloud and data centres for AI development and training, feed the current demand in the extended AI industry.

Today, the GPU is very much an object of necessity in the AI landscape. Governments, companies, and every institution that attempts to incorporate or participate in this technology require access to GPUs in one form or another. The GPU, however, remains an object brought about by distinct cultural needs, politics, and curiosity.

[1] Adil Lheureux, “A Complete Anatomy of a Graphics Card: Case Study of the NVIDIA A100,” Paperspace Blog, 2022, https://blog.paperspace.com/a-complete-anatomy-of-a-graphics-card-case-study-of-the-nvidia-a100/ .

[2] David Hogan, “Denmark Launches Leading Sovereign AI Supercomputer to Solve Scientific Challenges With Social Impact,” NVIDIA Blog, October 23, 2024, https://blogs.nvidia.com/blog/denmark-sovereign-ai-supercomputer/ .

[3] Design Life-Cycle, accessed August 18, 2025, http://www.designlife-cycle.com/.

[4] AI Horde, “Frequently Asked Questions,” accessed August 18, 2025, https://aihorde.net/faq.

[5] Shoshana Zuboff, “Surveillance Capitalism or Democracy? The Death Match of Institutional Orders and the Politics of Knowledge in Our Information Civilization,” Organization Theory 3, no. 3 (2022): 26317877221129290, https://doi.org/10.1177/26317877221129290.

[6] Kobold TSR card, accessed August 18, 2025. https://www.bonanza.com/listings/1991-TSR-AD-D-Gold-Border-Fantasy-Art-Card-403-Dungeons-Dragons-Kobold-Monster/1756878302?search_term_id=202743485

[7] Ben Gansky (director), Do Graphics Processing Units Have Politics?, video recording, December 15, 2022, https://www.youtube.com/watch?v=pK_mHfpug8I.

[8] Jacob Gaboury, Image Objects: An Archaeology of Computer Graphics (Cambridge, MA: The MIT Press, 2021), https://doi.org/10.7551/mitpress/11077.001.0001.

[9] J. H. Clark, “The Geometry Engine: A VLSI Geometry System for Graphics,” SIGGRAPH Computer Graphics 16, no. 3 (1982): 127–133, https://doi.org/10.1145/965145.801272.

[10] Langdon Winner, “Do Artifacts Have Politics?” Daedalus 109, no. 1 (1980): 121–136.

[11] The Geometry Engine of James H. Clark (Wikimedia commons), accessed August 20, 2025.

[12] 3D blaster (voodoo) banshee graphic card (Wikimedia commons), accessed August 20, 2025. https://en.wikipedia.org/wiki/3dfx

[13] Bitcoin mining farm (Wikimedia commons), accessed August 20, 2025. https://en.wikipedia.org/wiki/Bitcoin

[14] NVIDIA, NVIDIA H100 Tensor Core GPU, accessed August 18, 2025, https://www.nvidia.com/en-us/data-center/h100/.

Hugging Face

Hugging Face is a central, cohesive source of support and stability when exploring autonomous AI image creation. It is, simply put, a collaborative hub for AI development – not specifically targeted at AI image creation, but at generative AI more broadly (including speech synthesis, text-to-video, image-to-video, image-to-3D, and much more). It attracts amateur developers who use the platform to experiment with AI models, as well as professionals who draw on the expertise of the company or take the platform as a starting point for entrepreneurship. By making AI models, datasets and also processing power widely available, it can be labelled as an attempt to democratise AI and delink from the key commercial platforms, yet at the same time Hugging Face is deeply intertwined with numerous commercial interests. It is therefore suspended between more autonomous and peer-based communities of practice, and a need for more 'client-server' relations in model training, which generally depends on 'heavy' resources (stacks of GPUs) and specialised expertise.

What is the network that sustains Hugging Face?

Hugging Face is a platform, but what it offers more closely resembles an infrastructure for, in particular, training models. As such, Hugging Face is an object that operates in a space that is not typically seen by users of conventional generative AI. It is a pixel space for developers (amateurs or professionals) to use and interact with the computational models of a latent space (see Maps) and to specify advanced settings for model training (see LoRA), but also to access a material infrastructure of GPUs.

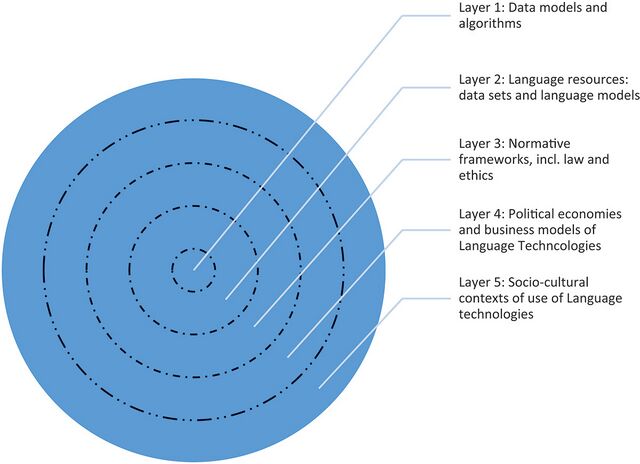

Companies involved in training foundation models have their own infrastructures (specialised racks of hardware and expertise), but they may make their models available on Hugging Face. This includes Stability AI as well as the Chinese company DeepSeek, and others. Users often upload their own datasets to experiment with the many models on Hugging Face, and typically these datasets are freely available on the platform for other users. But users also experiment in other ways. They ‘post-train’ the models and create LoRAs, for instance. Others create 'pipelines' of models, meaning that the outcome of one model can become the input for another model. At the time of writing there are nearly 500,000 datasets and 2,000,000 models freely available. Amidst this vertiginous expansion, there is a growing need to document the products exchanged on the platform and to standardise the information. The uneven adoption of model cards describing the contents of a model emerges as a response to the needs of a lively community where users share their creations and experiences. It is safe to say that this community has fostered specialised developer knowledge of how to experiment with computational models.
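
The notion of a 'pipeline' of models can be illustrated with a minimal sketch. It is not taken from Hugging Face or any of its libraries: the three functions below are hypothetical stand-ins for models (they only manipulate text and numbers), and the names `caption_to_prompt`, `prompt_to_image`, `upscale` and `run_pipeline` are assumptions made for the sake of illustration. What the sketch shows is only the principle that the output of one stage becomes the input of the next.

```python
# Hypothetical stand-ins for models; none of them calls a real model or library.

def caption_to_prompt(text: str) -> str:
    """Stand-in for a language model that expands a short caption into a prompt."""
    return f"{text}, cartoon style, sprite sheet"


def prompt_to_image(prompt: str) -> dict:
    """Stand-in for an image model; returns a description instead of pixels."""
    return {"prompt": prompt, "size": (64, 64)}


def upscale(image: dict) -> dict:
    """Stand-in for an upscaling model that doubles the image size."""
    width, height = image["size"]
    return {**image, "size": (width * 2, height * 2)}


def run_pipeline(stages, value):
    """Feed the output of each stage into the next one, in order."""
    for stage in stages:
        value = stage(value)
    return value


result = run_pipeline([caption_to_prompt, prompt_to_image, upscale], "a kobold")
print(result)
```

Swapping one stage for another (a different image model, an additional LoRA step) changes the result without changing the chain itself, which is arguably what makes pipelines attractive for experimentation.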

How has Hugging Face evolved through time?

Hugging Face was founded in 2016 by the French entrepreneurs Clément Delangue, Julien Chaumond, and Thomas Wolf. As early as 2017 the company received its first round of investment of $1.2 million[2], and as was reported at the time, Hugging Face was a "new chatbot app for bored teenagers. The New York-based startup is creating a fun and emotional bot. Hugging Face generates a digital friend so you can text back and forth and trade selfies."[3] In 2021 it received a $40 million investment to develop its "open source library for natural language processing (NLP) technologies." At that point (in 2021) there were 10,000 forks (i.e., branches of development projects) and "around 5,000 companies [...] using Hugging Face in one way or another, including Microsoft with its search engine Bing."[4]

This trajectory shows how the company has gradually moved from providing a service (a chatbot) to becoming a major (if not the) platform for AI development – now, not only in language technologies, but also (as mentioned) in speech synthesis, text-to-video, image-to-video, image-to-3D and much more. But it also shows an evolution of generative AI. Like today, early ChatGPT (developed by OpenAI and released in 2022) used large language models (LLMs), but offered very few parameters for experimentation: the prompt (textual input) and the temperature (the randomness and creativity of the model's output). Today, there are all kinds of components and parameters. This also explains the present-day richness of Hugging Face's interface: many of the commercial platforms do not offer this richness, and an intrinsic part of the delinking from them seems to be attached to a fascination with settings and advanced configurations (see also Interfaces).
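
How a single parameter such as temperature shapes the 'randomness' of an output can be sketched in a few lines. The sketch assumes the common technique of dividing a model's raw scores by the temperature before turning them into probabilities (a softmax); it does not claim to reproduce how ChatGPT or any specific model implements it. The scores and the candidate words are invented for illustration, and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in scores]
    highest = max(scaled)  # subtracted for numerical stability
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def sample(options, scores, temperature, rng):
    """Pick one option at random, weighted by the temperature-scaled probabilities."""
    weights = softmax_with_temperature(scores, temperature)
    return rng.choices(options, weights=weights, k=1)[0]


# Invented example: three candidate next words with made-up scores.
words = ["castle", "kobold", "spaceship"]
scores = [2.0, 1.0, 0.5]

low = softmax_with_temperature(scores, 0.2)   # almost always the top word
high = softmax_with_temperature(scores, 2.0)  # choices spread more evenly

rng = random.Random(0)  # fixed seed so that repeated runs give the same picks
print(low, high, sample(words, scores, 2.0, rng))
```

With a low temperature the highest-scoring word dominates, whereas a high temperature flattens the probabilities, so that less likely words are picked more often; this is one way of reading the 'creativity' attributed to the parameter.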

How does Hugging Face affect the creation of value?

Hugging Face has an estimated market value of $4.5 billion (as of 2023).[5] What does the exorbitant value of a platform unknown to the general public reflect?

On the one hand, the company has capitalised on the various communities of developers in, for instance, image and vision who experiment on the platform and share their datasets and LoRAs, but this is only a partial explanation.

Hugging Face is not only for amateur developers. On the platform one also finds an 'Enterprise Hub' where Hugging Face offers, for instance, advanced computing at higher scale with a more dedicated hardware setup ('ZeroGPU', see also GPU), and also 'Priority Support'. For this more commercial use, access is typically more restricted. In this sense, the platform has become innately linked to a plane of business innovation and has also teamed up with Meta to boost European startups in an "AI Accelerator Program".[6]

Notably, Hugging Face also collaborates with other key corporations in the business landscape of AI. One example is Amazon Web Services (AWS), where the collaboration allows users to make the models trained on Hugging Face available through Amazon SageMaker.[7] Nasdaq Private Market also lists a whole range of investors in Hugging Face (Amazon, Google, Intel, IBM, NVIDIA, etc.).[8]

The excessive (and growing) market value of Hugging Face reflects, in essence, the high degree of expertise that has accumulated within a company that has consistently sought to accommodate not only a cultural community, but also a business and enterprise plane of AI. Managing an infrastructure of both hardware and software for AI models at this scale is a highly sought-after expertise.

What is the role of Hugging Face in techno-cultural strategies?

Regardless of the Enterprise Hub, Hugging Face also remains a hub for amateur developers who experiment with generative AI beyond what the commercial platforms conventionally offer – and who also share their insights in the platform's 'Community' section. An example is the user 'mgane', who has shared a dataset of "76 cartoon art-style video game character spritesheets." The images come from "open-source 2D video game asset sites from various artists." mgane has used them on Hugging Face to build LoRAs on Stable Diffusion, that is, "for some experimental tests on Stable Diffusion XL via LORA and Dreambooth training methods for some solid results post-training."[10]

A user like mgane is arguably both embedded in a specific 2D gaming culture and equipped with the developer skills necessary to access and experiment with models in the command line interface. However, users can also access the many models on Hugging Face through more graphical user interfaces like Draw Things, which allows for accessing and combining models and LoRAs to generate images, and also for training one's own LoRAs (see Interfaces).

How does Hugging Face relate to autonomous infrastructures?

Looking at Hugging Face, the separation of community labour from capital interests (i.e., 'autonomy') in generative AI does not seem to be an either-or. Rather, the dependencies of autonomous generative AI seem to be in constant movement, gravitating from 'peer-to-peer' communities towards 'client-server' relations that are more easily capitalised. This may be due to the high level of expertise and the technical requirements that the infrastructures of generative AI demand, but it is not without consequence.

When, as noted by the European Business Review, most tech companies in AI want to collaborate with Hugging Face, it is because the company offers an infrastructure for AI.[11] Or, rather, it offers a platform that performs as an infrastructure for AI – a "linchpin" that keeps everything in the production in position. As also noted by Paul Edwards, a platform seems to be, in a more general view, the new mode of handling infrastructures in the age of data and computation.[12] Working with AI models is a demanding task that requires expertise, hardware and organisation of labour, and what Hugging Face offers is speed, reliability, and not least agility in a world of AI that is in constant flux, and where new models and techniques are introduced on an almost monthly basis.

With its 'linchpin status' Hugging Face builds on already existing infrastructures, such as the flows of energy and water necessary to make the platform run. It also relies on social and organisational infrastructures, such as those of both start-ups and cultural communities. At the same time, however, it also reconfigures these relations – creating cultural, social and commercial dependencies on Hugging Face as a new 'platformed' infrastructure for AI.

[1] Aryan V S (@a-r-r-o-w), “Caching Is an Essential Technique Used in Diffusion Inference Serving,” Hugging Face, last modified August 2025, https://huggingface.co/posts/a-r-r-o-w/278025275110164.

[2] “Hugging Face,” Wellfound, accessed August 11, 2025, https://wellfound.com/company/hugging-face/funding.

[3] Romain Dillet, “Hugging Face Wants to Become Your Artificial BFF,” TechCrunch, March 9, 2017, https://techcrunch.com/2017/03/09/hugging-face-wants-to-become-your-artificial-bff/.

[4] Romain Dillet, “Hugging Face Raises $40 Million for Its Natural Language Processing Library,” TechCrunch, March 11, 2021, https://techcrunch.com/2021/03/11/hugging-face-raises-40-million-for-its-natural-language-processing-library/.

[5] “Hugging Face,” Sacra, accessed August 11, 2025, https://sacra.com/c/hugging-face/.

[6] “META Collaboration Launches AI Accelerator for European Startups,” Yahoo Finance, March 11, 2025, https://finance.yahoo.com/news/meta-collaboration-launches-ai-accelerator-151500146.html.

[7] “Amazon,” Hugging Face, accessed August 11, 2025, https://huggingface.co/amazon.

[8] “Hugging Face,” Nasdaq Private Market, accessed August 11, 2025, https://www.nasdaqprivatemarket.com/company/hugging-face/.

[9] “Hugging Face: Why Do Most Tech Companies in AI Collaborate with Hugging Face?” The European Business Review, accessed August 11, 2025, https://www.europeanbusinessreview.com/hugging-face-why-do-most-tech-companies-in-ai-collaborate-with-hugging-face/.

[10] mgane, “2D_Video_Game_Cartoon_Character_Sprite-Sheets,” Hugging Face, accessed August 11, 2025, https://huggingface.co/datasets/mgane/2D_Video_Game_Cartoon_Character_Sprite-Sheets.

[11] “Hugging Face: Why Do Most Tech Companies in AI Collaborate with Hugging Face.”

[12] Paul N. Edwards, “Platforms Are Infrastructures on Fire,” in Your Computer Is on Fire, ed. Thomas S. Mullaney, Benjamin Peters, Mar Hicks, and Kavita Philip (Cambridge, MA: MIT Press, 2021), 197–222. https://doi.org/10.7551/mitpress/10993.003.0021

Interfaces to autonomous AI

Interfaces to generative AI come in many forms. There are graphical user interfaces to the models of generative AI; interfaces between different types of software, such as an API (Application Programming Interface) through which one can integrate a model into other software; and on a material plane, there are also interfaces to the racks of servers that run the models, or between them.

What is of particular interest here – when navigating the objects of interest and necessity – is, however, the user interface to autonomous AI image generation: the ways in which a user (or developer) accesses the 'latent space' of computational models (see Maps). A computational model is not visible as such. Therefore, the user's first encounter with AI is typically through an interface that renders the flow of data tangible in one form or the other. How does one access and experiment with Stable Diffusion and autonomous AI?

What is the network that sustains the interface?

Most people who have experience with AI image creation will have used flagship generators such as Microsoft's Bing Image Creator, OpenAI's DALL-E or Adobe Firefly. Here, the image generator interface is often integrated into other corporate services. Bing, for instance, is not merely a search engine, but also integrates all the other services offered by Microsoft, including Microsoft Image Creator. The Image Creator is, as expressed in the interface itself, meant to leave users "surprised" and "inspired", or to let them "explore ideas" (i.e., be creative). There is, in other words, an expected affective smoothness in the interface – a simplicity and low entry threshold for the user that perhaps also explains the widespread preference for these commercial platforms. What is noticeable in this affective smoothness (besides the integration into the platform universes of Microsoft, Adobe or OpenAI) is that users are offered very few parameters in the configuration; basically, the interaction with the model is reduced to a prompt. Interfaces to autonomous AI vary significantly from this in several ways.

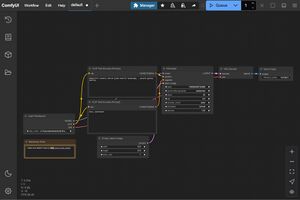

First of all, not all of them offer a web-based interface. The interfaces for generating images with Stable Diffusion therefore also vary, and there are many options depending on the configuration of one's own computer. ComfyUI, for instance, is commonly used with models that can be run locally and employs a node-based workflow, making it particularly suitable for visually reproducing 'pipelines' of models (see also Hugging Face). It runs on Windows, macOS and Linux. Draw Things is suitable for macOS users. ArtBot is another example that has a web interface as well as integration with Stable Horde, allowing users to generate images in a peer-based infrastructure of GPUs (as an alternative to the commercial platforms' cloud infrastructure).