Variational Autoencoder, VAE

With the variational autoencoder (VAE), we enter the nitty-gritty of our guided tour: the intricacies of the generation process. Let's return to an alternative version of the map of latent space, found in the maps entry.

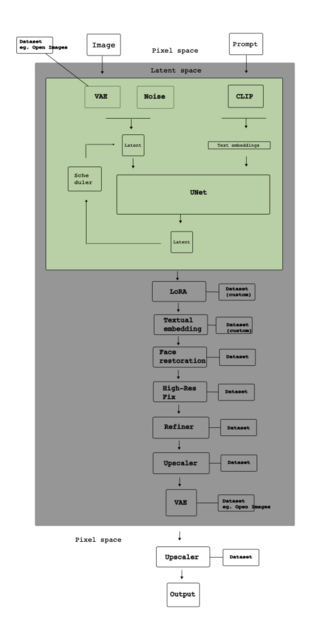

There are various possible inputs to Stable Diffusion models. As represented above, the best known are text and images. When a user selects an image and a prompt, these are not sent directly to the diffusion algorithm per se (the grey area in the diagram). They are first encoded into meaningful variables. The encoding of text is often performed by an encoder named CLIP, and the encoding of images is carried out by a variational autoencoder (VAE). As the diagram shows, many operations happen inside the grey area. This is where the work of generation proper takes place. Inside this area, the latent space, operations are not performed directly on pixels but on lighter statistical representations called latents. Before leaving that area, the generated images pass through a VAE again. This time the VAE acts as a decoder and is responsible for translating the result of the diffusion process, the latents, back into pixels. Encoding into latents and decoding back into pixels, VAEs are, as Efrat taig puts it, bridges between spaces: between pixel space and latent space. [1]
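
To make "lighter" concrete, here is a small Python sketch. It assumes the figures commonly cited for Stable Diffusion v1-style VAEs (8x spatial downsampling, 4 latent channels); the function names are hypothetical and no real model is loaded. It also hints at why latents are called statistical: a VAE encoder outputs a distribution (a mean and a log-variance for each latent value) rather than a single point, and a latent is sampled from it.

```python
import math
import random

# ASSUMPTION: figures typical of Stable Diffusion v1-style VAEs
# (8x spatial downsampling, 4 latent channels); other models differ.
DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(height, width):
    """(channels, height, width) of the latent for an RGB image."""
    return (LATENT_CHANNELS, height // DOWNSAMPLE, width // DOWNSAMPLE)


def compression_ratio(height, width):
    """How many pixel values correspond to one latent value."""
    pixel_values = 3 * height * width  # three RGB channels
    c, h, w = latent_shape(height, width)
    return pixel_values / (c * h * w)


def sample_latent(mean, log_var, rng):
    """Draw z = mean + sigma * eps with eps ~ N(0, 1) (reparameterization).

    The encoder's output is a mean and a log-variance per latent value;
    sigma is recovered as exp(0.5 * log_var).
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
print(sample_latent([0.2, -1.0], [-2.0, -2.0], random.Random(0)))
```

Under these assumptions, a 512 × 512 image holds 786,432 pixel values against 16,384 latent values, a 48-fold reduction, which is part of what makes working in latent space computationally cheaper than working on pixels.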

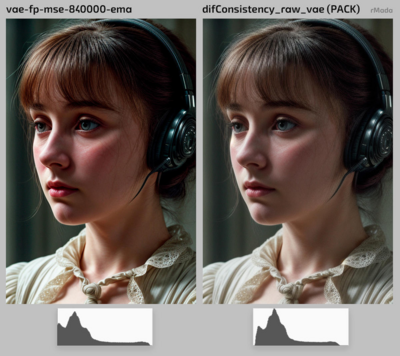

On platforms such as CivitAI, users train and share bespoke VAEs. As with LoRAs, VAEs are components that users can act upon to improve the behaviour of a model, fit it to their needs, and increase the value of their creations. The user rMada likens the effects of a VAE to overexposure or underexposure in photography.[2] When a VAE is too coarse, details are lost. Photographers, rMada says, "use the histogram to avoid such loss".[3] Indeed, creators of synthetic images understand very well the importance of such components of the generation pipeline. They recognize how VAEs affect one of the most sought-after qualities of an image: a sense of realism, achieved by incorporating as many detailed features as possible, for instance in a portrait.
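
The idea that a coarse VAE loses detail can be illustrated with a deliberately crude stand-in. The sketch below is not a VAE: its hypothetical `encode` simply averages blocks of samples and its `decode` repeats each average, a toy lossy bottleneck in which `factor` plays the role of how coarse the latent is. Applied to a row of stripes four samples wide, it reconstructs the stripes exactly when the blocks are no wider than the stripes, and flattens them to a uniform grey once the blocks are wider.

```python
def encode(row, factor):
    """Toy encoder (not a real VAE): average each block of `factor` samples."""
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def decode(latent, factor):
    """Toy decoder: repeat each averaged value `factor` times."""
    return [value for value in latent for _ in range(factor)]


def mean_abs_error(original, reconstructed):
    return sum(abs(a - b) for a, b in zip(original, reconstructed)) / len(original)


# A row of "pixels" with fine detail: alternating stripes four samples wide.
row = [float((i // 4) % 2) for i in range(64)]

for factor in (2, 4, 8):
    reconstructed = decode(encode(row, factor), factor)
    print(factor, mean_abs_error(row, reconstructed))
# 2 0.0
# 4 0.0
# 8 0.5
```

At `factor` 8 every block mixes a dark and a light stripe, so the decoder can only return their average, 0.5: the stripes, the fine detail, are gone. Real VAEs are learned and far more sophisticated, but they face a similar trade-off between compression and fine detail.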

The active exchange of VAEs on genAI platforms testifies to the flexibility of the image generation pipeline when it is open source. The likes and the attention VAEs gather show how they act as currencies among communities of image creators. Their constant refinement also testifies to the platforms' success in turning the enthusiasm of amateurs into technical expertise.

[1] taig, Efrat. “VAE. The Latent Bottleneck: Why Image Generation Processes Lose Fine Details.” Medium, May 31, 2025. https://medium.com/@efrat_37973/vae-the-latent-bottleneck-why-image-generation-processes-lose-fine-details-a056dcd6015e.

[2] rMada. “VAE RAW to Obtain Greater Detail.” CivitAI, June 17, 2023. https://civitai.com/articles/462/vae-raw-to-obtain-greater-detail.

[3] rMada, “VAE RAW to Obtain Greater Detail.”
