Variational Autoencoder, VAE


[[File:Diagram-process-stable-diffusion-002.png|none|thumb|400x400px|Diagram of pixel space and latent space in AI image generation with Stable Diffusion]]


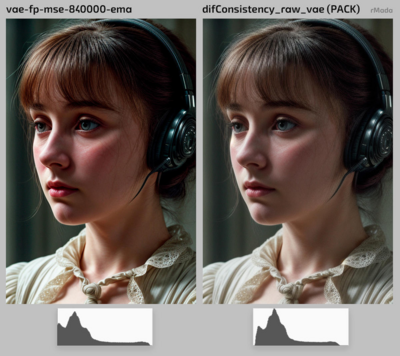

[[File:Image by rMada.png|none|thumb|400x400px|Encoders and the loss of details]]

Revision as of 18:07, 18 August 2025

With the VAE, we enter the nitty-gritty part of our guided tour: the intricacies of the generation process. Let's return to the diagram from the maps page.

Stable Diffusion models accept several kinds of input. As represented above, the best known are text and images. When a user supplies an image and a prompt, neither is sent directly to the diffusion algorithm. They are first encoded into meaningful variables. Text is often encoded by an encoder named CLIP, and a popular means of encoding images is the variational autoencoder (VAE). The diagram shows that many operations happen inside the grey area. This is where the work of generation happens. Inside this area, the latent space, operations are performed not directly on pixels but on lighter statistical representations called latents. Before leaving that area, the generated image passes through the VAE again. The VAE also acts as a decoder: it is responsible for translating the result of the diffusion process, the latents, back into pixels.
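To give a sense of how much "lighter" latents are than pixels, here is a minimal sketch in Python. It assumes the figures commonly cited for Stable Diffusion 1.x VAEs (each side of the image reduced by a factor of 8, and 4 latent channels); other models may differ. The functions `toy_encode` and `toy_decode` are hypothetical stand-ins invented for this illustration. They average and repeat blocks of values, which is not what a trained VAE does, but they show the same pattern of compressing, then reconstructing with some loss of detail.

```python
# Assumed values for Stable Diffusion 1.x VAEs; other models may differ.
DOWNSAMPLE = 8        # each image side shrinks by this factor
LATENT_CHANNELS = 4   # channels in the latent representation


def latent_shape(height, width):
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % DOWNSAMPLE or width % DOWNSAMPLE:
        raise ValueError("image sides must be multiples of 8")
    return (LATENT_CHANNELS, height // DOWNSAMPLE, width // DOWNSAMPLE)


def compression_ratio(height, width):
    """How many pixel values there are per latent value."""
    channels, h, w = latent_shape(height, width)
    return (3 * height * width) / (channels * h * w)


def toy_encode(image, block=2):
    """Toy stand-in for the encoder: average each block x block patch."""
    rows, cols = len(image), len(image[0])
    return [
        [
            sum(image[r + dr][c + dc] for dr in range(block) for dc in range(block))
            / block ** 2
            for c in range(0, cols, block)
        ]
        for r in range(0, rows, block)
    ]


def toy_decode(latent, block=2):
    """Toy stand-in for the decoder: repeat each value over a block x block patch."""
    image = []
    for row in latent:
        expanded = [value for value in row for _ in range(block)]
        image.extend(list(expanded) for _ in range(block))
    return image


if __name__ == "__main__":
    print(latent_shape(512, 512))       # (4, 64, 64)
    print(compression_ratio(512, 512))  # 48.0

    # A 4x4 checkerboard: fine detail that the toy encoder cannot preserve.
    checkerboard = [[(r + c) % 2 for c in range(4)] for r in range(4)]
    print(toy_decode(toy_encode(checkerboard)))  # every value becomes 0.5
```

Under these assumptions, a 512x512 RGB image becomes a 4x64x64 latent, about 48 times fewer values. The checkerboard round trip comes back as flat grey, a crude analogue of the loss of fine detail that an encoder and decoder pair can introduce.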