Maps: Difference between revisions


== Mapping 'objects of interest and necessity' ==

If one considers generative AI as an object, there is also a world of ‘para objects’, surrounding AI and shaping its reception and interpretation in the form of maps or diagrams of AI. They are drawn by both amateurs and professionals who need to represent processes that are otherwise sealed off in technical systems, but more generally reflect a need for abstraction – a need for conceptual models of how generative AI functions. However, as [https://www.raggeduniversity.co.uk/wp-content/uploads/2018/08/sands-sup3.pdf Alfred Korzybski famously put it], one should not confuse the map with the territory: the map is not how reality is, but a representation of reality.

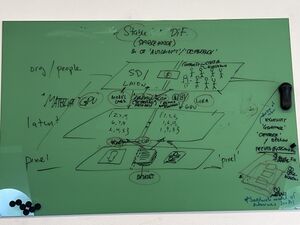

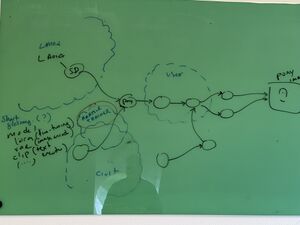

Following on from this, mapping the objects of interest in autonomous AI image creation is not to be understood as a map of what it 'really is'. Rather, it is a map of encounters with objects; encounters that can be documented and catalogued, but also positioned in a spatial dimension – representing a 'guided tour', and an experience of what objects are called, how they look, how they connect to other objects, communities or underlying infrastructures (see also [[Objects of interest and necessity]]). Perhaps the map can even be used by others to navigate autonomous generative AI and create their own experiences? Importantly, what is particular about the map of this catalogue of objects of interest and necessity is that it is an attempt to map autonomous generative AI. In other words, it does not map what is otherwise concealed in, say, OpenAI's DALL-E or Adobe Firefly. In fact, we know very little of how to navigate such proprietary systems, and one might speculate whether a complete map of their relations and dependencies even exists.

[[File:Map pony image process.jpg|thumb|Map of generating a pony image]]

Perhaps because of this lack of overview and insight, maps and cartographies do not just shape the reception and interpretation of generative AI, but can also be regarded as objects of interest and necessity in themselves – intrinsic parts of AI’s existence. Generative AI depends on an abundance of cartography to model, shape, navigate, and also negotiate and criticise its being in the world. There seems to be an inbuilt cultural need to 'map the territory', and the collection of cartographies and maps is therefore also what makes AI a reality – making AI real by externalising its abstraction onto a map, so to speak.

=== A map of 'objects of interest and necessity' (autonomous AI image generation) ===

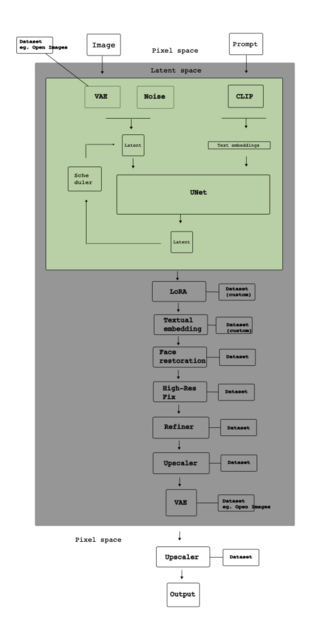

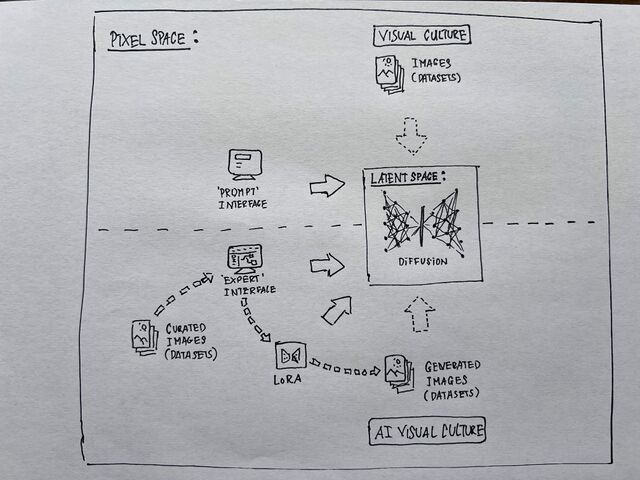

To enter the objects of autonomous AI image generation, a map that separates the territories of ‘pixel space’ from ‘latent space’ can be useful as a starting point – that is, a map that separates the objects you see from those that cannot be seen because they exist in a more abstract, computational space.

==== Latent space ====

[[Latent space]] consists of computational models and is a highly abstract space in which the behaviour of the different models can be difficult to explain. Very briefly put, images are encoded into a compressed representation (using a [[Variational Autoencoder, VAE]]) and mixed with noise, and a model then learns how to remove the noise and decode the result back into an image, a process also known as [[diffusion]].
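
The noising step can be made a little more concrete with a small sketch. It is not the code of Stable Diffusion or of any particular library; it only assumes the commonly used formulation in which an encoded image is mixed with Gaussian noise according to a schedule. The function names, the linear schedule and the four-number 'latent' are illustrative assumptions.

```python
import math
import random

def alpha_bar_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives after each noising step
    (assumed linear schedule; the start and end values are illustrative)."""
    alpha_bars = []
    running = 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / max(steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(latent, alpha_bar, rng):
    """Mix a latent vector with Gaussian noise: sqrt(a) * x + sqrt(1 - a) * eps."""
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
             for x, e in zip(latent, noise)]
    return noisy, noise

def remove_noise(noisy, noise, alpha_bar):
    """Invert add_noise when the noise is known exactly.
    A trained model only estimates the noise; here it is given for illustration."""
    return [(y - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for y, e in zip(noisy, noise)]

rng = random.Random(0)
latent = [0.5, -1.0, 0.25, 2.0]            # stand-in for a VAE-encoded image
schedule = alpha_bar_schedule(1000)
slightly_noisy, _ = add_noise(latent, schedule[0], rng)    # early step: mostly image
mostly_noise, _ = add_noise(latent, schedule[-1], rng)     # last step: almost pure noise
```

In this simplified picture, training means teaching a model to guess the noise that was added, and generating an image means starting from noise and removing the estimated noise step by step before decoding the result.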

In the process of model training, [[Dataset|datasets]] are central. Many of the datasets that are used to train models are made by 'scraping' the internet. [https://commoncrawl.org Common Crawl], as an example, is a non-profit organisation that has built a repository of 250 billion web pages. [https://storage.googleapis.com/openimages/web/index.html Open Images] and [https://www.image-net.org ImageNet] are also commonly used as the backbone for the visual training of generative AI.

Contrary to common belief, there is not just one dataset used to make a model work, but multiple models and datasets to, for instance, reconstruct missing facial details or correct other bodily details (such as too many fingers on one hand), 'upscale' low-resolution images or 'refine' the details in an image. Importantly, when it comes to autonomous AI image creation, there is typically an organisation and a community behind each dataset and training process. [[LAION]] (Large-scale Artificial Intelligence Open Network) is a good example of this. It too is a non-profit community organisation that develops and offers free models and datasets. Stable Diffusion was trained on datasets created by LAION.

For a model to work, it needs to be able to understand the semantic relationship between text and image: say, how a tree is different from a lamp post, or how a photo is different from an 18th-century naturalist painting. The software [[Clip|CLIP]] (by OpenAI) is widely used for this, including by LAION. CLIP is capable of predicting which images can be paired with which text in a dataset. The annotation of images is central here. That is, for the dataset to be useful there need to be descriptions of what is in the images, what style they are in, their aesthetic qualities, and so on. Whereas ImageNet, for instance, crowdsources the annotation process, LAION uses Common Crawl to find HTML with <code><img></code> tags, and then uses the Alt Text to annotate the images (Alt Text is a descriptive text that acts as a substitute for visual items on a page, and is sometimes included in the image data to increase accessibility). This is a highly cost-effective solution, which has enabled its community to produce and make publicly available a range of datasets and models that can be used in generative AI image creation.
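
To illustrate the principle of using Alt Text as annotation, the following sketch collects image addresses and their Alt Text from a piece of HTML. It is a minimal illustration built on Python's standard <code>html.parser</code> module, not LAION's actual pipeline; the class name, the function name and the sample page are assumptions made for the example.

```python
from html.parser import HTMLParser

class ImgAltCollector(HTMLParser):
    """Collects (src, alt) pairs from <img> tags that carry non-empty Alt Text."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        # A missing alt attribute and an empty alt="" are both skipped.
        alt = (attributes.get("alt") or "").strip()
        if src and alt:
            self.pairs.append((src, alt))

def extract_image_text_pairs(html):
    """Return the list of (image address, Alt Text) pairs found in the HTML."""
    collector = ImgAltCollector()
    collector.feed(html)
    return collector.pairs

# Hypothetical page content, invented for this example.
sample_html = """
<html><body>
  <img src="https://example.org/tree.jpg" alt="An oak tree in a park">
  <img src="https://example.org/spacer.gif" alt="">
  <img src="https://example.org/lamp.jpg">
  <img src="https://example.org/painting.jpg" alt="18th-century naturalist painting of a heron"/>
</body></html>
"""

pairs = extract_image_text_pairs(sample_html)
for src, alt in pairs:
    print(src, "->", alt)
```

Only two of the four images in the sample end up as image-text pairs, which hints at why such a dataset mirrors how (and whether) people describe their images on the web.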

[[File:Diagram-process-stable-diffusion-002.png|none|thumb|640x640px|A diagram of AI image generation separating 'pixel space' from 'latent space' - what you see, and what cannot be seen (by Nicolas Maleve)]]

==== Pixel space ====

In pixel space, you find a range of visible objects that a typical user would normally meet. This includes the [[interfaces]] for creating images. In conventional interfaces like DALL-E or Bing Image Creator, users [[prompt]] in order to generate images. What is particular to autonomous and decentralised AI image generation is that the interfaces have many more parameters and ways to interact with the models that generate the images. They function more like 'expert' interfaces.

In pixel space one finds many objects of visual culture. Apart from the interface itself, this includes both all the images generated by AI and all the images used to train the models behind them. These images are, as described above, used to create [[Dataset|datasets]], compiled by crawling the internet and scraping images that all belong to different visual cultures – ranging, e.g., from museum collections of paintings to criminal records with mug shots.

Many users also have specific aesthetic requirements for the images they want to generate: say, to generate images in a particular manga style or setting. The expert interfaces therefore also contain the possibility to combine different models and even to post-train one's own model, also known as a [[LoRA]] (Low-Rank Adaptation). When images are shared on platforms like [https://danbooru.donmai.us Danbooru] (one of the first and largest image boards for manga and anime), they are typically well categorised – both descriptively ('tight boots', 'open mouth', 'red earrings', etc.) and according to visual cultural style ('genshin impact', 'honkai', 'kancolle', etc.). Therefore they can also be used to train more models.
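
Why a LoRA is so much lighter to train and share than a full model can be shown with a little arithmetic. In Low-Rank Adaptation the original weights of a layer stay untouched, and only two small matrices are trained whose product is added on top. The sketch below assumes this general formulation; the layer size (768 by 768), the rank (8) and all function names are illustrative and do not describe any specific model.

```python
def lora_parameter_counts(d_out, d_in, rank):
    """Return (full, lora): trainable numbers when adapting one weight matrix
    directly versus through two low-rank matrices B (d_out x rank) and A (rank x d_in)."""
    full = d_out * d_in
    lora = d_out * rank + rank * d_in
    return full, lora

def matmul(a, b):
    """Plain matrix product for small lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply_lora(weight, b, a, scale=1.0):
    """Adapted weight W' = W + scale * (B @ A); the original W is left unchanged."""
    delta = matmul(b, a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]

# Illustrative sizes only: one 768 x 768 layer adapted with rank 8.
full, lora = lora_parameter_counts(768, 768, 8)
print(full, lora)   # 589824 versus 12288 trainable numbers

# A tiny worked example with a 3 x 3 weight matrix and rank 1.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]      # 3 x 1
A = [[0.5, 0.0, -1.0]]         # 1 x 3
W_adapted = apply_lora(W, B, A)
```

Because only the small matrices need to be stored, a LoRA is a comparatively small file that can be shared on a platform and combined with a base model by whoever downloads it.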

A useful annotated and categorised dataset – be it for a foundation model or a LoRA – typically involves specialised knowledge of both the technical requirements of model training (latent space) and the aesthetics and cultural values of visual culture itself. This includes knowledge of common visual conventions, such as realism, beauty, or horror, and also (in the making of LoRAs) of more specialised conventions – say, a visual style that an artist wants to generate (see e.g. the generated images of Danish hip hop by [https://oerum.org/pico/projects/253 Kristoffer Ørum]).

[[File:Pixel-latent-space.jpg|none|thumb|640x640px|The map reflects the separation of pixel space from latent space; i.e., what is seen by users from the more abstract space of models and computation. Pixel space is the home of both conventional visual culture and a more specialised visual culture. Conventionally, image generation will involve simple 'prompt' interfaces, and models will be built on accessible images, scraped from archives on the Internet, for instance. The specialised visual culture takes advantage of the openness of Stable Diffusion to, for instance, generate specific manga or gaming images with advanced settings and parameters. Often, users build and share their own models, too, so-called 'LoRAs'. (by Christian Ulrik Andersen, Nicolas Maleve, and Pablo Velasco)]]

==== An organisational plane ====

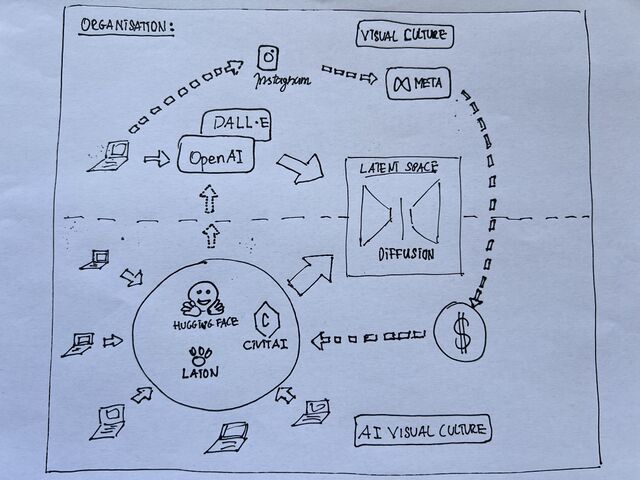

AI-generated images as well as other objects of pixel space and latent space (like the software, interfaces, datasets, or models involved in image generation) are not just products of a technical system, but also exist in a social realm, organised on platforms that are driven by the users themselves or by commercial companies.

In mainstream visual culture the organisation is structured as a relation between a user and a corporate service. For example, users use OpenAI's DALL-E to generate images, and may also share them afterwards on social media platforms like Meta's Instagram. In this case, the social organisation is more or less controlled by the corporations, who typically allow little interaction between their many users. For instance, DALL-E does not have a feature that allows one to build on or reuse the prompts of other users, or for users to share their experiences and insights with generative AI image creation. Social interaction between users only occurs when they share their images on platforms such as, say, Instagram. Rarely are users involved in the social, legal, technical or other conditions for making and sharing AI-generated images.

Conversely, on the platforms for generating and sharing images in more autonomous AI, users and communities are deeply involved in the conditions for AI image generation. LAION is a good example of this. It is run by a non-commercial organisation or 'team' of around 20 members, led by Christoph Schuhmann, but their many projects involve a wider community of AI specialists, professionals and researchers. They collaborate on principles of access and openness, and their attempt to 'democratise' AI stands in contrast to the policies of Big Tech AI corporations. In many ways, LAION resembles what the anthropologist Chris Kelty has labelled a '[https://www.dukeupress.edu/two-bits recursive public]' – a community that cares for and maintains the means of its own existence.

However, such openness is not to be taken for granted, as has also been [https://www.deeplearning.ai/the-batch/the-story-of-laion-the-dataset-behind-stable-diffusion/ noted] in debates around LAION. There are many platforms in the ecology of autonomous AI (see also [[CivitAI]] and [[Hugging Face]]) that easily become valuable resources. The datasets, models, communities, and expertise they offer may therefore also be subject to value extraction. [[Hugging Face]] is a prime example of this - a community hub as well as a $4.5 billion company with investments from Amazon, IBM, Google, Intel, and many more; as well as collaborations with Meta and Amazon Web Services. This indicates that in the organisation of autonomous AI there are dependencies not only on communities, but often also on corporate collaboration and venture capital.

[[File:Organisation.jpg|none|thumb|640x640px|The map reflects the organisation of autonomous AI. In conventional visual culture, users will access cloud-based services like OpenAI's DALL-E, and may also share their images on social media. In autonomous AI visual culture, the platforms are more democratic in the sense that models or datasets are freely available for training LoRAs or other development. Users also share their own models, datasets, images, and knowledge with each other on dedicated platforms, like CivitAI or Hugging Face. Many of the organisations from conventional visual culture (like Meta, who owns Instagram) also invest in the platforms of autonomous AI, and openness is not to be taken for granted (by Christian Ulrik Andersen, Nicolas Maleve, and Pablo Velasco).]]

==== A material plane (GPU infrastructure) ====

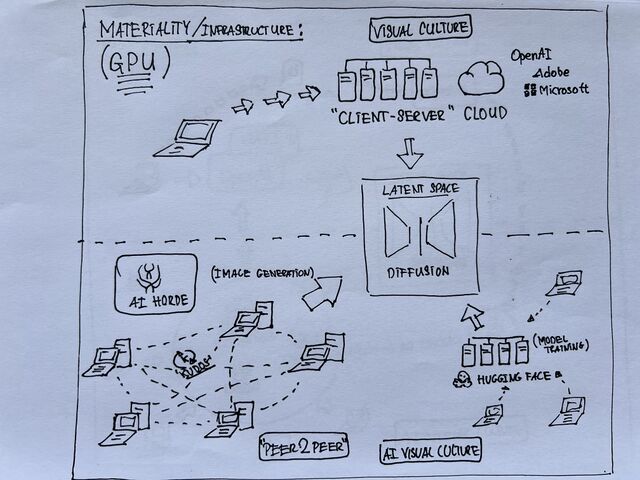

Just as the objects of autonomous AI depend on a social organisation (and also one of capital and labour), they also depend on a material infrastructure – and are, so to speak, always suspended between many different planes. First of all, they depend on hardware, and specifically on the [[GPU|GPUs]] that are needed to generate images as well as the models behind them. As with the social organisation of AI image generation, infrastructures too are organised differently.

The mainstream commercial services are set up as what one might call a 'client-server' relation. The users of DALL-E or a similar service access a main server (or a 'stack' of servers). Users have little control over the conditions for generating models and images (say, the way models are reused or their climate impact) as this happens elsewhere, in 'the cloud'.

Where autonomous AI distinguishes itself from mainstream AI is typically in the decentralised organisation of processing power. Firstly, people who generate images or develop LoRAs with Stable Diffusion can use their own GPU. Often a simple laptop will work, but individuals and communities involved with autonomous AI image creation will often have expensive GPUs with high processing capability (built for gaming). Secondly, there is a decentralised network that connects the community's GPUs. That is, using the so-called [[Stable Horde]] (or AI Horde), the community can directly access each other's GPUs in a peer-to-peer manner. Granting others access to one's GPU is rewarded with [[currencies]] that in turn can be used to skip the line when waiting to access other members' GPUs. This social organisation of a material infrastructure allows the community to generate images at almost the same speed as commercial services.
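
The idea of 'skipping the line' can be pictured as a priority queue in which requests from members with more currency are served first. The sketch below is a toy model only: it does not reproduce how Stable Horde (AI Horde) actually schedules work, and the class name, the method names and the user balances are invented for the illustration.

```python
import heapq
import itertools

class KudosQueue:
    """Toy waiting line: requests from users with more currency are served first;
    users with equal balances are served in order of arrival."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()

    def submit(self, user, kudos, prompt):
        # heapq is a min-heap, so the balance is negated to serve the highest first.
        heapq.heappush(self._heap, (-kudos, next(self._arrival), user, prompt))

    def next_job(self):
        """Return the (user, prompt) to process next, or None if the line is empty."""
        if not self._heap:
            return None
        _, _, user, prompt = heapq.heappop(self._heap)
        return user, prompt

# Hypothetical users and balances.
queue = KudosQueue()
queue.submit("alice", 0, "a pony in a meadow")
queue.submit("bob", 50, "a lamp post at night")
queue.submit("carol", 50, "an oak tree")
queue.submit("dave", 10, "a heron, naturalist style")

served = []
while (job := queue.next_job()) is not None:
    served.append(job[0])
print(served)   # ['bob', 'carol', 'dave', 'alice']
```

In the toy model, lending out one's GPU would raise one's balance and thereby one's place in the line, which is the reciprocal logic described above.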

To be dependent on the distribution of resources, rather than a centralised resource (e.g., a platform in 'the cloud'), points to how dependencies are often deliberately chosen in autonomous AI. One chooses to be dependent on a community because, for instance, one wants to reduce the consumption of hardware, because it is more cost-effective than one's own GPU, because one cannot afford the commercial services, or simply because one prefers this type of organisation of labour (separated from capital) that offers an alternative to Big Tech. That is, simply because one wants to be autonomous.

At this material plane, there are many other dependencies. For instance, energy consumption, the use of expensive minerals for producing hardware, or the exploitation of labour in the production of hardware.

[[File:Materiality gpu.jpg|alt=A photo of an illustration. On the one side a cloud client server use of resources (open ai, adobe, microsoft). On the other a peer-to-peer based (Stable Horde)|none|thumb|640x640px|The map reflects the material organisation, or infrastructure, of autonomous AI. This particularly concerns the use of GPUs and the processing power needed to generate images and train models and LoRAs. In conventional visual culture, users will access cloud-based services (like OpenAI's DALL-E, Adobe Firefly or Microsoft Image Creator) to generate images. In this client-server relation, users do not know where the service is (in the 'cloud'). In autonomous AI visual culture, users benefit from each other's GPUs in Stable/AI Horde's peer-to-peer network - exchanging GPU access for the currency 'Kudos'. Knowing the location of the GPU is central. Users also train models, for instance on Hugging Face. Here, the infrastructure more closely resembles that of a platform (by Christian Ulrik Andersen, Nicolas Maleve, and Pablo Velasco).]]

=== Mapping the many different planes and dependencies of generative AI ===

What is particular about the map of this catalogue of objects of interest and necessity is that it purely attempts to map autonomous and decentralised generative AI, serving as a map for a guided tour and experience of autonomous AI. However, both Hugging Face's dependency on venture capital and Stable Diffusion's dependency on hardware and infrastructure point to the fact that there are several planes that are not captured in the above map of this catalogue, but which are equally important. For instance, the [https://artificialintelligenceact.eu EU AI Act] or laws on copyright infringement, which Stable Diffusion (like any other AI ecology) will also depend on, point to a plane of governance and regulation. AI, including Stable Diffusion, also depends on the organisation of human labour and on the extraction of resources.

In describing the plane of objects of interest and necessity, we attempt to describe how Stable Diffusion and autonomous AI image generation depend on these different planes, but an overview of the many planes of AI and how it 'stacks' can of course also be the centre of a map in itself. One example of this is Kate Crawford's ''[https://katecrawford.net/atlas Atlas of AI]'', a book that displays different maps (and also images) that link AI to 'Earth' and the exploitation of energy and minerals, or to 'Labour' and the workers who do micro tasks ('clicking' tasks) or the workers in Amazon's warehouses. Crawford's book also contains chapters on 'Data', 'Classification', 'Affect', 'State' and 'Power'.

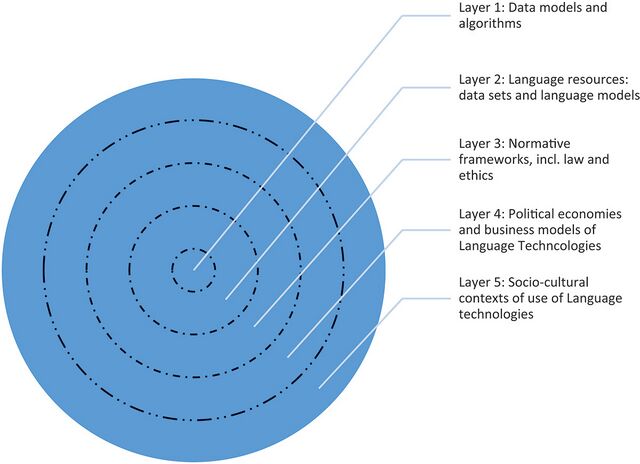

[https://doi.org/10.1080/15710882.2024.2341799 Gertraud Koch] has also drawn a map of all the different layers that she connects to "technological activity", and which would also pertain to AI. On top of a layer of technology (the 'data models and algorithms') one finds other layers that are interdependent, and which contribute to the political and technological qualities of AI. As such, the map is also meant for navigation – to identify starting points for rethinking its concepts or reimagining alternative futures (in their work, particularly in relation to a potential delinking from a colonial past, and reimagining a pluriversality of technology).

[[File:Gertraud Koch layers activity.jpg|none|thumb|640x640px|Five sets of technological activities. The layers wrap around technology as a material entity with its own agency, but the layers are permeable and interdependent. Map by Gertraud Koch (2024).]]

Within the many planes and stacks of AI one can find many different maps that build other types of overviews and conceptual models of AI – perhaps pointing to how maps themselves take part in making AI a reality.

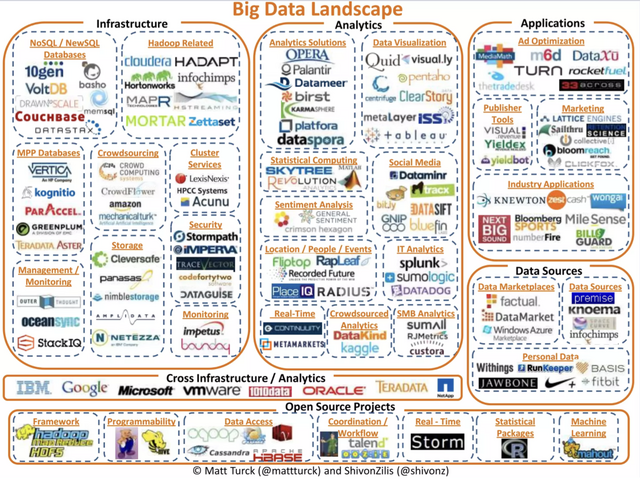

==== The corporate landscape ====

The entrepreneur, investor and podcast host Matt Turck has made the “ultimate annual market map of the data/AI industry”. Since 2012 he has [https://firstmark.com/team/matt-turck/ documented the corporate landscape of AI] not just to identify key corporate actors, but also to trace trends in business. As Turck also [https://mattturck.com/MAD2024/ notes] in his blog, the first map from 2012 had merely 139 logos, whereas the 2024 version has 2,011 logos. This reflects the massive investment in AI entrepreneurship, following first 'big data' and now 'generative AI' (and machine learning) - how AI has become a business reality. Comparing the 2012 version with [https://mad.firstmark.com the most recent map from 2024], one can see how, in the corporate landscape of AI, the division of companies dealing with infrastructure, data analytics, applications, data sources, and open source AI becomes more fine-grained over the years, forking out into, for instance, applications in health, finance and agriculture; or how privacy and security become of increased concern in the business of infrastructures – clearly, AI reconfigures and intersects with many other realities. [[File:Big Data Landscape 2012.png|none|thumb|640x640px|The corporate landscape of Big Data in 2012, by Matt Turck and Shivon Zilis.]]

==== Critical cartography in the mapping of AI ====

In mapping AI there are also [https://www.google.com/url?sa=t&source=web&rct=j&opi=89978449&url=https://acme-journal.org/index.php/acme/article/download/723/585/2396&ved=2ahUKEwja7NnqpKCOAxXDi8MKHZpyLvYQFnoECBMQAQ&usg=AOvVaw1TvCP2mYCcxObcv_55dFBs 'counter maps' or 'critical cartography'.] Conventional world maps are built on set principles of, for instance, North facing up, and Europe at the centre. The map is therefore not just a map for navigation, but also a map of more abstract imaginaries and histories originating in colonial times, when maps took Europe as their point of departure and were an intrinsic part of the conquest of territories. In this sense, a map always also reflects hierarchies of power and control that can be inverted or exposed (for instance by turning the map upside down, letting the south be a point of departure). Counter-mapping technological territories would, following this logic, involve what the French research and design group Bureau d'Études has called "[https://bureaudetudes.org maps of contemporary political, social and economic systems that allow people to inform, reposition and empower themselves.]" They are maps that reveal underlying structures of social, political or economic dependencies to expose what ought to be of common interest, or the hidden grounds on which a commons rests. [https://files.libcom.org/files/Anti-Oedipus.pdf Félix Guattari and Gilles Deleuze's notion of 'deterritorialization'] can be useful here, as a way to conceptualise the practices that expose and mutate the social, material, financial, political, or other organisation of relations and dependencies. The aim is ultimately not only to destroy this 'territory' of relations and dependencies, but to achieve a 'reterritorialization' – a reconfiguration of the relations and dependencies.

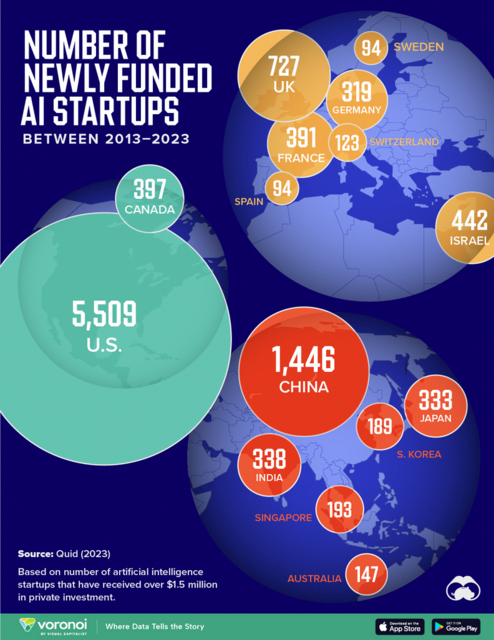

Utilising the opportunities of infographics in mapping can be powerful. At the plane of financial dependencies, one can map, as Matt Turck does, the corporate landscape of AI, but one can also draw a different map that reveals how the territory of 'startups' does not compare to a geographical map of land and continents. Strikingly, the United States is double the size of Europe and Asia, whereas there are whole countries and continents that are missing (such as Russia and Africa). This map thereby not only reflects the number of startups, but also how venture capital is dependent on other planes, such as politics and the organisation of capital, or infrastructural gaps. In Africa, for instance, [https://stias.ac.za/2024/04/from-digital-divide-to-ai-divide-fellows-seminar-by-jean-louis-fendji/ the AI divide is very much also a 'digital divide',] as argued by AI researcher Jean-Louis Fendji.

[[File:Numbers of newly funded AI startups.png|none|thumb|640x640px|Numbers of newly funded AI startups per country (Image by Visual Capitalist, https://www.visualcapitalist.com/mapped-the-number-of-ai-startups-by-country/)]]

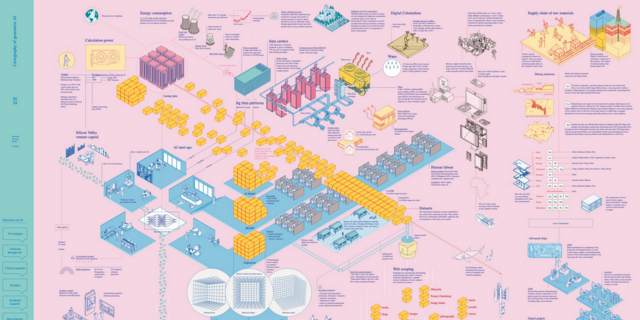

Counter-mapping the organisation of relations and dependencies is also prevalent in the works of the Barcelona-based artist collective Estampa, which exposes how generative AI depends on different planes: venture capital, energy consumption, a supply chain of minerals, human labour, as well as other infrastructures, such as the internet, which is 'scraped' for images or other media (using e.g. software like [[Clip]]). [[File:Taller Estampa, map of generative AI.png|none|thumb|640x640px|Taller Estampa, map of generative AI, 2024]]

==== Epistemic mapping of AI ====

Maps of AI often also address how AI functions as what [https://www.politybooks.com/bookdetail?book_slug=problem-spaces-how-and-why-methodology-matters--9781509507931 Celia Lury] has called an 'epistemic infrastructure'. That is, AI is an apparatus that builds on knowledge and creates knowledge, but also shapes what knowledge is and what we consider to be knowledge. To Lury, the question of 'methods' here becomes central - not as a neutral, 'objective' stance, as one typically regards good methodology in science, but as a cultural and social practice that helps articulate the questions we ask and what we consider to be a problem in the first place. When one, for instance, criticises the social, racial or other biases in generative AI (such as all doctors being white males in generative AI image creation), one is not just dealing with bias in the dataset that can be fixed with '[[negative prompts]]' or other technical means. Rather, AI is fundamentally – in its very construction and infrastructure – based in a Eurocentric history of modernity and knowledge production. For instance, as pointed out by [https://journals.sagepub.com/doi/abs/10.1080/03080188.2020.1840225 Rachel Adams], AI belongs to a genealogy of intelligence, and one also ought to ask whose intelligence and understanding of knowledge is modelled within the technology – and whose is left out.

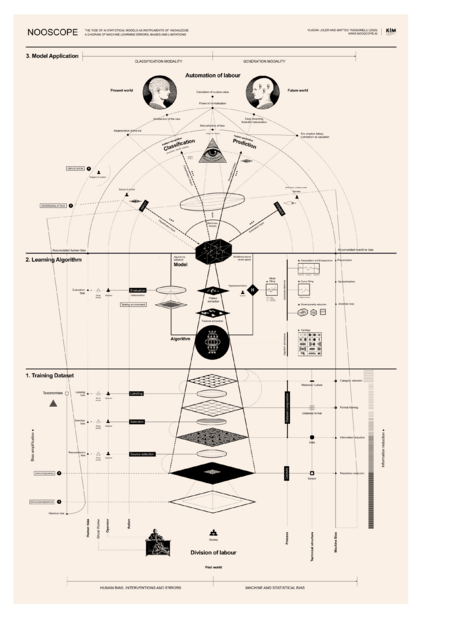

There are several attempts to map this territory in the plane of knowledge production, and its many social, material, political or other relations and dependencies. Sharing many of the concerns of Lury and Adams, Vladan Joler and Matteo Pasquinelli's '[https://fritz.ai/nooscope/ Nooscope]' is a good example of this. In their understanding, AI belongs to a much longer history of knowledge instruments ('nooscopes', from the Greek ''skopein'' ‘to examine, look’ and ''noos'' ‘knowledge’) that would also include optical instruments, but which in AI take the form of a knowledge magnification of patterns and statistical correlations in data. The nooscope map is an abstraction of how AI functions as an "Instrument of Knowledge Extractivism". It is therefore not a map of 'intelligence' and logical reasoning, but rather of a "regime of visibility and intelligibility" whose aim is the automation of labour, and of how this aim rests on (as other capitalist extractions of value in modernity) a division of labour – between humans and technology, between for instance historical biases in the selection and labelling of data, and their formalisation in sensors, databases and metadata. The map also refers to how selection, labelling and other laborious tasks in the training of models are done by "ghost workers", thereby referring to a broader geo-politics and body-politics of AI where human labour is often done by subjects of the Global South (although they might oppose being referred to as 'ghosts').

[[File:Nooscope.png|none|thumb|640x640px|alt=A map of AI as an instrument of knowledge by Vladan Joler and Matteo Pasquinelli (2020)|A map of AI as an instrument of knowledge by Vladan Joler and Matteo Pasquinelli (2020)]]

[[Category:Objects of Interest and Necessity]]

++++++++++++++++++++++++++++++++++++++++++++++++++++++

== [CARD TEXT – possibly needs shortening] ==

== A Map of 'Objects of Interest and Necessity' ==

There is little knowledge of what AI really looks like. This might explain the abundance of maps that, each in their own way, 'map' AI. Like any of these, a map of 'Objects of Interest and Necessity' is not to be confused with the actual thing - 'the map is not the territory', as the saying goes. The map presented here is an attempt to abstract the different objects that one may come across when entering the world of autonomous and decentralised AI image creation (and in particular Stable Diffusion). It can serve as a useful guide to experience what the objects of this world are called, how they connect to each other, to communities or to underlying infrastructures – perhaps also as a starting point for one's own exploration.

There is a fundamental distinction between three territories.

Firstly, there is 'pixel space'. In this space one encounters, for instance, all the images that are generated by AI (using 'prompts') in one of the many user 'interfaces' to Stable Diffusion, but also the many other images of visual culture that serve as the outset (or, 'datasets') for generating AI models. Images are 'scraped' from the Internet or different repositories using software (such as 'Clip') that can automatically capture images and generate categories and descriptions of the images.

Secondly, there is 'latent space'. Image latency refers to the invisible space in between the capture of images in datasets and the generation of new images. It is an algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to de-code them back into images (aka 'image diffusion').

In autonomous and decentralised AI image generation, there is also a third space of objects that are usually not seen. As an intrinsic part of a visual culture, communities, for instance, create so-called 'LoRAs' – a 're-modelling' of latent space in order to meet specific visual requirements. Both image creations and LoRAs are usually shared on designated platforms (such as 'CivitAI'). There are many other objects in this space, too, such as 'Stable Horde', which is used to create a distributed network where users can use each other's hardware ('GPUs') in exchange for 'virtual currencies' in order to speed up the process of generating images.

The many objects that one would encounter in one or the other of the three 'territories' connect to many different planes. They deeply depend on (and sometimes also reconfigure) material infrastructures, capital and value, the automation and extraction of labour, the organisation of knowledge, politics, regulation, and much more.

==== [Images: 'Our' map(s) - perhaps surround by other maps] ====

++++

OLD MAPS

[[File:Map objects and planes.jpg|none|thumb|A map of how objects are suspended between different planes - not only a technical one, but also an organisational one, and a material one. It reflects the separation of pixel space from latent space. Underneath one finds a second plane of material infrastructures (such as GPU processing power and electricity), and one can potentially also add more planes, such as for instance regulation or governance of AI (by Christian Ulrik Andersen, Nicolas Maleve, and Pablo Velasco) // NEEDS REDRAWING]]

[[File:Map pony image process.jpg|none|thumb|Map of generating a pony image]]

[[Category:Objects of Interest and Necessity]]

Latest revision as of 11:31, 9 August 2025

Mapping 'objects of interest and necessity'

If one considers generative AI as an object, there is also a world of ‘para objects’, surrounding AI and shaping its reception and interpretation in the form of maps or diagrams of AI. They are drawn by both amateurs and professionals who need to represent processes that are otherwise sealed off in technical systems, but more generally reflect a need for abstraction – a need for conceptual models of how generative AI functions. However, as Alfred Korzybski famously put it, one should not confuse the map with the territory: the map is not how reality is, but a representation of reality.

Following on from this, mapping the objects of interest in autonomous AI image creation is not to be understood as a map of what it 'really is'. Rather, it is a map of encounters of objects; encounters that can be documented and catalogued, but also positioned in a spatial dimension – representing a 'guided tour', and an experience of what objects are called, how they look, how they connect to other objects, communities or underlying infrastructures (see also Objects of interest and necessity). Perhaps the map can even be used by others to navigate autonomous generative AI and create their own experiences? Importantly, what is particular about the map of this catalogue of objects of interest and necessity is that it is an attempt to map autonomous generative AI. In other words, it does not map what is otherwise concealed in, say, OpenAI's DALL-E or Adobe Firefly. In fact, we know very little of how to navigate such proprietary systems, and one might speculate whether there even exists a complete map of their relations and dependencies.

Perhaps because of this lack of overview and insight, maps and cartographies are not just shaping the reception and interpretation of generative AI, but can also be regarded as objects of interest and necessity in themselves – intrinsic parts of AI’s existence. Generative AI depends on an abundance of cartography to model, shape, navigate, and also negotiate and criticise its being in the world. There seems to be an inbuilt cultural need to 'map the territory', and the collection of cartographies and maps is therefore also what makes AI a reality – making AI real by externalising its abstraction onto a map, so to speak.

A map of 'objects of interest and necessity' (autonomous AI image generation)

To enter the objects of autonomous AI image generation, a map that separates the territories of ‘pixel space’ from ‘latent space’ can be useful as a starting point – that is, a map that separates the objects you see from those that cannot be seen because they exist in a more abstract, computational space.

Latent space

Latent space consists of computational models and is a highly abstract space where it can be difficult to explain the behaviour of the different computational models. Very briefly put, they encode images into a compressed representation (using a Variational Autoencoder, VAE), add noise to them, and then learn how to de-code them back into images, a process also known as diffusion.
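The 'noising' half of this process can be illustrated with a minimal sketch. It assumes the commonly used formulation in which a signal is blended with Gaussian noise according to a single factor (here called `alpha_bar`, a name chosen for illustration). It works on a short list of numbers rather than on a real image or a VAE latent, and it leaves out the learned part entirely, namely the model that is trained to reverse the noise.

```python
import math
import random


def add_noise(values, alpha_bar, rng):
    """Blend a signal with Gaussian noise.

    alpha_bar = 1.0 keeps the signal untouched,
    alpha_bar = 0.0 replaces it with pure noise.
    """
    keep = math.sqrt(alpha_bar)           # how much of the signal survives
    spread = math.sqrt(1.0 - alpha_bar)   # how much noise is mixed in
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in values]


# A toy 'image' of four values (an assumption for illustration only).
image = [0.0, 0.5, 1.0, 0.5]
rng = random.Random(42)

# The signal gradually disappears as alpha_bar goes from 1.0 to 0.0.
for alpha_bar in (1.0, 0.5, 0.0):
    noisy = add_noise(image, alpha_bar, rng)
    print(alpha_bar, [round(v, 2) for v in noisy])
```

A diffusion model is trained on many such noisy versions and learns to estimate the noise that was added, which is what allows it to turn noise back into an image step by step.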

In the process of model training, datasets are central. Many of the datasets that are used to train models are made by 'scraping' the internet. Common Crawl, as an example, is a non-profit organisation that has built a repository of 250 billion web pages. Open Images and ImageNet are also commonly used as the backbone of visually training generative AI.

Contrary to common belief, there is not just one dataset used to make a model work, but multiple models and datasets to, for instance, reconstruct missing facial or other bodily details (such as too many fingers on one hand), 'upscale' images of low resolution or 'refine' the details in the image. Importantly, when it comes to autonomous AI image creation, there is typically an organisation and a community behind each dataset and training. LAION (Large-scale Artificial Intelligence Open Network) is a good example of this. It too is a non-profit community organisation that develops and offers free models and datasets. Stable Diffusion was trained on datasets created by LAION.

For a model to work, it needs to be able to understand the semantic relationship between text and image. Say, how a tree is different from a lamp post, or a photo is different from an 18th-century naturalist painting. The software CLIP (by OpenAI) is widely used for this, and also by LAION. CLIP is capable of predicting which images can be paired with which text in a dataset. The annotation of images is central here. That is, for the dataset to be useful there need to be descriptions of what is in the images, what style they are in, their aesthetic qualities, and so on. Whereas ImageNet, for instance, crowdsources the annotation process, LAION uses Common Crawl to find html with <img> tags, and then uses the Alt Text to annotate the images (Alt Text is a descriptive text that acts as a substitute for visual items on a page, and is sometimes included in the image data to increase accessibility). This is a highly cost-effective solution, which has enabled its community to produce and make publicly available a range of datasets and models that can be used in generative AI image creation.
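The principle of using Alt Text as annotation can be sketched in a few lines. This is not LAION's actual pipeline, which works on Common Crawl data at a very different scale and applies further filtering; the class name, function name and sample page below are assumptions made for illustration. The sketch only shows how image references and their descriptions can be paired from html using Python's standard library.

```python
from html.parser import HTMLParser


class AltTextCollector(HTMLParser):
    """Collect (image source, alt text) pairs from <img> tags."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        alt = (attributes.get("alt") or "").strip()
        # Keep only images that carry a non-empty description.
        if src and alt:
            self.pairs.append((src, alt))


def extract_pairs(html):
    """Return all (src, alt) pairs found in an html string."""
    collector = AltTextCollector()
    collector.feed(html)
    return collector.pairs


# A hypothetical page: one image has no usable description.
sample_html = """
<p>A walk in the park</p>
<img src="tree.jpg" alt="An old oak tree in autumn">
<img src="spacer.gif" alt="">
<img src="lamp.jpg" alt="A lamp post at night">
"""

for src, alt in extract_pairs(sample_html):
    print(src, "->", alt)
```

Images without a description are dropped, which hints at why the quality of such a dataset depends on how carefully web authors have written their Alt Text.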

Pixel space

In pixel space, you find a range of visible objects that a typical user would normally meet. This includes the interfaces for creating images. In conventional interfaces like DALL-E or Bing Image Creator, users prompt in order to generate images. What is particular for autonomous and decentralised AI image generation is that the interfaces have many more parameters and ways to interact with the models that generate the images. They function more like 'expert' interfaces.

In pixel space one finds many objects of visual culture. Apart from the interface itself, this includes both all the images generated by AI, and all the images used to train the models behind. These images are, as described above, used to create datasets, compiled by crawling the internet and scraping images that all belong to different visual cultures – ranging, e.g., from museum collections of paintings to criminal records with mug shots.

Many users also have specific aesthetic requirements for the images they want to generate. Say, to generate images in a particular manga style or setting. The expert interfaces therefore also contain the possibility to combine different models and even to post-train one's own models, also known as a LoRA (Low-Rank Adaptation). When sharing the images on platforms like Danbooru (one of the first and largest image boards for manga and anime) images are typically well categorised – both descriptively ('tight boots', 'open mouth', 'red earrings', etc.) and according to visual cultural style ('genshin impact', 'honkai', 'kancolle', etc.). Therefore they can also be used to train more models.

A useful annotated and categorised dataset - be it for a foundation model or a LoRA – typically involves specialised knowledge of both the technical requirements of model training (latent space) and the aesthetics and cultural values of visual culture itself. This includes knowledge of common visual conventions, such as realism, beauty and horror, and also (in the making of LoRAs) of more specialised conventions – say, a visual style that an artist wants to generate (see e.g. the generated images of Danish Hiphop by Kristoffer Ørum).
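Why a LoRA is small enough to be trained and shared by individuals can be shown with a minimal sketch of the low-rank idea: the large weight matrix of the original model stays untouched, and only two much smaller matrices are trained and added on top. The matrix sizes, the rank and all function names below are assumptions chosen for illustration; real tools operate on many such matrices at once.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def lora_update(w, b, a, scale=1.0):
    """Return W + scale * (B @ A) without modifying W itself."""
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def parameter_counts(d_out, d_in, rank):
    """Compare the size of a full matrix with its low-rank adaptation."""
    full = d_out * d_in
    low_rank = rank * (d_out + d_in)
    return full, low_rank


random.seed(0)
d_out, d_in, rank = 4, 6, 2
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# B starts at zero, so an untrained LoRA leaves the model unchanged.
b = [[0.0] * rank for _ in range(d_out)]
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]

adapted = lora_update(w, b, a)
print("unchanged before training:", adapted == w)
print("full vs low-rank parameters:", parameter_counts(768, 768, 8))
```

Only the two small matrices need to be stored and shared, which is one reason why LoRAs circulate so easily on community platforms.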

An organisational plane

AI generated images as well as other objects of pixel space and latent space (like software, interfaces, datasets, or models involved in image generation) are not just products of a technical system, but also exist in a social realm, organised on platforms that are driven by the users themselves or commercial companies.

In mainstream visual culture the organisation is structured as a relation between a user and a corporate service. For example, users use OpenAI's DALL-E to generate images, and may also share them afterwards on social media platforms like Meta's Instagram. In this case, the social organisation is more or less controlled by the corporations, who typically allow little interaction between their many users. For instance, DALL-E does not have a feature that allows one to build on or reuse the prompts of other users, or that allows users to share their experiences and insights with generative AI image creation. Social interaction between users only occurs when they share their images on platforms such as, say, Instagram. Rarely are users involved in the social, legal, technical or other conditions for making and sharing AI generated images.

Conversely, on the platforms for generating and sharing images in more autonomous AI, users and communities are deeply involved in the conditions for AI image generation. LAION is a good example of this. It is run by a non-commercial organisation or 'team' of around 20 members, led by Christoph Schuhmann, but their many projects involve a wider community of AI specialists, professionals and researchers. They collaborate on principles of access and openness, and their attempt to 'democratise' AI stands in contrast to the policies of Big Tech AI corporations. In many ways, LAION resembles what the anthropologist Chris Kelty has labelled a 'recursive public' – a community that cares for and self-maintains the means of its own existence.

However, such openness is not to be taken for granted, as also noted in debates around LAION. There are many platforms in the ecology of autonomous AI (see also CivitAI and Hugging Face) that easily become valuable resources. The datasets, models, communities, and expertise they offer may therefore also be subject to value extraction. Hugging Face is a prime example of this - a community hub as well as a $4.5 billion company with investments from Amazon, IBM, Google, Intel, and many more, as well as collaborations with Meta and Amazon Web Services. This indicates that in the organisation of autonomous AI there are dependencies not only on communities, but often also on corporate collaboration and venture capital.

A material plane (GPU infrastructure)

Just like the objects of autonomous AI depend on a social organisation (and also one of capital and labour), they also depend on a material infrastructure – and are, so to speak, always suspended between many different planes. First of all, they depend on hardware, and specifically on the GPUs that are needed to generate images as well as the models behind them. As in the social organisation of AI image generation, infrastructures too are organised differently.

The mainstream commercial services are set up as what one might call a 'client-server' relation. The users of DALL-E or a similar service access a main server (or a 'stack' of servers). Users have little control of the conditions for generating models and images (say, the way models are reused or their climate impact) as this happens elsewhere, in 'the cloud'.

Where autonomous AI distinguishes itself from mainstream AI is typically in the decentralised organisation of processing power. Firstly, people who generate images or develop LoRAs with Stable Diffusion can use their own GPU. Often a simple laptop will work, but individuals and communities involved with autonomous AI image creation will often have expensive GPUs with high processing capability (built for gaming). Secondly, there is a decentralised network that connects the community's GPUs. That is, using the so-called Stable Horde (or AI Horde), the community can directly access each other's GPUs in a peer-to-peer manner. Granting others access to one's GPU is rewarded with currencies that in turn can be used to skip the line when waiting to access other members' GPUs. This social organisation of a material infrastructure allows the community to generate images with almost the same speed as commercial services.
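The logic of being rewarded for sharing one's GPU and thereby 'skipping the line' can be sketched as a simple priority queue. This is a hypothetical model and not the actual implementation or API of Stable Horde; the class, its methods and the credit amounts are assumptions made for illustration.

```python
import heapq
import itertools


class SharedGpuQueue:
    """Toy queue in which earned credits move a request forward."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker: first come, first served
        self.credits = {}

    def reward(self, user, amount):
        """Credit a user for lending their GPU to others."""
        self.credits[user] = self.credits.get(user, 0) + amount

    def submit(self, user, prompt):
        """Queue a request; priority is fixed at submission time."""
        # heapq is a min-heap, so credits are negated to serve
        # the user with the most credits first.
        entry = (-self.credits.get(user, 0), next(self._order), user, prompt)
        heapq.heappush(self._heap, entry)

    def next_job(self):
        """Return the (user, prompt) pair that is served next."""
        _, _, user, prompt = heapq.heappop(self._heap)
        return user, prompt


queue = SharedGpuQueue()
queue.reward("bo", 50)  # bo has lent out GPU time earlier

queue.submit("ada", "a pony in a meadow")
queue.submit("bo", "a castle at dusk")
queue.submit("cy", "a forest in fog")

served = [queue.next_job() for _ in range(3)]
print([user for user, _ in served])
```

In this sketch a member who has contributed hardware is served before those who have not, while everyone else keeps their order of arrival.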

To be dependent on the distribution of resources, rather than a centralised resource (e.g., a platform in 'the cloud'), points to how dependencies are often deliberately chosen in autonomous AI. One chooses to be dependent on a community because, for instance, one wants to reduce the consumption of hardware, because it is more cost-effective than one's own GPU, because one cannot afford the commercial services, or simply because one prefers this type of organisation of labour (separated from capital) that offers an alternative to Big Tech. That is, simply because one wants to be autonomous.

At this material plane, there are many other dependencies. For instance, energy consumption, the use of expensive minerals for producing hardware, or the exploitation of labour in the production of hardware.

Mapping the many different planes and dependencies of generative AI

What is particular about the map of this catalogue of objects of interest and necessity is that it purely attempts to map autonomous and decentralised generative AI, serving as a map for a guided tour and experience of autonomous AI. However, both Hugging Face's dependency on venture capital and Stable Diffusion's dependency on hardware and infrastructure point to the fact that there are several planes that are not captured in the above map of this catalogue, but which are equally important. For instance, the EU AI Act or laws on copyright infringement, which Stable Diffusion (like any other AI ecology) will also depend on, point to a plane of governance and regulation. AI, including Stable Diffusion, also connects to and depends on the organisation of human labour, or the extraction of resources.

In describing the plane of objects of interest and necessity, we attempt to describe how Stable Diffusion and autonomous AI image generation build on dependencies on these different planes, but an overview of the many planes of AI and how it 'stacks' can of course also be the centre of a map in itself. One example of this is Kate Crawford's Atlas of AI, a book that displays different maps (and also images) that link AI to 'Earth' and the exploitation of energy and minerals, or 'Labour' and the workers who do micro tasks ('clicking' tasks) or the workers in Amazon's warehouses. In continuation, Crawford's book also contains chapters on 'Data', 'Classification', 'Affect', 'State' and 'Power'.

Gertraud Koch has also drawn a map of all the different layers that she connects to "technological activity", and which would also pertain to AI. On top of a layer of technology (the 'data models and algorithms') one will find other layers that are interdependent, and which contribute to the political and technological qualities of AI. As such, the map is also meant for navigation – to identify starting points for rethinking its concepts or reimagining alternative futures (in their work, particularly in relation to a potential delinking from a colonial past, and reimagining a pluriversality of technology).

Within the many planes and stacks of AI one can find many different maps that build other types of overviews and conceptual models of AI – perhaps pointing to how maps themselves take part in making AI a reality.

The corporate landscape

The entrepreneur, investor and podcast host Matt Turck has made the “ultimate annual market map of the data/AI industry”. Since 2012 he has documented the corporate landscape of AI, not just to identify key corporate actors, but also to follow developing trends in business. As Matt Turck also notes in his blog, the first map from 2012 had merely 139 logos, whereas the 2024 version has 2,011 logos. This reflects the massive investment in AI entrepreneurship, following first 'big data' and now 'generative AI' (and machine learning) - how AI has become a business reality. Comparing the 2012 version with the most recent map from 2024, one can see how the division of companies dealing with infrastructure, data analytics, applications, data sources, and open source AI becomes more fine-grained over the years, forking out into, for instance, applications in health, finance and agriculture; or how privacy and security become of increased concern in the business of infrastructures – clearly, AI reconfigures and intersects with many other realities.

Critical cartography in the mapping of AI

In mapping AI there are also 'counter maps' or 'critical cartography'. Conventional world maps are built on set principles of, for instance, North facing up, and Europe at the centre. The map is therefore not just a map for navigation, but also a map of more abstract imaginaries and histories originating in colonial times, where Europe was the outset of maps, and maps were an intrinsic part of the conquest of territories. In this sense, a map always also reflects hierarchies of power and control that can be inverted or exposed (for instance by turning the map upside down, letting the south be a point of departure). Counter-mapping technological territories would, following this logic, involve what the French research and design group Bureau d'Études has called "maps of contemporary political, social and economic systems that allow people to inform, reposition and empower themselves." They are maps that reveal underlying structures of social, political or economic dependencies to expose what ought to be of common interest, or the hidden grounds on which a commons rests. Félix Guattari and Gilles Deleuze's notion of 'deterritorialization' can be useful here, as a way to conceptualise the practices that expose and mutate the social, material, financial, political, or other organisation of relations and dependencies. The aim is not only to destroy this 'territory' of relations and dependencies, but ultimately a 'reterritorialization' – a reconfiguration of the relations and dependencies.

Utilising the opportunities of info-graphics in mapping can be a powerful tool. At the plane of financial dependencies, one can map, as Matt Turck does, the corporate landscape of AI, but one can also draw a different map that reveals how the territory of 'startups' does not compare to a geographical map of land and continents. Strikingly, the United States is double the size of Europe and Asia, whereas there are whole countries and continents that are missing (such as Russia and Africa). This map thereby not only reflects the number of startups, but also how venture capital is dependent on other planes, such as politics and the organisation of capital, or infrastructural gaps. In Africa, for instance, the AI divide is very much also a 'digital divide', as argued by AI researcher Jean-Louis Fendji.

Counter-mapping the organisation of relations and dependencies is also prevalent in the works of the Barcelona-based artist collective Estampa, which exposes how generative AI depends on different planes: venture capital, energy consumption, a supply chain of minerals, human labour, as well as other infrastructures, such as the internet, which is 'scraped' for images or other media (using e.g. software like CLIP).

Epistemic mapping of AI

Maps of AI often also address how AI functions as what Celia Lury has called an 'epistemic infrastructure'. That is, AI is an apparatus that builds on knowledge, creates knowledge, but also shapes what knowledge is and what we consider to be knowledge. To Lury, the question of 'methods' here becomes central - not as a neutral, 'objective' stance, as one typically regards good methodology in science, but as a cultural and social practice that helps articulate the questions we ask and what we consider to be a problem in the first place. When one, for instance, criticises the social, racial or other biases in generative AI (such as all doctors being white males in generative AI image creation), one is not just dealing with bias in the dataset that can be fixed with 'negative prompts' or other technical means. Rather, AI is fundamentally – in its very construction and infrastructure – based in a Eurocentric history of modernity and knowledge production. For instance, as pointed out by Rachel Adams, AI belongs to a genealogy of intelligence, and one also ought to ask whose intelligence and understanding of knowledge is modelled within the technology – and whose is left out?

There are several attempts to map this territory in the plane of knowledge production, and its many social, material, political or other relations and dependencies. Sharing many of the concerns of Lury and Adams, Vladan Joler and Matteo Pasquinelli's 'Nooscope' is a good example of this. In their understanding, AI belongs to a much longer history of knowledge instruments ('nooscopes', from the Greek skopein ‘to examine, look’ and noos ‘knowledge’) that would also include optical instruments, but which in AI is a form of knowledge magnification of patterns and statistical correlations in data. The nooscope map is an abstraction of how AI functions as an "Instrument of Knowledge Extractivism". It is therefore not a map of 'intelligence' and logical reasoning, but rather of a "regime of visibility and intelligibility" whose aim is the automation of labour, and of how this aim rests on (as other capitalist extractions of value in modernity) a division of labour – between humans and technology, between for instance historical biases in the selection and labelling of data, and their formalisation in sensors, databases and metadata. The map also refers to how selection, labelling and other laborious tasks in the training of models are done by "ghost workers", thereby referring to a broader geo-politics and body-politics of AI where human labour is often done by subjects of the Global South (although they might oppose being referred to as 'ghosts').

++++++++++++++++++++++++++++++++++++++++++++++++++++++

[CARD TEXT – possibly needs shortening]

A Map of 'Objects of Interest and Necessity'

There is little knowledge of what AI really looks like. This might explain the abundance of maps that each in their own way 'map' AI. Like any of these, a map of 'Objects of Interest and Necessity' is not to be confused with the actual thing - 'the map is not the territory', as the saying goes. The map presented here is an attempt to abstract the different objects that one may come across when entering the world of autonomous and decentralised AI image creation (and in particular Stable Diffusion). It can serve as a useful guide to experience what the objects of this world are called, how they connect to each other, to communities or underlying infrastructures – perhaps also as an outset for one's own exploration.

There is a fundamental distinction between three territories.

Firstly, there is 'pixel space'. In this space one encounters, for instance, all the images that are generated by AI (through 'prompts') in one of the many user 'interfaces' to Stable Diffusion, but also the many other images of visual culture that serve as the outset (or, 'datasets') for generating AI models. Images are 'scraped' from the Internet or different repositories using software (such as 'Clip') that can automatically capture images and generate categories and descriptions of the images.

Secondly, there is a 'latent space'. Image latency refers to the invisible space in between the capture of images in datasets and the generation of new images. It is an algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to de-code them back into images (aka 'image diffusion').

In autonomous and decentralised AI image generation, there is also a third space of objects that are usually not seen. As an intrinsic part of visual culture, communities create, for instance, so-called 'LoRAs' – a 're-modelling' of latent space in order to meet specific visual requirements. Both generated images and LoRAs are usually shared on designated platforms (such as 'CivitAI'). There are many other objects in this space, too, such as 'Stable Horde', which is used to create a distributed network where users can use each other's hardware ('GPUs') in exchange for 'virtual currencies' in order to speed up the process of generating images.

The many objects that one would encounter in one or the other of the three 'territories' connect to many different planes. They deeply depend on (and sometimes also reconfigure) material infrastructures, capital and value, the automation and extraction of labour, the organisation of knowledge, politics, regulation, and much more.

[Images: 'Our' map(s) - perhaps surrounded by other maps]

++++

OLD MAPS