Latent space: Difference between revisions

| (3 intermediate revisions by 2 users not shown) | |||

| Line 4: | Line 4: | ||

It is useful to think about that space as a map. As Estelle Blaschke, Max Bonhomme, Christian Joschke and Antonio Somaini explain: | It is useful to think about that space as a map. As Estelle Blaschke, Max Bonhomme, Christian Joschke and Antonio Somaini explain: | ||

A latent space consists of vectors (series of numbers, arranged in a precise order) that represent data points in a multidimensional space with hundreds or even thousands of dimensions. Each vector, with n number of dimensions, represents a specific data point, with n number of coordinates. These coordinates capture some of the characteristics of the digital object encoded and represented in the latent space, determining its position relative to other digital objects: for example, the position of a word in relation to other words in a given language, or the relationship of an image to other images or to texts.[2] | A latent space consists of vectors (series of numbers, arranged in a precise order) that represent data points in a multidimensional space with hundreds or even thousands of dimensions. Each vector, with n number of dimensions, represents a specific data point, with n number of coordinates. These coordinates capture some of the characteristics of the digital object encoded and represented in the latent space, determining its position relative to other digital objects: for example, the position of a word in relation to other words in a given language, or the relationship of an image to other images or to texts.[2] | ||

The relation between datasets and latent space is a complex one. A latent space is a translation of a given training set. Therefore, in the process of model training, [[Dataset|datasets]] are central and various factors such as | The relation between datasets and latent space is a complex one. A latent space is a translation of a given training set. Therefore, in the process of model training, [[Dataset|datasets]] are central and various factors such as the curation method or scale have a great impact on the regularities that can be learned and represented into vectors. But a latent space is not a dataset's literal copy. It is a statistical interpretation. A latent space gives a model its own identity. As [https://www.youtube.com/@CutsceneArtist WetCircuit] (AKA Cutscene Artist), a prominent user and author of tutorials of the Draw Things app puts it, a model is not "bottomless." [3] This is due to the fact that the model's latent space is finite and therefore biased. This is an important argument for the defense of a decentralized AI ecosystem that ensures a diverse range of worldviews. | ||

The multiplication of models (and therefore their latent spaces) produces various kinds of relations between them. In current generation pipelines, | The multiplication of models (and therefore their latent spaces) produces various kinds of relations between them. In current generation pipelines, images are rarely the pure products of one latent space. In fact, there are different components intervening in image generation. Small models such as [[LoRA|LoRAs]] add capabilities to the model. The software CLIP is used to encode user input. Other components such as upscalers add higher resolution to the results. There are many latent spaces involved, each with their own inflections, tendencies and limitations. Another important form of relation occurs when one model is trained on top of an existing one. For instance, as Stable Diffusion is open source, many coders have used it as a basis for further development. In [[CivitAI]], on a model page, there is a field called Base Model which indicates the model's "origin", an important information for those who will use it as they are entitled to expect a certain similarity between the model's behaviour and its base. As models are retrained, their inner latent space is modified, amplified or condensed. Their internal map is partially redrawn. But the resulting model retains many of the base model's features. The tensions between what a new model adds to the latent space and what it inherits is explored further in the [[LoRA]] entry. | ||

The abstract nature of the latent space makes it difficult to grasp. The introduction of techniques of prompting | The abstract nature of the latent space makes it difficult to grasp. The introduction of techniques of prompting, ''text-to-image'', made the exploration of latent space using natural language possible. And the ability to use images as input to generate other images, ''image-to-image'', has opened a whole field of possibilities for queries that may be difficult to formulate in words. While in [[pixel space]], images and texts belong to different perceptual registers and relate to different modes of experience of the world, things change in latent space. Once encoded as latent representations, they are both treated as vectors and participate smoothly to the same space. This multi-modal quality of existing models is in part possible because other components such as the [[Variational Autoencoder, VAE|variational autoencoder]] and [[Clip|CLIP]] can transform various media such as texts and images into vectors. And it is the result of a decade of pre-existing work on classification and image understanding in computer vision where algorithms learned how a tree is different from a lamp post, or a photo is different from a 18th century naturalist painting. | ||

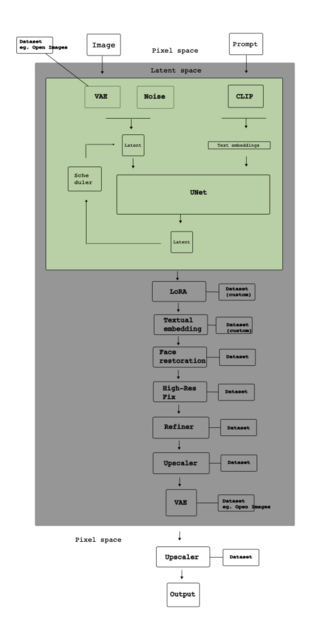

For a discussion of the implications of latent space, see also the entries [[diffusion]], [[LoRA]] and [[maps]]. [[File:Diagram-process-stable-diffusion-002.png|none|thumb|640x640px|A diagram of AI image generation (Stable Diffusion), separating 'pixel space' from 'latent space' - what you see, and what cannot be seen and with an overview of the inference process (by Nicolas Maleve)]] | |||

[[File:Diagram-process-stable-diffusion-002.png|none|thumb|640x640px|A diagram of AI image generation (Stable Diffusion), separating 'pixel space' from 'latent space' - what you see, and what cannot be seen and with an overview of the inference process (by Nicolas Maleve)]] | |||

| Line 28: | Line 18: | ||

[2] Blaschke, Estelle, Max Bonhomme, Christian Joschke, and Antonio Somaini. “Introduction. Photographs and Algorithms.” ''Transbordeur'' 9 (January 2025). <nowiki>https://doi.org/10.4000/13dwo</nowiki>. | [2] Blaschke, Estelle, Max Bonhomme, Christian Joschke, and Antonio Somaini. “Introduction. Photographs and Algorithms.” ''Transbordeur'' 9 (January 2025). <nowiki>https://doi.org/10.4000/13dwo</nowiki>. | ||

[3] | [3] wetcircuit. "as a wise man once said, there's not really ONE true model. They each have their 'look' and their quirks, they are not bottomless wells, so often we switch models to get fresh inspiration, or because one is very good at clean illustration while another is very cinematic..." ''Discord'', ''general-chat, Draw Things Official'', ''August 8, 2025''. | ||

[[Category:Objects of Interest and Necessity]] | [[Category:Objects of Interest and Necessity]] | ||

Latest revision as of 15:35, 26 August 2025

Latent space

In contrast to pixel space, where users engage with AI images perceptually, latent space is an abstract space internal to a generative model such as Stable Diffusion. It can be understood as a transitional space between the collection of images in a dataset and the generation of new images. In latent space, the dataset is translated into statistical representations that can be reconstructed back into images. As Joanna Zylinska explains:

In Stable Diffusion, it was the encoding and decoding of images in so-called ‘latent space’, i.e., a simplified mathematical space where images can be reduced in size (or rather represented through smaller amounts of data) to facilitate multiple operations at speed, that drove the model’s success.[1]
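Zylinska's point about reduced size can be made concrete with a little arithmetic. The sketch below assumes the commonly cited configuration of Stable Diffusion v1.x: a 512×512 RGB image is encoded by the variational autoencoder into a latent tensor with 4 channels at 64×64, a downsampling factor of 8 per side. Other versions and resolutions may use different numbers, and the helper names are illustrative only.

```python
# Assumed sizes for Stable Diffusion v1.x at its native 512x512 resolution:
# the VAE encoder shrinks each side by a factor of 8 and outputs 4 channels.
IMAGE_H, IMAGE_W, IMAGE_CHANNELS = 512, 512, 3   # RGB image in pixel space
DOWNSAMPLE = 8                                   # spatial reduction per side
LATENT_CHANNELS = 4                              # channels of the latent tensor


def pixel_values(h: int, w: int, c: int) -> int:
    """How many numbers are needed to store the image in pixel space."""
    return h * w * c


def latent_values(h: int, w: int, factor: int, c: int) -> int:
    """How many numbers the corresponding latent representation holds."""
    return (h // factor) * (w // factor) * c


pixels = pixel_values(IMAGE_H, IMAGE_W, IMAGE_CHANNELS)
latents = latent_values(IMAGE_H, IMAGE_W, DOWNSAMPLE, LATENT_CHANNELS)
ratio = pixels / latents

print(f"pixel space:  {pixels:,} values")
print(f"latent space: {latents:,} values")
print(f"reduction:    {ratio:.0f}x")
```

Under these assumptions the image goes from 786,432 numbers to 16,384, roughly 48 times fewer. This is why operations such as denoising can run much faster in latent space than directly on pixels.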

It is useful to think of this space as a map. As Estelle Blaschke, Max Bonhomme, Christian Joschke and Antonio Somaini explain:

A latent space consists of vectors (series of numbers, arranged in a precise order) that represent data points in a multidimensional space with hundreds or even thousands of dimensions. Each vector, with n number of dimensions, represents a specific data point, with n number of coordinates. These coordinates capture some of the characteristics of the digital object encoded and represented in the latent space, determining its position relative to other digital objects: for example, the position of a word in relation to other words in a given language, or the relationship of an image to other images or to texts.[2]
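The map metaphor can be illustrated with a toy example. The vectors below are invented, three-dimensional stand-ins. Real latent spaces are learned during training and have hundreds or thousands of dimensions. The names `points`, `distance` and `nearest` are hypothetical. The example shows that coordinates alone determine which objects are neighbours on the map. Euclidean distance is only one possible measure; many systems use cosine similarity instead.

```python
import math

# Hypothetical 3-dimensional coordinates, invented for illustration only.
points = {
    "tree":      (0.9, 0.1, 0.3),
    "forest":    (0.8, 0.2, 0.4),
    "lamp post": (0.1, 0.9, 0.2),
    "street":    (0.2, 0.8, 0.3),
}


def distance(a, b):
    """Euclidean distance between two coordinate vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(name):
    """Return the other point that lies closest to `name` on this toy map."""
    others = (other for other in points if other != name)
    return min(others, key=lambda other: distance(points[name], points[other]))


for name in points:
    print(name, "->", nearest(name))
```

In this toy map, "tree" and "forest" are each other's nearest neighbours, and so are "lamp post" and "street". The relations come entirely from the positions of the points.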

The relation between datasets and latent space is complex. A latent space is a translation of a given training set. In the process of model training, datasets are therefore central, and factors such as the curation method or the scale of the dataset have a great impact on the regularities that can be learned and represented as vectors. But a latent space is not a literal copy of a dataset; it is a statistical interpretation of it. A latent space gives a model its own identity. As WetCircuit (AKA Cutscene Artist), a prominent user of the Draw Things app and author of tutorials for it, puts it, a model is not "bottomless."[3] This is because the model's latent space is finite and therefore biased. This is an important argument in defense of a decentralized AI ecosystem that ensures a diverse range of worldviews.

The multiplication of models (and therefore of their latent spaces) produces various kinds of relations between them. In current generation pipelines, images are rarely the pure product of a single latent space. Several components intervene in image generation: small models such as LoRAs add capabilities to the base model, CLIP is used to encode the user's prompt, and upscalers increase the resolution of the results. Many latent spaces are involved, each with its own inflections, tendencies and limitations.

Another important form of relation occurs when one model is trained on top of an existing one. For instance, because Stable Diffusion is open source, many coders have used it as a basis for further development. On CivitAI, each model page has a field called Base Model, which indicates the model's "origin". This is important information for users, who can reasonably expect a certain similarity between the model's behaviour and that of its base. As models are retrained, their inner latent space is modified, amplified or condensed: their internal map is partially redrawn, but the resulting model retains many of the base model's features. The tensions between what a new model adds to the latent space and what it inherits are explored further in the LoRA entry.
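The balance between what a LoRA adds and what it inherits can be sketched numerically. In the LoRA technique, a base weight matrix W (d × k) is left frozen. The LoRA stores two small matrices, B (d × r) and A (r × k), and the adapted weight is W + scale · (B · A). In the original LoRA formulation, scale corresponds to alpha / r. The matrices below are invented, and the helper names are hypothetical. Real LoRAs apply this update to selected layers of a large model, not to a single 3 × 3 matrix.

```python
# Minimal sketch of a LoRA-style low-rank update; all numbers are invented.

def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add_scaled(w, delta, scale):
    """Return a NEW matrix W + scale * delta; the base W is left untouched."""
    return [[wi + scale * di for wi, di in zip(wr, dr)] for wr, dr in zip(w, delta)]


def param_counts(d, k, r):
    """Numbers stored by a full d x k matrix vs. a rank-r LoRA pair (B, A)."""
    return d * k, d * r + r * k


W = [[1.0, 0.0, 0.0],      # hypothetical frozen base weights (3 x 3)
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]  # 3 x 1, i.e. rank r = 1
A = [[0.5, 0.5, 0.0]]      # 1 x 3

delta = matmul(B, A)       # the full-size update, built from two thin matrices
W_adapted = add_scaled(W, delta, scale=1.0)

print(W_adapted)
print(param_counts(768, 768, 4))  # hypothetical layer size 768 x 768, rank 4
```

Only the rows touched by B change, and W itself is never overwritten. For a hypothetical 768 × 768 layer at rank 4, the LoRA stores 6,144 numbers against 589,824 in the full matrix. This shows in miniature why a LoRA redraws part of the map while inheriting most of the base model.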

The abstract nature of latent space makes it difficult to grasp. The introduction of prompting techniques (text-to-image) made it possible to explore latent space using natural language. The ability to use images as input to generate other images (image-to-image) has opened up a whole field of possibilities for queries that may be difficult to formulate in words. While in pixel space images and texts belong to different perceptual registers and relate to different modes of experiencing the world, things change in latent space. Once encoded as latent representations, both are treated as vectors and participate smoothly in the same space. This multimodal quality of existing models is possible in part because components such as the variational autoencoder and CLIP can transform different media, such as texts and images, into vectors. It is also the result of a decade of prior work on classification and image understanding in computer vision, in which algorithms learned how a tree differs from a lamp post, or a photograph from an 18th-century naturalist painting.
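The claim that texts and images "participate in the same space" can be illustrated with a toy, CLIP-like comparison. It is assumed here that a text encoder and an image encoder have already mapped a prompt and several pictures to vectors of the same length. The vectors, file names and helper names are invented for illustration. Real CLIP embeddings have hundreds of dimensions.

```python
import math

# Hypothetical embeddings, invented for illustration. In a CLIP-like model a
# text encoder and an image encoder output vectors in one shared space.
text_query = ("a tree", (0.9, 0.1, 0.2))
images = {
    "photo_of_oak.jpg":    (0.8, 0.2, 0.1),
    "street_at_night.jpg": (0.1, 0.9, 0.3),
    "botanical_print.jpg": (0.7, 0.1, 0.6),
}


def cosine(a, b):
    """Cosine similarity: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def rank_images(query_vec, candidates):
    """Order image names by similarity to a query vector, best match first."""
    return sorted(candidates, key=lambda n: cosine(query_vec, candidates[n]), reverse=True)


label, vec = text_query
for name in rank_images(vec, images):
    print(f"{label!r} vs {name}: {cosine(vec, images[name]):.3f}")
```

Because the text vector and the image vectors share one space, the same function also works for an image-to-image query. You only need to pass an image vector as `query_vec`.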

For a discussion of the implications of latent space, see also the entries diffusion, LoRA and maps.

[1] Zylinska, Joanna. “Diffused Seeing: The Epistemological Challenge of Generative AI.” Media Theory 8, no. 1 (2024): 229–258. https://doi.org/10.70064/mt.v8i1.1075.

[2] Blaschke, Estelle, Max Bonhomme, Christian Joschke, and Antonio Somaini. “Introduction. Photographs and Algorithms.” Transbordeur 9 (January 2025). https://doi.org/10.4000/13dwo.

[3] wetcircuit. "as a wise man once said, there's not really ONE true model. They each have their 'look' and their quirks, they are not bottomless wells, so often we switch models to get fresh inspiration, or because one is very good at clean illustration while another is very cinematic..." Discord, general-chat, Draw Things Official, August 8, 2025.