== Latent space ==

In contrast to 'pixel space', where users engage with AI images perceptually, the latent space is an abstract space internal to a generative model such as Stable Diffusion. It can be understood as a transitional space between the collection of images in a dataset and the generation of new images. In the latent space, the dataset is translated into statistical representations that can be reconstructed back into images. For a more complete discussion of latent space, see also [[diffusion]] and [[maps]]. As explained by Joanna Zylinska:

In Stable Diffusion, it was the encoding and decoding of images in so-called ‘latent space’, i.e., a simplified mathematical space where images can be reduced in size (or rather represented through smaller amounts of data) to facilitate multiple operations at speed, that drove the model’s success.[1]
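To give a sense of how much smaller the latent representation is, the arithmetic below compares the number of values in an image with the number of values in its latent. It is a minimal sketch that assumes the commonly cited Stable Diffusion v1 configuration (512x512 RGB images, a VAE that shrinks height and width by a factor of 8 and outputs 4 channels); other versions and resolutions differ, and the constant names are illustrative.

```python
import math

# Assumed Stable Diffusion v1 configuration: 512x512 RGB images, a VAE that
# shrinks height and width by a factor of 8 and outputs 4 latent channels.
PIXEL_SHAPE = (512, 512, 3)  # height, width, colour channels
DOWNSAMPLE_FACTOR = 8
LATENT_CHANNELS = 4

def latent_shape(pixel_shape, factor=DOWNSAMPLE_FACTOR, channels=LATENT_CHANNELS):
    """Shape of the latent that the VAE encoder produces for an image of pixel_shape."""
    height, width, _ = pixel_shape
    return (height // factor, width // factor, channels)

latent = latent_shape(PIXEL_SHAPE)
pixel_values = math.prod(PIXEL_SHAPE)
latent_values = math.prod(latent)
ratio = pixel_values / latent_values
print(latent, pixel_values, latent_values, ratio)
# prints (64, 64, 4) 786432 16384 48.0
```

Under these assumptions, each image is handled as a latent with 48 times fewer values, which is one reason the operations described by Zylinska can run "at speed".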

Latent space is a highly abstract space, and the behaviour of the computational models operating in it can be difficult to explain. As also explained in [[maps]], and very briefly put, diffusion models are trained by gradually adding noise to images and learning to reverse that process, removing the noise step by step until an image emerges. In Stable Diffusion, this happens on compressed representations of the images: a [[Variational Autoencoder, VAE]] encodes images into the latent space and decodes the denoised results back into images. In the process of model training, [[Dataset|datasets]] are central. Many of the datasets used to train models are made by 'scraping' the internet. [https://commoncrawl.org/ Common Crawl], for example, is a non-profit organisation that has built a repository of 250 billion web pages. [https://storage.googleapis.com/openimages/web/index.html Open Images] and [https://www.image-net.org/ ImageNet] are also commonly used as a backbone for training visual AI models.
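The noising half of this process can be written down quite compactly. The sketch below follows the standard formulation of forward diffusion, in which a noisy version of the data at step t is a weighted mix of the original values and Gaussian noise. The linear noise schedule, its values, and the four-number "latent" are illustrative assumptions rather than Stable Diffusion's actual settings, and the trained network that learns to remove the noise is not shown.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts, rising linearly from beta_start to beta_end (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: the fraction of the original signal's variance left after t steps."""
    result, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        result.append(prod)
    return result

def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bar[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps  # during training, a network learns to predict eps from (xt, t)

rng = random.Random(0)
alpha_bar = cumulative_alphas(linear_betas(1000))
latent = [0.5, -1.2, 0.3, 0.9]  # hypothetical stand-in for an image's latent
for t in (0, 500, 999):
    xt, _ = add_noise(latent, t, alpha_bar, rng)
    # sqrt(alpha_bar_t) shows how much of the original signal is still present
    print(t, round(math.sqrt(alpha_bar[t]), 3), [round(v, 2) for v in xt])
```

At early steps the values stay close to the original; by the last step almost nothing of the original signal remains. Generation runs in the opposite direction, starting from noise.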

Contrary to common belief, a model does not rely on just one dataset. Image generation typically combines multiple models and datasets, for instance to correct facial or other bodily details (such as too many fingers on one hand), to 'upscale' low-resolution images, or to 'refine' the details in an image. Importantly, when it comes to autonomous AI image creation, there is typically an organisation and a community behind each dataset and each training process. [[LAION]] (Large-scale Artificial Intelligence Open Network) is a good example of this. It, too, is a non-profit community organisation that develops and offers free models and datasets. Stable Diffusion was trained on datasets created by LAION.

For a model to work, it needs to capture the semantic relationship between text and image: how a tree differs from a lamp post, say, or a photo from an 18th-century naturalist painting. The model [[Clip|CLIP]] (Contrastive Language-Image Pre-training, developed by OpenAI) is widely used for this, including by LAION. CLIP is trained to predict which images in a dataset are paired with which texts.

Once trained, CLIP can compute representations of images and text, called embeddings, and then measure how similar they are. The model can thus be used for a range of tasks, such as image classification or retrieving similar images or texts.[2]
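The comparison step can be illustrated with cosine similarity, the measure CLIP uses to compare its (normalised) image and text embeddings. In this sketch the embeddings are made-up four-dimensional vectors standing in for the output of CLIP's encoders, which in reality produce vectors with hundreds of dimensions; the captions and the `classify` helper are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two embeddings: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def classify(image_embedding, text_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    scores = {
        caption: cosine_similarity(image_embedding, embedding)
        for caption, embedding in text_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical embeddings; real ones would come from CLIP's text and image encoders.
text_embeddings = {
    "a photo of a tree": [0.9, 0.1, 0.0, 0.2],
    "a photo of a lamp post": [0.1, 0.9, 0.1, 0.0],
    "an 18th-century naturalist painting": [0.3, 0.0, 0.9, 0.1],
}
image_embedding = [0.8, 0.2, 0.1, 0.3]  # hypothetical embedding of a photo of a tree

best, scores = classify(image_embedding, text_embeddings)
print(best)  # prints a photo of a tree
for caption, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{score:.3f}  {caption}")
```

The same ranking, read in the other direction (one text against many images), is what retrieval of similar images amounts to.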

The annotation of images is central here. For a dataset to be useful, there need to be descriptions of what the images show, what style they are in, their aesthetic qualities, and so on. Whereas ImageNet, for instance, crowdsources the annotation process, LAION uses Common Crawl to find HTML pages containing <code><img></code> tags, and then uses their alt text to annotate the images. (Alt text is a description that acts as a substitute for a visual item on a page; it is sometimes added to the image tag to increase accessibility, for instance for users of screen readers.) This is a highly cost-effective solution, which has enabled the LAION community to produce and make publicly available a range of datasets and models that can be used in generative AI image creation.
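The basic idea of harvesting image-text pairs from alt text can be sketched with Python's built-in HTML parser. This is an illustration under simple assumptions, not LAION's actual code: LAION works on Common Crawl's archived data at a vastly larger scale and applies further filtering (including CLIP-based similarity checks) that is not shown. The page content and helper names below are hypothetical.

```python
from html.parser import HTMLParser

class AltTextCollector(HTMLParser):
    """Collect (image URL, alt text) pairs from <img> tags in an HTML page."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        alt = (attributes.get("alt") or "").strip()
        # Skip images without a source or without a usable description.
        if src and alt:
            self.pairs.append((src, alt))

def extract_image_text_pairs(html):
    parser = AltTextCollector()
    parser.feed(html)
    parser.close()
    return parser.pairs

page = """
<html><body>
  <img src="https://example.org/oak.jpg" alt="An old oak tree in a meadow">
  <img src="https://example.org/spacer.gif" alt="">
  <img src="https://example.org/lamp.png">
</body></html>
"""
print(extract_image_text_pairs(page))
# prints [('https://example.org/oak.jpg', 'An old oak tree in a meadow')]
```

Only the first image yields a pair: decorative images with empty alt text, and images with no alt text at all, carry no annotation to learn from.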

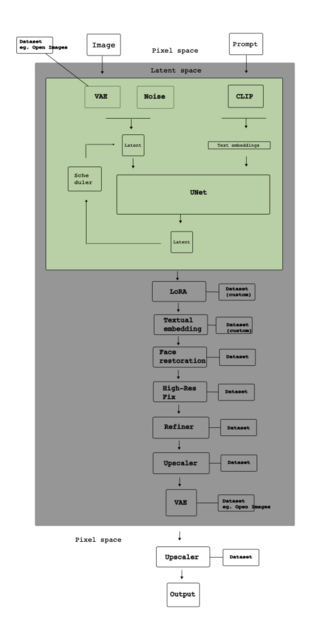

[[File:Diagram-process-stable-diffusion-002.png|none|thumb|640x640px|A diagram of AI image generation (Stable Diffusion) that separates 'pixel space' from 'latent space', i.e. what you see from what cannot be seen, with an overview of the inference process (by Nicolas Maleve)]]

[1] Joanna Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” ''Media Theory'' 8, no. 1 (2024): 229–258, <nowiki>https://doi.org/10.70064/mt.v8i1.1075</nowiki>.

[2] Maximilian Schreiner, “New CLIP Model Aims to Make Stable Diffusion Even Better,” ''The Decoder'', September 18, 2022, <nowiki>https://the-decoder.com/new-clip-model-aims-to-make-stable-diffusion-even-better/</nowiki>.

[[Category:Objects of Interest and Necessity]]

Latest revision as of 16:10, 20 August 2025
