Workshop on "Objects of Interest and Necessity", part 2

INTRO:

What is an "object of interest"?

With the notion of an ‘object of interest’, a guided tour of a place, a museum or a collection likely comes to mind. One may easily read this compilation of texts as a catalogue for such a tour of a social and technical system, where we stop and wonder about the different objects that, in one way or another, take part in the generation of images with Stable Diffusion.

Perhaps a 'guided tour' also limits our understanding of what objects of interest are? Take, for instance, the famous Kepler space telescope, whose mission was to search the Milky Way for exoplanets. Among all the stars, there is only a limited number of candidates for these so-called Kepler Objects of Interest (KOI).

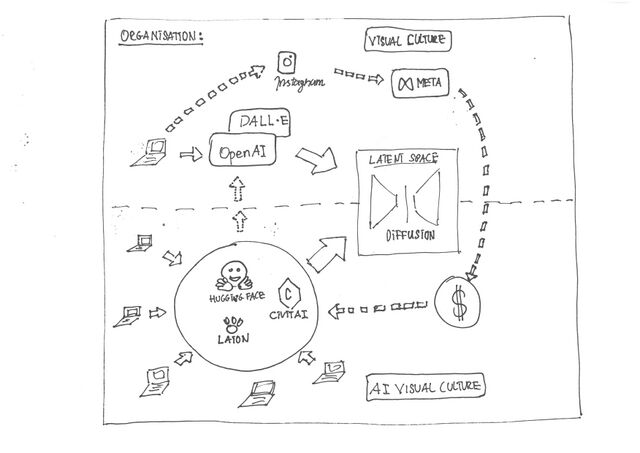

What makes AI livable? What relations and dependencies between communities, models, capital, technical units and more underlie these technical objects of interest?

Objects also have an associative power: they can literally create memories and make a story come alive. These texts are therefore not just a collection of objects that make generative AI images, but an exploration of an imaginary of AI image creation through the collection and exhibition of objects, and in particular an imaginary of ‘autonomy’ from mainstream capital platforms.

Building an imaginary (in a Wiki)

What is 'autonomy'? What does it mean to separate from capital interest?

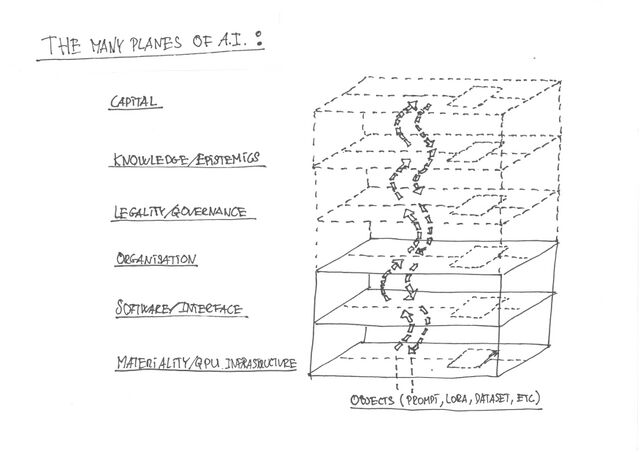

Not 'one thing'

The range of agents, dependencies, flows of capital and so on can be difficult to comprehend, and it is in constant flux.

We have tried to capture this in our descriptions of the objects, guided by a set of questions that we address directly or indirectly in the different entries of our 'tour guide':

- What is the network that sustains this object?

- How does it evolve through time?

- How does it create value? Or decrease or otherwise affect value?

- What is its place/role in techno-cultural strategies?

- How does it relate to autonomous infrastructure?

The wiki: https://ctp.cc.au.dk/w (choose "Objects of interest and necessity")

Please leave comments if you like.

The booklet / guide

- is made without Adobe, using 'web-to-print' techniques

Agenda

Visiting the different 'lands' of AI imaging: looking at the map and experimenting in practice (what one can do in each land)

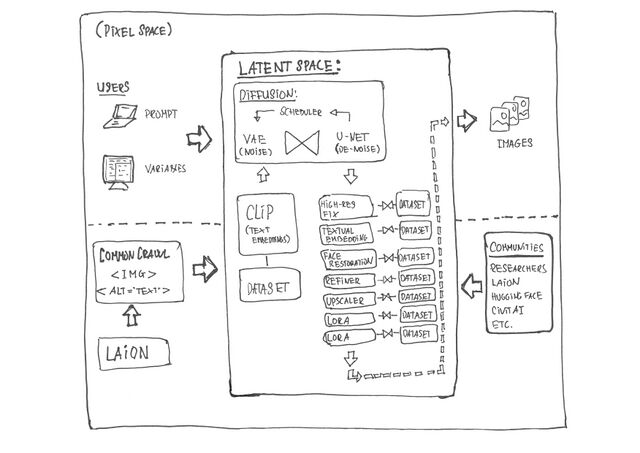

- The Land of Pixels (Pixel Space) // installing and playing with software

- The Land of Latents (Latent Space) // making 'LoRAs' on our own machines

- The Land of GPUs (The Different 'Planes' of AI) // making images using our own computers or Stable Horde (P2P), and spending 'kudos'.

PART ONE: 'The Land of Pixels'

Interfaces: 'out-of-the-box prompting' vs. 'the expert interface'

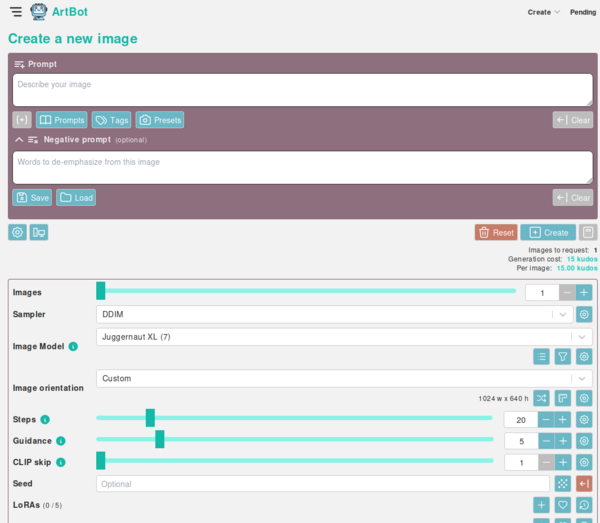

https://tinybots.net/artbot/create

https://huggingface.co/spaces/ChenDY/NitroFusion_1step_T2I

Experiment

We will be using:

1. Artbot

- Download from website (NOT app store)

- Do NOT use cloud version

3. NitroFusion

Play with models, prompts, negative prompts or perhaps other parameters.

NEXT: Where do the models come from? Creating annotated datasets from visual culture

Communities feeding generative AI

Annotation labour

Datasets as a site where visual cultures meet (and clash)

The cultures of image generation

https://makertube.net/w/7EZnBc7jpoZo9EwxUteSC9

PART TWO: 'The Land of Latents'

Diffusion
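As a rough orientation for the 'Land of Latents': Stable Diffusion 1.x does not denoise pixels directly but a compressed latent produced by its VAE, which shrinks a 512x512 RGB image to a 4x64x64 tensor (8x downsampling per side, 4 channels). The model is trained to undo a forward process that gradually mixes this latent with Gaussian noise, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch below computes the size ratio and applies that forward step to a toy latent. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is the one from the original DDPM paper and is an assumption here; Stable Diffusion itself uses a slightly different ('scaled linear') schedule.

```python
import math
import random

# Stable Diffusion 1.x: 512x512 RGB pixels -> 4 x 64 x 64 latent (VAE downsamples by 8 per side)
PIXEL_SHAPE = (3, 512, 512)
LATENT_SHAPE = (4, 64, 64)


def size(shape):
    """Number of values in a tensor of the given shape."""
    return math.prod(shape)


def alpha_bars(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    out, prod = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, abar, rng):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, 1)."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    print(size(PIXEL_SHAPE) // size(LATENT_SHAPE))  # 48: the latent holds 48x fewer values
    abar = alpha_bars()
    rng = random.Random(0)
    toy_latent = [0.5] * 8  # a tiny stand-in for a real 4x64x64 latent
    print(add_noise(toy_latent, 10, abar, rng))   # still close to the original values
    print(add_noise(toy_latent, 999, abar, rng))  # almost pure noise
```

Generating an image runs this in reverse: starting from pure noise in latent space, the model removes a little predicted noise at each step, and only at the end does the VAE decode the latent back into pixels.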

Reflexive prompting

Prompting for bias

Men washing the dishes

Women fixing airplanes

Men fixing airplanes (by comparison)

Fixing a street in Aarhus?

Letting the model "speak"

- Empty prompt, what is the threshold?

- Using generic words: someone, somewhere in summertime

- Using only negative prompting, what is a negative "man"?
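These experiments lean on how prompts and negative prompts usually enter Stable Diffusion. With classifier-free guidance, the model predicts the noise twice per step, once conditioned on the prompt and once on an 'unconditional' input, and then extrapolates away from the second: ε = ε_uncond + s·(ε_cond − ε_uncond). Common implementations (Hugging Face diffusers, for example) put the negative prompt's embedding in the unconditional slot, which is otherwise filled by the empty prompt. So an empty prompt with no negative gives the guidance nothing to push against, and a negative "man" pushes every step away from whatever "man" contributes. The sketch uses made-up three-number 'noise predictions' instead of a real model; the numbers and the guidance scale of 7.5 are assumptions for illustration only.

```python
def guided_noise(eps_cond, eps_uncond, scale):
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    return [u + scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]


# Hypothetical noise predictions (a real model outputs a 4x64x64 tensor per step).
eps_empty = [0.10, 0.20, 0.30]  # prediction for the empty prompt ""
eps_man = [0.40, 0.20, 0.00]    # prediction for the prompt "man"

# Ordinary prompt "man": pushed away from the empty prompt, toward "man".
print(guided_noise(eps_man, eps_empty, 7.5))

# Empty prompt plus negative prompt "man": the negative takes the unconditional slot,
# so the step is pushed away from what "man" would contribute.
print(guided_noise(eps_empty, eps_man, 7.5))

# Empty prompt, no negative: both inputs are identical, so guidance changes nothing
# and the model's 'default' tendencies show through.
print(guided_noise(eps_empty, eps_empty, 7.5))
```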

Examples of LoRAs (making stories and 'fixing' things with LoRAs)

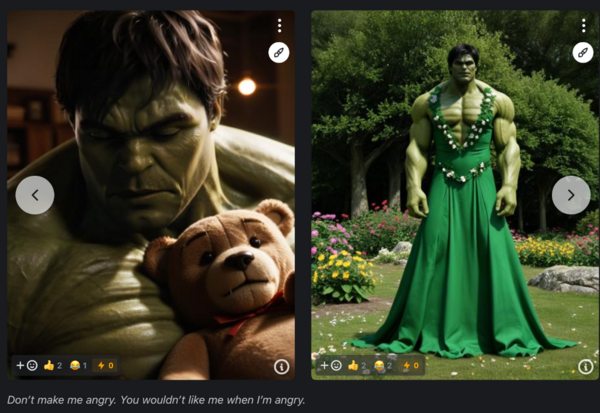

LoRA: "The Incredible Hulk (2008)"

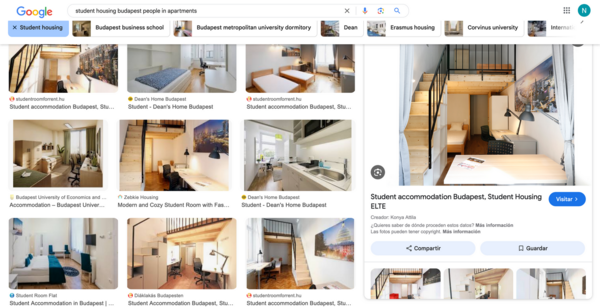

A kitchen in Budapest

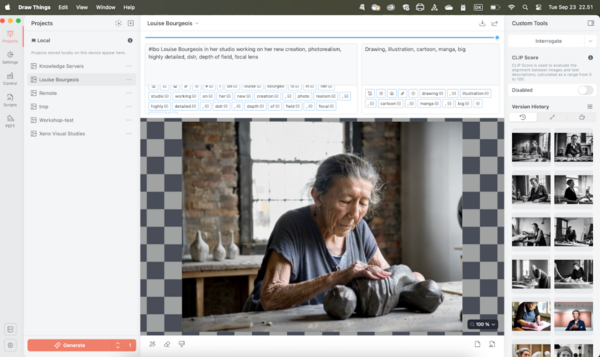

The representation of women artists, Louise Bourgeois

-

An image of Louise Bourgeois with the Real Vision model

-

Louise Bourgeois (Real Vision)

-

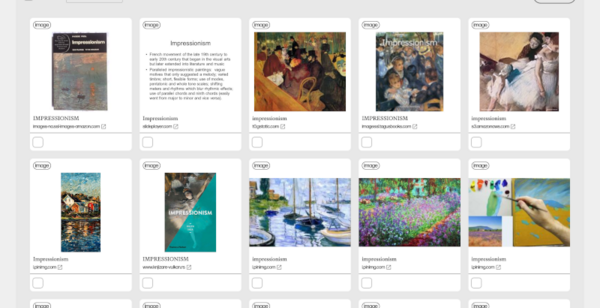

A screenshot of a search query for Louise Bourgeois

-

Selected images from the search results

-

Annotations for the dataset in the Draw Thing interface

-

An image generated by Real Vision with LoRA

-

An image generated by Real Vision with LoRA

The movie is on OneDrive.

What are we going to fix? What stories are we going to tell?

Practical example: make a LoRA with Draw Things

- Open Draw Things -> Go to 'PEFT'

- Select a "Base Model" (to fine-tune) // maybe install a 'light' one first to speed things up

- Name your LoRA & select a 'trigger word' // choose one you will remember (or note it down) and one that is unique, e.g. "menwashingdishes" rather than "men" (which is too generic and too prevalent in the foundation model)

- Define 'Training Steps' (scroll down to find it) and set it to e.g. 1,000 // the more steps, the longer the wait

- Add images to train on. 12 images will work. We have prepared a folder as an option.

- Annotate the images // what should you think about when annotating?

- Start training ..... WAIT and WAIT and WAIT (for more than an hour)
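What is being trained here is a LoRA (Low-Rank Adaptation): the base model's weights stay frozen, and for selected weight matrices W (d x k) training learns two small matrices B (d x r) and A (r x k) whose product is added on top, W' = W + (α/r)·B·A. This is why a LoRA file is small and why it can be switched on and off over a base model. The plain-Python sketch below applies such an update and counts parameters; the 768x768 layer size, rank 8 and α values are example numbers we have assumed, not Draw Things' settings. It also does the arithmetic behind the training-steps setting: assuming one image per training step (batch size 1, also an assumption), 1,000 steps over 12 images means each image is seen about 83 times.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A, where r is the rank (columns of B, rows of A)."""
    r = len(a)
    delta = matmul(b, a)
    return [[wij + (alpha / r) * dij for wij, dij in zip(wrow, drow)]
            for wrow, drow in zip(w, delta)]


def param_counts(d, k, r):
    """Trainable values for full fine-tuning of a d x k matrix vs. a rank-r LoRA."""
    return d * k, r * (d + k)


def passes_over_dataset(steps, n_images, batch_size=1):
    """How many times each image is seen, on average, during training."""
    return steps * batch_size / n_images


if __name__ == "__main__":
    # Tiny example: 2x2 frozen weight, rank-1 update.
    w = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0], [2.0]]   # d x r
    a = [[0.5, 0.5]]     # r x k
    print(apply_lora(w, b, a, alpha=1.0))  # [[1.5, 0.5], [1.0, 2.0]]

    full, lora = param_counts(768, 768, 8)
    print(full, lora)                      # 589824 12288
    print(round(passes_over_dataset(1000, 12)))  # 83
```

The rank r is the main trade-off: a higher rank can capture more of the training images but makes the file larger and makes it easier to overfit a small set of 12 images.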

NOTE: Annotating an image is like writing a caption; or think of the prompt you would write yourself to generate this image. When prompting with the LoRA enabled, you can use the same words again. The prompt is the reverse annotation, and the annotation is the reverse prompt.

Prepared images:

Annotation software (optional):

- Get started (bottom right)

- When finished: Actions -> Export Annotations -> CSV file

- Share this file with us together with the images if you want to train on one of our machines.
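If you annotate with the optional annotation software and export a CSV, the captions still have to be paired with the images, each starting with your trigger word. Many LoRA training tools read one plain-text caption file per image with the same base name (photo01.jpg next to photo01.txt); we do not assume that Draw Things reads such files, since it has its own annotation fields. The sketch below assumes an export with hypothetical columns named `image` and `caption`: check the actual header of your file and adjust the two constants. The trigger word "menwashingdishes" is the example from the LoRA steps.

```python
import csv
from pathlib import Path

# Hypothetical column names: check the header of your exported CSV and adjust.
IMAGE_COLUMN = "image"
CAPTION_COLUMN = "caption"


def with_trigger(trigger, caption):
    """Put the trigger word first, unless the caption already starts with it."""
    caption = caption.strip()
    if caption.lower().startswith(trigger.lower()):
        return caption
    return f"{trigger}, {caption}" if caption else trigger


def write_caption_files(csv_path, image_dir, trigger):
    """Write one .txt caption next to each image listed in the CSV; return the files written."""
    written = []
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            image = Path(image_dir) / row[IMAGE_COLUMN]
            if not image.exists():
                print(f"skipping {image.name}: no such image")
                continue
            txt = image.with_suffix(".txt")
            txt.write_text(with_trigger(trigger, row[CAPTION_COLUMN]) + "\n", encoding="utf-8")
            written.append(txt)
    return written
```

For example, `write_caption_files("annotations.csv", "images", "menwashingdishes")` would turn a caption such as "a man washing dishes in a sink" into "menwashingdishes, a man washing dishes in a sink". Reusing those words in a prompt with the LoRA enabled is the 'reverse annotation' at work.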

Discussion while 'baking' the LoRA

For example:

- How to think of images?

- How to work conceptually?

- How to annotate and what to think of?

- The relation between prompts and models?

- How to make pipelines of models/datasets?

- ?

PART THREE: 'The Land of GPUs (Materials/Organisations)' ... on the different 'planes' of AI