Sami Itävuori - The Virtual Viewer: image aesthetic assessment and digitized museum art collections

When museums digitize their collections, they enable new "accidents"[1] or accidental images to emerge. Since the 1990s, major art museums have undertaken significant digitization programs of their collections, photographing hundreds of thousands of artworks which are then made publicly available on large online collection platforms such as Tate's Art and Artists page or Google Arts & Culture, to name just two [2] (Screenshot 1).

But the digital photograph of the artwork still stands as a substitute for the original haptic and context-specific object, which is tied to a subjective experience of beauty, whether that experience comes from encountering the work in the gallery or from closely examining high-resolution images online through sophisticated zooming tools. The mainstreaming of text-to-image generative AI, with platforms like DALL-E and Midjourney generating over 15 billion images in 2024 [3], has created both fascination and concern in museum circles. While this technology appears visually familiar, it challenges traditional concepts of artistic creation and experience, provoking widespread puzzlement and sensationalism across the Galleries, Libraries, Archives and Museums sector. [4] However, this apparent divide between AI and museums can be bridged by viewing the social and technological practices of both spheres within a borderzone where the same image is understood and treated differently rather than in opposition. This means looking at how the museum is already connected to AI production pipelines, rather than treating it as something inherently outside of them.

For instance, a cursory search of an 8-million-image subset of LAION-5B[5], a widely used dataset of text-image pairs, shows the presence of 364 images directly scraped from Tate URLs and many more second-hand copies of Tate photographs from amateur blogs and websites. The structure of this dataset also illuminates the classification systems that make images machine-readable. Images in LAION are attributed an aesthetic score by a predictor built on CLIP, a model that embeds images and text in a shared space. The score provides a measurement of the beautiful qualities or visual appeal of an image, and is used to determine the image's importance in the training of generative AI systems tasked with outputting pleasant and appealing images for their users.
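The search described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure actually used for the figures quoted here: the record layout (dictionaries with a `url` and a `caption` key), the sample URLs and the function names are all hypothetical, and any host ending in `tate.org.uk` is treated as a Tate URL.

```python
from urllib.parse import urlparse

# Hypothetical sample records. Real LAION metadata is far larger, and its
# column names may differ from the `url` / `caption` keys assumed here.
SAMPLE_RECORDS = [
    {"url": "https://www.tate.org.uk/art/images/example-1.jpg", "caption": "a landscape"},
    {"url": "https://media.tate.org.uk/example-2.jpg", "caption": "a portrait"},
    {"url": "https://example.com/blog/tate.org.uk-copy.jpg", "caption": "a blog repost"},
    {"url": "https://nottate.org.uk/example-3.jpg", "caption": "an unrelated site"},
]


def is_from_domain(url: str, domain: str) -> bool:
    """Return True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def count_direct_hits(records, domain: str = "tate.org.uk") -> int:
    """Count records whose image URL points directly at the given domain."""
    return sum(1 for record in records if is_from_domain(record["url"], domain))


if __name__ == "__main__":
    print(count_direct_hits(SAMPLE_RECORDS))
```

A host-based filter of this kind can only surface images scraped directly from the museum's own servers. The second-hand copies hosted on blogs and other websites would need a different approach, such as caption matching or image similarity, which this sketch does not attempt.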

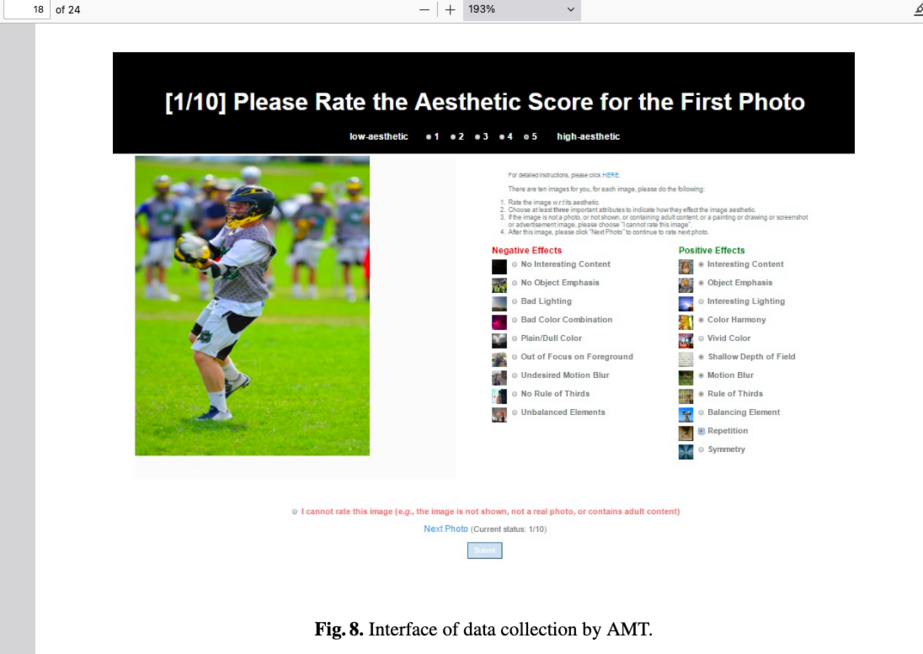

Aesthetic scoring requires Image Aesthetic Assessment (IAA) software that evaluates the visual quality and aesthetic appeal of images on a scale of 0-10 (see screenshot 3). Computer scientists working on IAA, drawing from the neurosciences, psychology, art theory or even photography manuals, create quantifiable conceptions of beauty based either on the qualities of an image or on its impact on a viewer. These assessments can be based on a variety of formal qualities (composition, colour or lighting, for example; see the list of qualities used in IAA in Annex 1) or trained on user-generated data from platforms like DPChallenge or the /r/photocritique subreddit [6], where beauty emerges through statistical analysis of user preferences and feedback (Maleve and Sluis 2023, Palmer and Sluis 2024).[7][8]
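To make the first, feature-based approach concrete, here is a toy sketch in Python. It is not taken from any of the IAA systems discussed in this text: the three features (mean brightness, mean saturation, brightness contrast), the weights and the function names are all assumptions chosen for illustration. Real systems use far richer features or learn them from rating data.

```python
import colorsys
from statistics import mean, pstdev

# Hypothetical weights: an assumption for illustration only, not values
# from any published model. They sum to 1 so the score stays within 0-10.
WEIGHTS = {"brightness": 0.3, "saturation": 0.4, "contrast": 0.3}


def extract_features(pixels):
    """Compute three formal features, each in [0, 1].

    `pixels` is a non-empty list of (r, g, b) tuples with channels in 0..255.
    """
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]
    saturations = [s for _, s, _ in hsv]
    values = [v for _, _, v in hsv]
    return {
        "brightness": mean(values),
        "saturation": mean(saturations),
        # The standard deviation of values in [0, 1] is at most 0.5,
        # so doubling it rescales contrast to [0, 1].
        "contrast": min(1.0, 2 * pstdev(values)),
    }


def aesthetic_score(pixels) -> float:
    """Map the weighted features onto a 0-10 scale."""
    features = extract_features(pixels)
    weighted = sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return round(10 * weighted, 2)


if __name__ == "__main__":
    flat_grey = [(128, 128, 128)] * 4
    vivid = [(255, 0, 0), (0, 0, 255), (255, 255, 0), (0, 0, 0)]
    print(aesthetic_score(flat_grey), aesthetic_score(vivid))
```

In this sketch a flat grey image scores lower than a saturated, high-contrast one simply because of how the weights were set. The weights are where a particular conception of beauty gets fixed as numbers, whether they are hand-picked as here or fitted to the votes of a community of users.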

This computational approach to beauty depends on artwork digitization and online circulation, while favoring theories of art and aesthetic experience that lend themselves to statistical formalization and computation. The resulting computational formalism[9] creates an AI art connoisseur that exists alongside rather than outside traditional museum practices, valuing images for their utility in training generative AI systems. This computational paradigm is an unintended “accident” of collection digitization (and of art theory) and as such shares with the museum the same border images, which are used and understood in different ways across communities of practice. This positioning means that museums such as Tate are particularly well suited to make their collections “connect”[10] to emerging advanced technologies that utilize, operationalize and often privatize public and collective data with wide societal impacts. The museum’s societal role in continuous education and civic culture about cultural technologies can then be fully rethought in light of this museum → AI → museum pipeline.

Annex 1

| Authors | Year | Article | Problem | Application | Features | Model of the human viewer |
| --- | --- | --- | --- | --- | --- | --- |
| Tang, Luo and Wang | 2011 | Content-Based Photo Quality Assessment | Image Aesthetic Assessment using feature extraction based on a set of "generally accepted" compositional rules that make a good photo | Photography | Composition; Lighting; Color Arrangement; Camera Setting; Topic Emphasis | Explicit modelling of human perception but no actual sources from neurobiology, neuroaesthetics or psychology (the only such paper is on emotion analysis in color, and the paper cited for human perception of backgrounds is a paper on feature extraction in computer vision that does not cite neuropsychology). The human eye extracts subjects from the background. Humans respond uniformly across cultures to color (emotion analysis) |
| Datta, Joshi, Li and Wang | 2006 | Studying Aesthetics in Photographic Images Using a Computational Approach | Feature-based assessment of low-level information in photographic images. Both a binary scale of low to high and a regression scale from 0 to 7 | Photography | Exposure to Light; Colorfulness; Saturation; Hue; Rule of Thirds (composition of plane); Familiarity; Wavelet-based Texture (grain); Size and Aspect Ratio; Region Composition; Depth of Field; Shape Convexity | Modeling of human perception based on Rudolf Arnheim's 1954/1974 psychology book "Art and Visual Perception: A Psychology of the Creative Eye", i.e. art is the subject of fundamental cognitive processes which produce and apprehend visual culture through fundamental visual features |
| Nishiyama, Okabe, Sato and Sato | 2011 | Aesthetic Quality Classification of Photographs Based on Color Harmony | Image Aesthetic Assessment using color harmony measurements following a bag-of-features method | Photography | Chroma; Hue; RGB; Blurs; Edge definition; Saliency | No mention of the human |
| Dhar, Ordonez and Berg | 2011 | High Level Describable Attributes for Predicting Aesthetics and Interestingness | Automatic image classifier assessing aesthetics for image retrieval | Photography | Composition and Layout; Content attributes related to scene type; Sky-illumination attributes | View preference theory derived from psychology: S. Palmer, E. Rosch, and P. Chase. Canonical perspective and the perception of objects. In Attention and Performance, 1981. |
| Rossano Schifanella, Miriam Redi, Luca Maria Aiello | 2015 | An Image is Worth More than a Thousand Favorites: Surfacing the Hidden Beauty of Flickr Pictures | Use Flickr likes and comments to train an automatic image selector that brings visibility to beautiful images with low popularity, i.e. democratize photographic visibility on social media. Use crowdsourcing to annotate images from 1 to 5, provide descriptors and build a large dataset (9 million) | Photography | Regressed compositional features derived from common photographic rules: Color patterns (contrast, hue, saturation, brightness); Spatial arrangement features (rule of thirds); Textural features (overall complexity and homogeneity of an image) | No explicit articulation of a human subject position, but quotes Machajdik, J., and Hanbury, A. (2010), who develop a psychological model of art perception which relies heavily on the Itten diagram for the association of color composition with emotional response and how this may be computed. |
| Jana Machajdik and Allan Hanbury | 2010 | Affective Image Classification using Features Inspired by Psychology and Art Theory | Develop a computational method that is able to extract low-level and high-level features to predict the emotional response to an image based on psychological and art theory | Photography and Visual Art | Color; Textures; Composition; Context | Formal elements of a picture (such as art) have an impact on the emotional response of a human |
| Kong, S., Shen, X., Lin, Z., Mech, R., & Fowlkes, C. | 2016 | Photo aesthetic ranking network with attributes and content adaptation. | AADB participating subjects asked to provide significant features themselves using Amazon Mechanical Turk micro workers. Sample limited to 5-6 raters per image | Photography | 1. “balancing element” – whether the image contains balanced elements; 2. “content” – whether the image has good/interesting content; 3. “color harmony” – whether the overall color of the image is harmonious; 4. “depth of field” – whether the image has shallow depth of field; 5. “lighting” – whether the image has good/interesting lighting; 6. “motion blur” – whether the image has motion blur; 7. “object emphasis” – whether the image emphasizes foreground objects; 8. “rule of thirds” – whether the photography follows rule of thirds; 9. “vivid color” – whether the photo has vivid color, not necessarily harmonious color; 10. “repetition” – whether the image has repetitive patterns; 11. “symmetry” – whether the photo has symmetric patterns. | Features determined "in consultation with professional photographers" and based on "traditional photographic principles" |
| Chen Kang, Giuseppe Valenzise, and Frédéric Dufaux | 2020 | EVA: An Explainable Visual Aesthetics Dataset | EVA is a crowdsourced dataset that contains subjective attributes for aesthetics ranging from low-level features (color, light etc.) to semantic preferences. It also asks voters for their certainty in order to evaluate the reliability of votes for each image | Photography | Light and colour; Composition and depth; Quality; Semantics of the image | Preferences have been handcrafted and selected based on previous studies that used simplified features for naive users (anyone non-professional). The usefulness of the proposed features was then tested on crowdsourced volunteers and the relative weight of each feature was weighted for different categories |
| Luca Marchesotti, Naila Murray, Florent Perronnin | 2014 | Discovering beautiful attributes for aesthetic image analysis | Derive high-quality aesthetic features from large image-text datasets which contain detailed comments about the aesthetic quality of the images. Improving on their work on AVA, the authors propose to train a new model which first has a large language model trained to extract aesthetic features from DPChallenge comments on photographs, then train an image recognition system capable of extracting features from the image (supervised learning) and judging whether it is low or high quality | Photography and by extension painting | 200 features derived from comments on DPChallenge. The main attributes with success on rating images, though, were related to lighting, color and composition rather than semiotic factors | No mention of the human |
| Wenshan Wang, Su Yang, Weishan Zhang, Jiulong Zhang | 2018 | Neural Aesthetic Image Reviewer | Produce an image aesthetic rater that is also capable of producing natural language reviews to provide insight into the reasoning behind the rating. Uses DPChallenge ratings and comments as ground truths | Photography | The point is not to produce a list of features that make an image beautiful. Instead, features are drawn out from the corpus of reviews that amateur users have produced "in the wild" about these images | No mention of the human beyond the claim that we need a system that reflects how human intelligence works, i.e. by articulating "aesthetic insights" with natural language. Human intelligence then more or less emerges from the spontaneous content generated online by users |

1. Virilio, Paul. L'accident originel. Paris: Galilée, 2005, p. 115.
2. Parry, Ross. Recoding the Museum: Digital Heritage and the Technologies of Change. London: Routledge, 2007.
3. Everypixel Journal. "People Are Creating an Average of 34 Million Images Per Day. Statistics for 2024." https://journal.everypixel.com/ai-image-statistics (accessed 30th January 2025).
4. Thiel, Sonja, and Johannes C. Bernhardt, eds. AI in Museums: Reflections, Perspectives and Applications. Berlin: transcript Verlag, 2023.
5. Schuhmann, Christoph, Romain Beaumont, Richard Vencu, Cade Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, et al. "LAION-5B: An Open Large-Scale Dataset for Training Next Generation Image-Text Models." In 36th Conference on Neural Information Processing Systems (NeurIPS 2022), Track on Datasets and Benchmarks. arXiv, 2022.
6. Vera Nieto, Daniel, Luigi Celona, and Clara Fernandez-Labrador. "Understanding Aesthetics with Language: A Photo Critique Dataset for Aesthetic Assessment." In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023.
7. Maleve, Nicolas, and Katrina Sluis. "The Photographic Pipeline of Machine Vision; or, Machine Vision's Latent Photographic Theory." Critical AI (2023).
8. Palmer, D., and K. Sluis. "The Automation of Style: Seeing Photographically in Generative AI." Media Theory 8, no. 1 (2024): 159–184. https://journalcontent.mediatheoryjournal.org/index.php/mt/article/view/1072
9. Wasielewski, Amanda. Computational Formalism: Art History and Machine Learning. Cambridge, MA: MIT Press, 2023.
10. Balshaw, Maria. Gathering of Strangers: Why Museums Matter. London: Tate Publishing, 2024.

Annex 1 References

Datta, Ritendra, Dhiraj Joshi, Jia Li, and James Z. Wang. "Studying Aesthetics in Photographic Images Using a Computational Approach." In Computer Vision – ECCV 2006: 9th European Conference on Computer Vision, 288-301. Graz, Austria: Springer, 2006.

Dhar, Sagnik, Vicente Ordonez, and Tamara L. Berg. "High Level Describable Attributes for Predicting Aesthetics and Interestingness." In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, 1657-1664. Providence, RI: IEEE, 2011.

Kong, Kuang-Yu, Gao, Yang, Xu, Timothy M., and Jing, Xuan. "Understanding Aesthetics with Language: A Photo Critique Dataset for Aesthetic Assessment." IEEE/CVF Conference on Computer Vision and Pattern Recognition (2022): 2984-2993.

Li, Congcong, Guangyao Zhai, and Tianyu Liu. "Aesthetic Assessment of Paintings Based on Visual Balance." IEEE Transactions on Multimedia 21, no. 10 (2019): 2475-2487.

Marchesotti, Luca, Florent Perronnin, and Nicu Sebe. "Will the Machine Like Your Image? Automatic Assessment of Beauty in Images with Machine Learning Techniques." Paper presented at the First International Workshop on Social Signal Processing, Amsterdam, The Netherlands, 2009.

Nishiyama, Masashi, Takahiro Okabe, Imari Sato, and Yoichi Sato. "Aesthetic Quality Classification of Photographs Based on Color Harmony." In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, 33-40. Providence, RI: IEEE, 2011.

Park, Tae-Suh, and Byoung-Tak Zhang. "Consensus Analysis and Modeling of Visual Aesthetic Perception." IEEE Transactions on Systems, Man, and Cybernetics: Systems 49, no. 8 (2019): 1626-1636.

Tang, Xiaoou, Wei Luo, and Xiaokang Wang. "Content-Based Photo Quality Assessment." IEEE Transactions on Multimedia 13, no. 4 (2011): 589-602.