LoRA

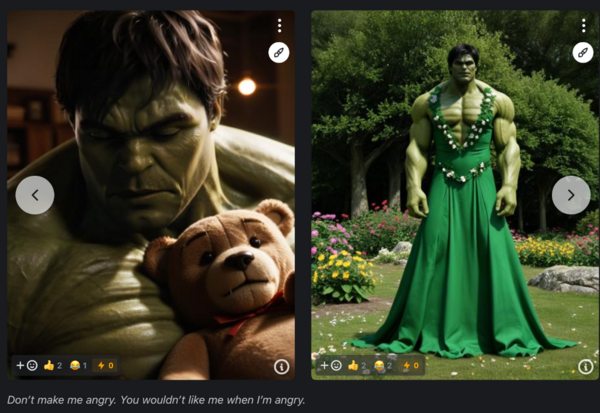

On his personal page on the CivitAI website, the user BigHeadTF promotes his recent creation, a small model called The Incredible Hulk (2008). Compared to earlier movies of the Hulk, the 2008 version shows a tormented Bruce Banner who transforms into a green creature with "detailed musculature, dark green skin, and an almost tragic sense of isolation". The model helps generate characters resembling this iconic version of Hulk in new images.

To demonstrate the capabilities of his model, BigHeadTF has selected a few pictures of his own. Hulk is depicted by turns as cajoling a teddy bear or crossdressing as Shrek's Princess Fiona. The images play with the contrast between Hulk's overblown virility and childlike or feminine connotations. They demonstrate the model's ability to expand the hero's universe into other registers or fictional worlds. The Incredible Hulk (2008) doesn't just faithfully reproduce existing images of Hulk; it also opens new avenues for creation and new combinations for the green hero.

This blend of pop and remix culture, which thrives on the blurring of boundaries between genres, infuses a large number of creations made with generative AI. However, what distinguishes BigHeadTF is that he shares not only images but also the software component that makes his images distinctive. The model he distributes on his page is called a LoRA. The most famous models, such as Stable Diffusion or Flux, are general-purpose. These 'base' or 'foundation' models can generate images in many styles and handle a huge variety of prompts. But they may show limitations when a user wants a specific output, such as a particular genre of manga or a style that emulates black-and-white film noir, or when an improvement is needed for certain details (specific hand positions, legible text, etc.). This is where LoRAs come in. A LoRA is a smaller model created with a technique that makes it possible to improve the performance of a base model on a given task.

What is a LoRA?

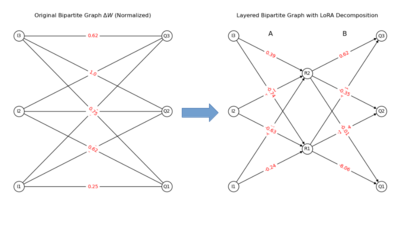

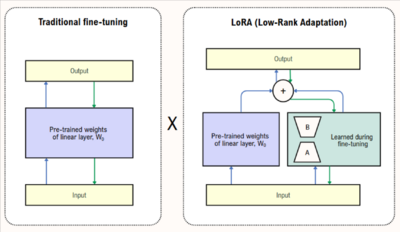

LoRA, short for Low-Rank Adaptation, was initially developed for Large Language Models (LLMs). Technically, a LoRA freezes an existing model and inserts a small number of additional weights to adjust the model's behaviour to a particular need. Instead of a full retraining of the model, LoRAs only require the training of the weights that have been inserted. LoRAs are therefore quite lightweight while still able to leverage the capabilities of larger models. Users equipped with a consumer-grade GPU can train their own LoRAs reasonably fast (on a Mac M3, a LoRA can be produced in 30 minutes). LoRAs are quite popular within communities of amateurs and developers alike. At the time of writing, the AI platform Hugging Face lists 71,312 LoRAs.
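To make the idea of "inserted weights" more concrete, here is a minimal sketch in plain Python. It assumes the usual low-rank formulation, in which a frozen weight matrix W is complemented by two small trainable matrices B and A, so that the effective weight is W + (alpha / r) · B·A. The function names, the toy dimensions and the initialisation values are illustrative assumptions, not the code of any particular library.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w_frozen, lora_a, lora_b, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying W itself."""
    delta = matmul(lora_b, lora_a)  # (d_out x rank) @ (rank x d_in) -> d_out x d_in
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w_frozen, delta)]

def count_params(d_out, d_in, rank):
    """Compare the size of a full weight matrix with its low-rank adapter."""
    full = d_out * d_in            # weights touched by a full fine-tune
    lora = rank * (d_out + d_in)   # weights in B (d_out x rank) and A (rank x d_in)
    return full, lora

random.seed(0)
d_out, d_in, rank, alpha = 4, 4, 2, 2.0  # toy sizes, chosen only for illustration

# The base model's weights: frozen, never updated during LoRA training.
w_frozen = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# The inserted weights: A starts as small random values, B as zeros,
# so the adapted model initially behaves exactly like the base model.
lora_a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
lora_b = [[0.0] * rank for _ in range(d_out)]

w_adapted = lora_effective_weight(w_frozen, lora_a, lora_b, alpha, rank)
print(w_adapted == w_frozen)        # True: nothing has been trained yet
print(count_params(768, 768, 8))    # (589824, 12288)
```

For a hypothetical 768 × 768 layer with rank 8, the adapter holds 12,288 trainable weights against 589,824 in the frozen matrix, roughly 2%. This is the sense in which a LoRA is lightweight: only B and A are trained and distributed, while the base model stays untouched.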

What is the network that sustains this object?

The process of LoRA training is very similar to training a model, but at a different scale. Even if it requires dramatically less compute, it still involves the same kind of highly complex technical decisions. Various layers of software libraries tame this complexity. A highly skilled user can train a LoRA locally with a series of scripts like kohya_ss and pore over the vertiginous list of options. Platforms like Hugging Face distribute software libraries (peft) that abstract away the complexity of integrating components such as LoRAs into the AI generation pipeline. And for those who don't want to fiddle with code or lack access to a local GPU, LoRA training is offered by websites such as Runway ML, Eden AI, Hugging Face or CivitAI under different pricing schemes.

LoRA as a contact zone or interface between communities with different expertise and interests.

'Making a LoRA is like baking a cake', says a widely read tutorial, 'a lot of preparation, and then letting it bake. If you didn't properly make the preparations, it will probably be inedible.' To guide the wannabe LoRA creator in their journey, a wealth of tutorials and documentation in various forms is available from sources such as subreddits, Discord channels, YouTube videos, forums and the platforms that release the code or offer the training and hosting services. They are diverse in tone and offer varying forms of expertise. A significant portion of this documentation effort consists of code snippets, detailed explanations of parameters and options, bug reports, detailed instructions for the installation of software, and tests of hardware compatibility. They are written by professionals, hackers, amateurs and newbies with access to very different infrastructure: some users take unlimited access to compute for granted, while others struggle with a local installation. This diversity reflects the position of LoRAs in the AI ecosystem: between expertise and informed amateurism, and between highly resource-hungry and consumer-grade technology. Whereas foundation model training remains in the hands of a (happy) few, LoRA training opens up a perspective of democratization of the means of production for those who have time, persistence and a small capital to invest.

- Free software libraries and apps

- Tutorials and documentations

- https://education.civitai.com/lora-training-glossary/

- https://civitai.com/articles/4/make-your-own-loras-easy-and-free

- https://civitai.com/articles/8310/lora-training-using-illustrious-report-by-a-beginner "Since Illustrious learned on danbooru, she has memorized many of the Art Styles of the artists who have contributed to danbooru. Therefore, it is better to check if it is already learned Art Style you want to resemble, so that you do not have to waste your time. Note that I wasted several hours trying to create the Art Style of my favorite doujinshi and eroge illustrators"

Curation as an operational practice

There is more to LoRA than the technicalities of installing libraries and training. LoRAs are objects of curation. Many tutorials begin with a discussion of dataset curation. Indeed, if a user decides to embark on the adventure of creating a LoRA, the reason is that a model fails to generate convincing images for a given subject or style. The remedy is to create a dataset that provides better samples for a given character, object or genre. Fans, artists and amateurs produce an abundant literature on the various questions raised by dataset curation: the identification of sources, the selection of images (criteria of quality, diversity, etc.), the annotation (tagging), and the scale (LoRAs can be trained on datasets containing as few as one image or on collections of thousands of images).
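The curation questions listed above (selection, tagging, scale) can be made tangible with a small audit script. The sketch below assumes a convention found in many training tutorials, where each image sits next to a same-named .txt file containing comma-separated tags; the function name `audit_dataset`, the list of extensions and the folder layout are hypothetical and would need adapting to a given training tool.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to the dataset at hand.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}

def audit_dataset(folder):
    """Summarise a folder of images paired with comma-separated tag files.

    Returns the number of images, the images lacking a caption file,
    and how often each tag occurs across the captions.
    """
    folder = Path(folder)
    report = {"images": 0, "missing_captions": [], "tag_counts": {}}
    for image in sorted(folder.iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip caption files and anything that is not an image
        report["images"] += 1
        caption = image.with_suffix(".txt")  # assumed pairing: hulk_01.png -> hulk_01.txt
        if not caption.exists():
            report["missing_captions"].append(image.name)
            continue
        for tag in caption.read_text(encoding="utf-8").split(","):
            tag = tag.strip()
            if tag:
                report["tag_counts"][tag] = report["tag_counts"].get(tag, 0) + 1
    return report
```

Calling `audit_dataset` on a dataset folder gives a quick view of its scale and of the vocabulary used to describe it: untagged images and tags that appear only once are typical signs that the curation work is unfinished.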

- Technical communities and Manga fans

- Dependency on base models: "This LoRa model was designed to generate images that look more 'real' and less 'artificially AI-generated by FLUX'. It achieves this by making the natural and artificial lighting look more real and making the bokeh less strong. It also adds details to every part of the image, including skin, eyes, hair, and foliage. It also aims to reduce somewhat the common 'FLUX chin and skin' and other such issues." https://civitai.com/models/970862/amateur-snapshot-photo-style-lora-flux

How does it evolve through time?

- From the Microsoft lab to platforms and informed amateurs, diversification of offer

- Expansion of the image generation pipeline

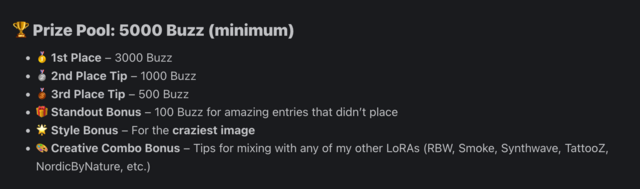

How does it create value? Or decrease / affect value?

- Adds value to the base model. Combined with the LoRA, its capabilities are expanded

- Different forms of value creation -> cultural

- Huge popularity, there are 66,846 LoRAs available on Hugging Face https://huggingface.co/models?sort=trending&search=lora

- The Mass-Produced LoRAs of Civitai https://civitai.com/articles/10068/the-mass-produced-loras-of-civitai

- 🌟 Do you want your own LoRA created by me? 🌟 https://civitai.com/articles/13480/do-you-want-your-own-lora-created-by-me

- Bounties and LoRAs https://civitai.com/articles/16214/new-bounties-and-loras

What is its place/role in techno cultural strategies?

- Curation, classification, defining styles

- Demystify the idea that you can generate anything

- Exploiting the bias, reversing the problem, work with it

- Conceptual labour

- Sharing

- Visibility in communities

- Needs are defined bottom-up

How does it relate to autonomous infrastructure?

- Regain control over the training, re-appropriation of the model via fine-tuning

- (Partial) Decentralization of model production

- The question of the means of production

- Ambivalence

Images