Common contexts

Devised by Nicolas Malevé

One-liner

Exploring the problem of classification in image datasets

Learning Objectives

- To sensitize the participants to the complexity of applying categories to images.

- To gain a sense of how computer scientists think about “context”.

Keywords

classification | image | keywords | context

Main image

Equipment/Materials Needed:

- Interactive whiteboard, digital projector

- Printed copies of the grids (see main image)

- Each participant needs access to a computer or iPad; one device shared between two or three participants also works.

Approximate Duration:

40 minutes

Activity:

1. Briefly introduce the COCO (Common Objects in Context) dataset. For a machine to identify an object, it needs to learn categories of objects, and it also needs a sense of context. To this end, the scientists behind COCO have been "gathering images of complex everyday scenes containing common objects in their natural context". (Here we presuppose that the participants already have an idea of what a dataset is.)

2. Briefly discuss the problem. Computer scientists created COCO to teach machines that certain objects are more likely to appear together in an image. The teacher can add more information from the website https://cocodataset.org/#home

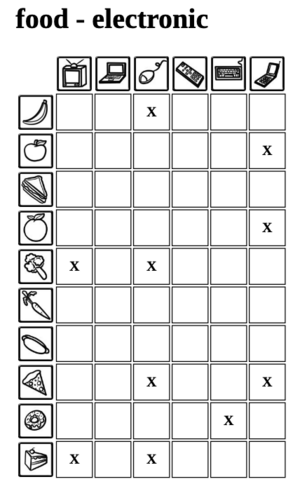

3. The teacher distributes the grid images to the participants (see above, or more grid samples at the bottom of this document). A grid represents the intersection of two categories. For instance, in this case it shows all the elements of the category electronic (first row: TV, computer, mouse, etc.) and all the elements of the category food (first column: banana, apple, sandwich, etc.).

4. Using the search engine of the COCO website, search for the combinations of terms that are marked by a cross in the grid. For instance, the first cross indicates the combination of banana and computer mouse. In pairs, search for these two terms together in the search engine and observe the results.

Prompts for discussion could be displayed:

Representation and identity:

- Are these objects familiar to you? Do you think some of them should be included? Do you eat different food products?

- What do you think about these images? Do the kitchens look like yours? In which country were they taken?

Provenance:

- Do you think these images have been taken recently? Where do you think they come from? Where would you take recent images from?

Image within image:

- Often two terms are attached to the same image even though one of them does not depict a real object in the scene. For instance, we can find a mouse and a giraffe in the same image because the giraffe appears on a poster hanging next to a computer. What do you think about that? Do you think a real giraffe and a real computer mouse can easily be found together? In which circumstances?

5. Feed back to the whole class. Ask pairs to share interesting results, both similarities and differences.

Extension Activity:

6. Propose a new category with its icon and look for images online that fit that category.

7. Compare and discuss the selections. What have you included and what have you excluded?

Notes

This activity is best conducted after a general intro to computer vision and datasets.
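
For teachers who want to show how the crosses in a grid could be derived from annotation data, here is a minimal sketch in Python. It assumes annotations in the JSON layout that COCO publishes (a `categories` list with `name` and `supercategory`, and an `annotations` list linking an `image_id` to a `category_id`). The sample records and the function name `cooccurrence_grid` are invented for illustration; this is not necessarily how the grids for this activity were produced.

```python
from collections import Counter
from itertools import product

# Tiny made-up sample in the COCO annotation layout (NOT real COCO data).
sample = {
    "categories": [
        {"id": 1, "name": "banana", "supercategory": "food"},
        {"id": 2, "name": "apple", "supercategory": "food"},
        {"id": 3, "name": "tv", "supercategory": "electronic"},
        {"id": 4, "name": "mouse", "supercategory": "electronic"},
    ],
    "annotations": [
        {"image_id": 10, "category_id": 1},
        {"image_id": 10, "category_id": 4},
        {"image_id": 11, "category_id": 2},
        {"image_id": 11, "category_id": 3},
        {"image_id": 11, "category_id": 4},
        {"image_id": 12, "category_id": 1},
    ],
}


def cooccurrence_grid(data, row_super, col_super):
    """Count images in which a row category and a column category both appear."""
    names = {c["id"]: c["name"] for c in data["categories"]}
    supers = {c["id"]: c["supercategory"] for c in data["categories"]}

    # Collect the set of category ids present in each image.
    per_image = {}
    for ann in data["annotations"]:
        per_image.setdefault(ann["image_id"], set()).add(ann["category_id"])

    # For every image, count each (row category, column category) pair once.
    counts = Counter()
    for cats in per_image.values():
        rows = [c for c in cats if supers[c] == row_super]
        cols = [c for c in cats if supers[c] == col_super]
        for r, c in product(rows, cols):
            counts[(names[r], names[c])] += 1
    return counts


# Rows are food items (first column of the grid), columns are electronics.
grid = cooccurrence_grid(sample, "food", "electronic")
for (food, device), n in sorted(grid.items()):
    print(f"{food} + {device}: {n} image(s)")
```

Any pair with a count above zero would correspond to a cross in the grid. Pairs that never share an image, such as banana and tv in this sample, leave the cell empty.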

Download this Activity

I already have a series of PDFs with pairs of categories that can be distributed with the exercise.