Prompt

In a nutshell, a prompt is a string of words meant to guide an image generator in the creation of an image. In our guided tour, this object belongs to what we call pixel space. In theory, a user who writes a prompt need not know anything about the machinery involved in the image generation process. In practice, as we will see, prompting also resonates with other layers of the AI image generation ecosystem.

What is the network that sustains this object?

Prompts can be shared or kept private, but searching for prompts in any search engine yields an impressive number of results. Among AI image creators, a prompt is an object of exchange, a source of inspiration and a means of reproduction. An economy of prompt sharing has developed, encompassing lists of best prompts, tutorials and demos. On CivitAI, users post images together with the prompts they used to generate them, encouraging others to try them out.

How does it evolve through time?

On the technical side, the authors of the Weskill blog identify 2017 as a watershed moment, marked by the paper “Attention Is All You Need”, which introduced the transformer architecture. They associate this turning point with the zero-shot prompting technique: "You supply only the instruction, relying on the model’s pre‑training to handle the task." [2] The complexity of generating text or images is abstracted away from the user and handled by a huge computing infrastructure operating behind the scenes. In this respect, prompt-based generators break with earlier experiments with GANs, which remained confined to a technically skilled audience, and they hold a promise of democratization in terms of accessibility and simplicity. They also benefit from the evolution of text generation models. For instance, prompting the recent Flux models differs from prompting earlier, more rudimentary Stable Diffusion models, as Flux integrates advances in LLMs that bring a more refined semantic understanding of the prompt. For users, this translates into a shift from writing lists of tags to writing descriptions in natural language.

How does it create value? Or decrease / affect value?

Prompt adherence, a model's ability to respond coherently to prompts, is a major criterion for evaluating its quality. Yet prompt adherence can be understood in different ways. The interfaces designed for prompting oscillate between two opposite poles, reflecting diverging tendencies in what users value in AI systems: a desire for simplicity ("just type a few words and the magic happens") and a desire for control. In the first case, the philosophy is to ask as little as possible of the user. For example, early ChatGPT offered two parameters through the API: prompt and temperature. With only a few words, users get what they supposedly want, and the system has to make up for everything that is missing. This apparent simplicity relies on "prompt augmentation" (the automatic amplification of the prompt) on the server side, as well as on many implicit assumptions. At the opposite end of the spectrum, in interfaces like ArtBot, the prompt is surrounded by a vertiginous list of options, and the user must make every choice explicitly. Here the user is treated as an expert. Prompt expansion is visible to them, and the interface provides tools to improve the prompt and add context.

What is its place/role in techno cultural strategies?

By interpreting the prompt, the system supposedly "reads" what is in the user's mind. Interpreting the prompt involves much more than a literal translation of a string of words into pixels: it means interpreting the meaning of these words, with all the cultural complexity this entails. As prompts were historically limited in size, this work of interpretation was performed on the basis of a very minimal description, often with a syntax reduced to a comma-separated list of tags. Even now that longer descriptions are possible, the model still has to fill in the blanks. As the model tries to make do, it inevitably reveals its own biases. If a prompt mentions an interior, the model generates an image of a house that reflects the dominant trends in its training data. Prompting is therefore half ideation and half search: the visualisation of an idea (what the user wants to see) and the visualisation of the model's worldview. Through regular prompting, users come to understand that each model has its own singularity: it is trained on particular data, and it synthesizes these data in its own way. Over time, users develop a feel for this singularity. They elaborate their own semantics to work around perceived limitations and devise targeted keywords to which a particular model responds.

One strategy adopted by users who want to expose a model's limitations, faults or biases is what we could call reflexive prompting. For instance, here are two images generated with the model EpicRealism from a prompt that inverts traditional gender roles by asking for a picture of a man washing the dishes. The surreal result testifies to the degree to which the model has internalized the gendered division of household labour.

-

Image generated with the prompt Man washing the dishes

-

Image generated with the prompt Man washing the dishes

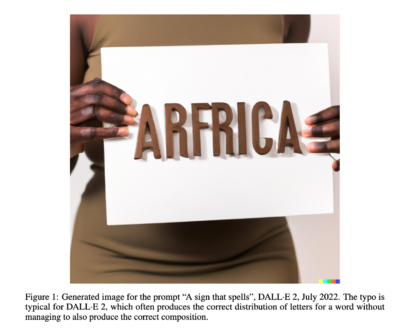

A more elaborate example of reflexive prompting is discussed in "A Sign That Spells", where Fabian Offert and Thao Phan analyze an experiment conducted by Andy Baio after the announcement of a new DALL-E release intended to fix many thorny issues, including racial bias. [3] Baio prompted DALL-E with an incomplete sentence such as "a sign that spells", without specifying what was to be spelled. The image generator produced images of people holding signs bearing words such as "woman", "black" or "Africa". The experiment demonstrated that the new DALL-E release amounted to a "quick fix": instead of addressing the model's bias, DALL-E simply appended words to the user's prompt to steer the result towards a more "diverse" output. As Offert and Phan put it, Baio's experiment revealed that OpenAI was not improving the model, but instead fixing the user.
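The kind of "quick fix" Baio's experiment exposed can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions. The trigger words, the appended terms and the rule for when to add them are all hypothetical, since OpenAI has not published how its augmentation worked. What the sketch shows is why an incomplete sentence gives the trick away: the hidden word is appended at the end of the user's text, exactly where "a sign that spells" expects the content of the sign.

```python
import random

# Hypothetical trigger words: only prompts that seem to depict people are
# augmented. The real conditions used by OpenAI are not public.
PERSON_WORDS = {"person", "people", "man", "woman"}

# Hypothetical appended terms, borrowed from the words Baio saw on the signs.
DIVERSITY_TERMS = ["woman", "black", "Africa"]


def augment_prompt(user_prompt: str, rng: random.Random) -> str:
    """Return the prompt the model actually receives (hidden from the user)."""
    words = {w.strip(",.").lower() for w in user_prompt.split()}
    if words & PERSON_WORDS:
        # The extra term is silently appended after the user's own words.
        return f"{user_prompt} {rng.choice(DIVERSITY_TERMS)}"
    return user_prompt


if __name__ == "__main__":
    rng = random.Random()
    # The appended word lands where the text of the sign is expected.
    print(augment_prompt("a person holding a sign that spells", rng))
    # Prompts that mention no people pass through unchanged.
    print(augment_prompt("a bowl of fruit", rng))
```

The user only ever sees their own prompt, while the model renders the augmented one. With an open-ended sentence, the appended word has nowhere to go but onto the sign.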

This experiment shows that nobody prompts alone. Prompts are rarely interpreted directly by the model: they go through a series of checks before being rendered, because they are treated as sensitive and potentially offensive. This has motivated various forms of censorship on mainstream platforms, which in turn have spurred many strategies for gaming the model. In a workshop conducted by the artist Ada Ada Ada, they explained the poetic trick devised by users trying to bypass Midjourney's censorship in order to generate an image of two Vikings kissing on the mouth. [4] After many unsuccessful attempts, these users circumvented the censorship mechanism with the prompt "The viking telling a secret in the mouth of another".
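A deliberately naive filter can illustrate why a metaphor succeeds where a literal description fails. The sketch below assumes a simple keyword blocklist. The blocked words are hypothetical, and Midjourney's actual moderation, which may combine word lists with other checks, is not documented here. The point is structural: the filter matches surface words, while the image model interprets meaning, so a paraphrase can carry the same scene past the filter.

```python
import re

# Hypothetical blocklist; the words a real platform blocks are an assumption.
BLOCKED_WORDS = {"kiss", "kisses", "kissing"}


def is_blocked(prompt: str) -> bool:
    """Reject the prompt if any of its words appears in the blocklist."""
    tokens = re.findall(r"[a-z]+", prompt.lower())
    return any(token in BLOCKED_WORDS for token in tokens)


if __name__ == "__main__":
    for prompt in [
        "two vikings kissing on the mouth",
        "The viking telling a secret in the mouth of another",
    ]:
        print(prompt, "->", "blocked" if is_blocked(prompt) else "accepted")
```

Run as a script, this rejects the literal description and accepts the metaphor, even though both ask for the same image.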

How does it relate to autonomous infrastructure?

One strong motivation to adopt an autonomous infrastructure is to avoid censorship. Even if models are carefully trained to stay in the fold, working with a local model allows users to do away with many layers of platform censorship. For better or for worse, prompting the model locally means deciding individually what to censor. Interestingly, censorship is not absent from distributed infrastructures such as Stable Horde. Their developers go to great lengths to block prompts that would generate child sexual abuse material (CSAM). [5] Indeed, how to defend one's values while remaining open to plural uses of the platform remains a difficult techno-political question, even on alternative platforms. The prompt is one of the components of the image generation pipeline that reveal this issue most directly.

[1] Heaven, Will Douglas. “This Avocado Armchair Could Be the Future of AI.” MIT Technology Review, January 5, 2021. https://www.technologyreview.com/2021/01/05/1015754/avocado-armchair-future-ai-openai-deep-learning-nlp-gpt3-computer-vision-common-sense/.

[2] Weskill. “History and Evolution of Prompt Engineering.” Weskill, April 23, 2025. https://blog.weskill.org/2025/04/history-and-evolution-of-prompt.html.

[3] Offert, Fabian, and Thao Phan. “A Sign That Spells: DALL-E 2, Invisual Images and The Racial Politics of Feature Space.” 2022. https://arxiv.org/abs/2211.06323.

[4] AIIM. “AIIM Workshop: Ada Ada Ada.” AIIM - Centre for Aesthetics of AI Images, June 1, 2025. https://cc.au.dk/en/aiim/events/view/artikel/aiim-workshop-ada-ada-ada.

[5] DB0. “AI-Powered Anti-CSAM Filter for Stable Diffusion.” A Division by Zer0, October 3, 2023. https://dbzer0.com/blog/ai-powered-anti-csam-filter-for-stable-diffusion/.