Diffusion

Reference to Latent space.

What is the network that sustains this object?

Diffusion is treated here not as a single object but as a network of meanings that binds together a technique from physics, an algorithm for image generation, an operative metaphor relevant to cultural analysis and, by extension, a company and its founder with roots in hedge fund investment.

In her text Diffused Seeing Joanna Zylinska aptly captures the multivalence of the term:

... the incorporation of ‘diffusion’ as both a technical and rhetorical device into many generative models is indicative of a wider tendency to build permeability and instability not only into those models’ technical infrastructures but also into our wider data and image ecologies. Technically, ‘diffusion’ is a computational process that involves iteratively removing ‘noise’ from an image, a series of mathematical procedures that leads to the production of another image. Rhetorically, ‘diffusion’ operates as a performative metaphor – one that frames and projects our understanding of generative models, their operations and their outputs. [1]

- How does it move from person to person, person to software, to platform, what things are attached to it (visual culture)

- Networks of attachments

- How does it relate / sustain a collective? (human + non-human)

From "Maps": _Secondly, there is a 'latent space'. Image latency refers to the space in between the capture of images in datasets and the generation of new images. It is an algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to de-code them back into images (aka 'image diffusion')._

How does it evolve through time?

Evolution of size

How does it create value? Or decrease / affect value?

With its material form: the weights. Gains value with adoption.

Gains value by comparison. Ability to do what cannot be done by others or less well.

What is its place/role in techno cultural strategies?

How does it relate to autonomous infrastructure?

The diffusion algorithm

As Russakovsky et al put it:

"In physics, the diffusion phenomenon describes the movement of particles from an area of higher concentration to a lower concentration area till an equilibrium is reached [1]. It represents a stochastic random walk of molecules"

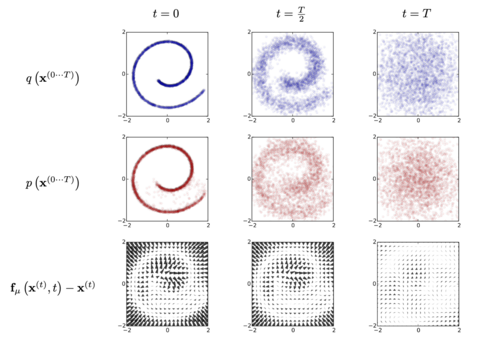
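The physical picture quoted above can be made concrete with a small simulation. The following is only a minimal sketch under stated assumptions: the one-dimensional box, the unit step size and the names `diffuse` and `final_positions` are hypothetical choices made for illustration, not taken from any of the cited texts. All particles start at the same point (maximal concentration) and each takes random steps left or right until they are spread across the box.

```python
import random

def diffuse(num_particles=500, num_steps=500, box=(-10, 10), seed=0):
    """Random walk of particles that all start at the centre of a 1-D box.

    Hypothetical toy model: each particle moves one unit left or right
    per step; a particle that would leave the box stays at the wall.
    """
    rng = random.Random(seed)
    low, high = box
    positions = [0] * num_particles  # area of highest concentration
    for _ in range(num_steps):
        for i in range(num_particles):
            step = rng.choice((-1, 1))
            # Clamp the move so the particle remains inside the box.
            positions[i] = min(high, max(low, positions[i] + step))
    return positions

final_positions = diffuse()
# Number of distinct cells occupied once the particles have spread out.
occupied_cells = len(set(final_positions))
```

At the start a single cell is occupied; after the walk the particles occupy many cells of the box, which is the drift from concentration towards equilibrium that the quotation describes.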

To understand how this idea has been translated into image generation, it is worth looking at the example given by Sohl-Dickstein and colleagues (2015), who authored the seminal paper on diffusion. The authors propose the following experiment: take an image and gradually apply noise to it until it becomes totally noisy; then train an algorithm to “learn” all the steps that have been applied to the image and ask it to apply them in reverse to recover the image (see figure 1). By introducing some movement in the image, the algorithm detects some tendencies in the noise. It then gradually follows and amplifies these tendencies in order to arrive at a point where an image emerges. When the algorithm is able to recreate the original image from the noisy picture, it is said to be able to de-noise. When the algorithm is trained with billions of examples, it becomes able to generate an image from any arbitrary noisy image. The most remarkable aspect of this process is that the algorithm is able to generalise from its training data: it is able to de-noise images that it never “saw” during the training phase.
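The first half of this experiment, the gradual application of noise, can be sketched in a few lines. This is a hedged illustration rather than the authors' implementation: it assumes a Gaussian noising step of the form x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * noise with a constant `beta`, and the toy eight-value "image" and all function names are hypothetical. The reverse, de-noising half requires a trained network and is deliberately left out.

```python
import math
import random

def add_noise_step(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    mix = math.sqrt(beta)
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]

def forward_diffusion(pixels, num_steps=1000, beta=0.01, seed=0):
    """Return every intermediate state, from the clean image to near-pure noise.

    num_steps and beta are assumed values chosen for illustration.
    """
    rng = random.Random(seed)
    trajectory = [list(pixels)]
    for _ in range(num_steps):
        trajectory.append(add_noise_step(trajectory[-1], beta, rng))
    return trajectory

def signal_fraction(num_steps, beta):
    """Share of the original pixel amplitude that survives num_steps steps."""
    return math.sqrt((1.0 - beta) ** num_steps)

# Hypothetical stand-in for an image: eight "pixel" values.
toy_image = [1.0, -1.0, 1.0, -1.0, 0.5, -0.5, 0.0, 1.0]
trajectory = forward_diffusion(toy_image)
remaining = signal_fraction(1000, 0.01)
```

With these assumed settings, less than one percent of the original amplitude remains in the last state of `trajectory`. Training then consists in showing a model pairs of neighbouring states so that it can learn to step from the noisier one back to the cleaner one.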

Metaphorically, this can be compared to a process of looking for faces in clouds (or reading signs in tea leaves). We do not immediately see a face in a cumulus, but the faint movement of the mass stimulates our curiosity until we gradually delineate the nascent contours of a shape we can begin to identify. Notice the emphasis on the virtual in the image theory underlying image generation. Noise is the precondition for the generation of any image because it virtually contains all images that can be actualised through a process of decryption or de-noising. To generate an image is not a process of creation but of actualisation (of an image that already exists virtually).

To learn how to generate images, algorithms such as Stable Diffusion or Imagen need to be fed with examples. These images are given to the algorithm one by one. Through its learning phase, the algorithm treats them as one moment of an uninterrupted process of variation, not as singular specimens. At this level, the process of image generation is radically anti-representational. It treats the image as a mere moment (“quelconque”[2]), a variation among many.

- [1] Joanna Zylinska, Diffused Seeing: https://mediatheoryjournal.org/2024/09/30/joanna-zylinska-diffused-seeing/

- [2] For a discussion of the difference between privileged instants and “instants quelconques” see Deleuze’s theory of cinema, in particular https://www.webdeleuze.com/textes/295 (find translation)