Diffusion

Rather than a mere scientific object, diffusion is treated here as a network of meanings that binds together a technique from physics (diffusion), an algorithm for image generation, a model (Stable Diffusion), an operative metaphor relevant to cultural analysis and by extension a company (Stability AI) and its founder with roots in hedge fund investment.

In her text "Diffused Seeing", Joanna Zylinska aptly captures the multivalence of the term:

... the incorporation of ‘diffusion’ as both a technical and rhetorical device into many generative models is indicative of a wider tendency to build permeability and instability not only into those models’ technical infrastructures but also into our wider data and image ecologies. Technically, ‘diffusion’ is a computational process that involves iteratively removing ‘noise’ from an image, a series of mathematical procedures that leads to the production of another image. Rhetorically, ‘diffusion’ operates as a performative metaphor – one that frames and projects our understanding of generative models, their operations and their outputs.[1]

In complement to Zylinska's understanding of diffusion as a term operating at different levels with an emphasis on permeability, we inquire into the dialectical relation that opposes it to stability (as interestingly emphasized in the name Stable Diffusion), where the permeability and instability enclosed in the concept constantly motivate strategies of control, direction, capitalization or democratization that leverage the unstable character of diffusion dynamics.

What is the network that sustains this object?

From physics to AI, the diffusion algorithm

Our first move in this network of meanings is to follow the trajectory of the concept of diffusion from the 19th-century laboratory to the computer lab. Although diffusion had been studied since antiquity, Adolf Fick published the first laws of diffusion, based on his experimental work, in 1855. As Wuhan and Princeton AI researchers Pei et al. put it:

In physics, the diffusion phenomenon describes the movement of particles from an area of higher concentration to a lower concentration area till an equilibrium is reached. It represents a stochastic random walk of molecules.[2]

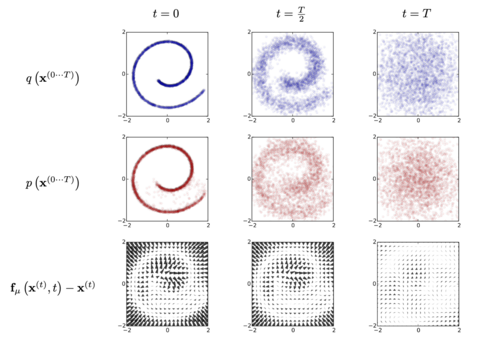
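
The random-walk picture in the quotation above can be made tangible with a small simulation. The following is only a minimal sketch under simplifying assumptions: the particles move on an unbounded one-dimensional line, each step is an unbiased coin flip, and the function name `simulate_random_walk` and its parameters are hypothetical and chosen for illustration. It shows the concentrated starting point dissolving over time: the spread (variance) of the particles grows roughly in proportion to the number of steps, while their average position stays close to where it started.

```python
import random


def simulate_random_walk(num_particles=2000, num_steps=400, seed=0):
    """Unbiased 1D random walk; every particle starts at position 0.

    Returns a list of (step, mean_position, variance) tuples recorded
    every 100 steps. Hypothetical helper, for illustration only.
    """
    rng = random.Random(seed)
    positions = [0] * num_particles
    history = []
    for step in range(1, num_steps + 1):
        # Each particle moves one unit left or right with equal probability.
        positions = [p + rng.choice((-1, 1)) for p in positions]
        if step % 100 == 0:
            mean = sum(positions) / num_particles
            variance = sum((p - mean) ** 2 for p in positions) / num_particles
            history.append((step, mean, variance))
    return history


if __name__ == "__main__":
    for step, mean, variance in simulate_random_walk():
        print(f"step {step:4d}  mean {mean:+.2f}  variance {variance:.1f}")
```

Because the line is unbounded, this toy model never reaches the equilibrium mentioned in the quotation; it only illustrates the stochastic spreading from high to low concentration.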

To understand how this idea has been translated into image generation, it is worth looking at the example given by Sohl-Dickstein and colleagues, who authored the seminal paper on diffusion in image generation.[3] The authors propose the following experiment: take an image and gradually apply noise to it until it becomes totally noisy; then train an algorithm to 'learn' all the steps that have been applied to the image and ask it to apply them in reverse to recover the image (see illustration). By introducing some movement in the image, the algorithm detects some tendencies in the noise. It then gradually follows and amplifies these tendencies in order to arrive at a point where an image emerges. When the algorithm is able to recreate the original image from the noisy picture, it is said to be able to de-noise. When the algorithm is trained with billions of examples, it becomes able to generate an image from any arbitrary noisy image. And the most remarkable aspect of this process is that the algorithm is able to generalise from its training data: it is able to de-noise images that it never “saw” during the phase of training.
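
The noising half of this experiment can be sketched in a few lines. The code below is an illustration under stated assumptions, not the procedure of the paper cited above: it uses the closed-form Gaussian noising rule common in later denoising diffusion work, a linear noise schedule with conventional default values, and a four-value list standing in for an image. All function names are hypothetical. There is no trained network here; `estimate_x0` is handed the true noise, which shows only what a perfect noise predictor would make possible.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule.

    The schedule values are assumed defaults, not taken from the text.
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Noise x0 in one jump: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, predicted_eps, alpha_bar):
    """Invert the noising rule, given an estimate of the added noise."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, predicted_eps)]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = make_alpha_bars()
    image = [0.0, 0.5, 1.0, 0.5]  # toy stand-in for pixel values
    for t in (0, 250, 999):
        noisy, _ = add_noise(image, alpha_bars[t], rng)
        print(t, [round(v, 2) for v in noisy])
```

In an actual model, the true noise is unknown at generation time; a neural network trained on many noised examples supplies the estimate, and the inversion is applied gradually over many steps rather than in one jump.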

Another aspect of diffusion in physics that is important for image generation can be seen at the end of the definition of the concept as stated in Wikipedia (emphasis is ours):

diffusion is the movement of a substance from a region of high concentration to a region of low concentration without bulk motion.[4]

Diffusion doesn't capture the movement of a bounded entity (a bulk, a whole block of content); it is a mode of spreading that flexibly accommodates structure. "Diffusion is the gradual movement/dispersion of concentration within a body with no net movement of matter."[5] This characteristic makes it particularly apt at capturing multi-level relations between image parts without having to identify a source that constrains these relations. It gives it access to an implicit structure. Metaphorically, this can be compared to a process of looking for faces in clouds (or reading signs in tea leaves). We do not immediately see a face in a cumulus, but the faint movement of the mass stimulates our curiosity until we gradually delineate the nascent contours of a shape we can identify.

Stabilising diffusion

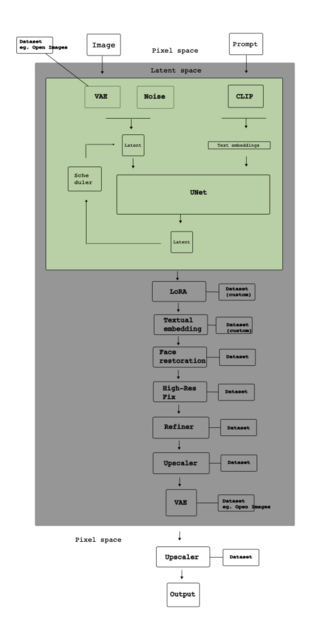

Diffusion as presented by Sohl-Dickstein and colleagues is at the basis of many current models for image generation. However, no user deals directly with diffusion as demonstrated in the paper.[7] It is encapsulated in software, and a whole architecture mediates between the algorithm and its environment (see diagram of the process). For instance, Stable Diffusion is a model that encapsulates the diffusion algorithm and makes it tractable at scale. Rombach et al., the brains behind the Stable Diffusion model, ported the diffusion technique to latent space.[8] Instead of working on pixels, the authors performed the computation on compressed vectors of data and managed to reduce the computational cost of training and inference. They thereby popularised the use of the technique, making it accessible to a larger community of developers, and also added important features to the process of image synthesis:

By introducing cross-attention layers into the model architecture, we turn diffusion models into powerful and flexible generators for general conditioning inputs such as text or bounding boxes and high-resolution synthesis becomes possible in a convolutional manner.[9]

Because of this, diffusion can be guided by text prompts and other forms of conditioning inputs such as images, opening it up to multiple forms of manipulation and use such as inpainting. It stabilises diffusion in the sense that it allows for different forms of control. The diffusion algorithm in itself doesn't contain any guidance. This is an important step in moving the algorithm out of the worlds of GitHub and tech tutorials into a domain where image makers can experiment with it. The pure algorithm cannot move alone.
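
How a text prompt can steer the process may be easier to grasp with a toy version of cross-attention. The sketch below is a simplification under stated assumptions: the vectors are hand-written two-dimensional stand-ins rather than learned embeddings, the learned projection matrices used in real models are omitted, and the function names are hypothetical. It shows only the core mechanism: each image-side vector (query) scores every text-side vector (key), and the scores decide how much of each token's content (value) is mixed into that image region.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention from image-side queries to text tokens.

    Returns (outputs, weights): for each query, the weighted mix of the
    values and the attention weights over the tokens.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Two hypothetical prompt tokens and two image regions (toy numbers).
    token_keys = [[1.0, 0.0], [0.0, 1.0]]
    token_values = [[10.0, 0.0], [0.0, 10.0]]
    region_queries = [[4.0, 0.0], [0.0, 4.0]]
    outputs, weights = cross_attention(region_queries, token_keys, token_values)
    for region, w in enumerate(weights):
        print(f"region {region}: attention over tokens = {[round(x, 3) for x in w]}")
```

In this toy setup, each region attends mostly to the token it is aligned with, which is the sense in which the prompt conditions different parts of the image differently.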

But if diffusion is relatively stabilized in technical terms (input control and infrastructure), its adoption by increasingly large circles of users and developers has contributed to different forms of disruption, for better and for worse: parodies and deepfakes, political satire and revenge porn. Once in circulation, it moves both as a technical product and as images.

Furthermore, rhetorically, it becomes a metaphor within a set of nested metaphors that include the brain as computer, concepts such as 'hallucinations' or deep 'dreams' that respond to a more general cultural condition. As Zylinska notes:

We could perhaps suggest that generative AI produces what could be called an ‘unstable’ or ‘wobbly’ understanding – and a related phenomenon of ‘shaky’ perception. Diffusion [...] can be seen as an imaging template for this model.[10]

Still according to Zylinska, this metaphor posits instability as an organizing concept for the image more generally:

Indeed, it is not just the perception of images but their very constitution that is fundamentally unstable.[11]

As a concept, it is in line with a general condition of instability due to the extensive disruptions brought on by the flows of capital. The wobbly, risky, financial and logistical edifice that supports Stable Diffusion's development testifies to this. The company Stability AI, founded by former hedge fund manager Emad Mostaque, helped finance the transformation of the "technical" product into software available to users and powered by an expensive infrastructure. It also made it possible to sell it as a service. To access large-scale computing facilities, Mostaque raised $101 million in venture capital.[12] His experience in the financial sector helped convince investors and secure the financial base. The investment was sufficient to give Stability a chance to enter the market. Moving from the computer lab to a working infrastructure required grounding the diffusion algorithm in another material environment comprising Amazon servers, the JUWELS Booster supercomputer and tailor-made data centers around the world.[13] This scattered infrastructure corresponds to the global distribution of the company's legal structure: one leg in the UK and one leg in Delaware, the latter offering a welcoming tax environment for companies. Dense networks of investors and servers supplement code. From that perspective, the development of the Stable Diffusion algorithm is inseparable from risk investment. These risks take the concrete form of a long string of controversies and lawsuits, especially for copyright infringement, and the eventual departure of Mostaque from his position as CEO after aggressive press campaigns against his management. Across all its dimensions, the shaky nature of this assemblage mirrors the physical phenomenon Stable Diffusion's models simulate.

In short, stabilising diffusion means attending to a huge range of problems that happen simultaneously and require extremely different skills and competences: designing algorithms, optimizing statistically, identifying faulty GPUs, deciding on batch sizes in training, assessing the impact of different floating-point formats on training stability, securing investment and managing delays in payment, pushing against legal actions and, last but not least, aligning prompts and images.

How does diffusion create value? Or decrease / affect value?

The question of value needs to be addressed at different levels as we have chosen to treat diffusion as a complex of techniques, algorithm, software, metaphors and finance.

First, we can consider diffusion as an object concretised in a material form: the model. The model is at the core of a series of online platforms that monetize access to it. With a subscription fee, users can generate images. Its value stems from the model's ability to generate images in a given style (e.g., Midjourney), with good prompt adherence, and reasonably fast. It is a familiar value form for models: AI as a service that generates revenue and capitalizes on the size of a user base.

As the model is open source, it can also be shared and used in different ways. For instance, users can use the model locally without paying a fee to Stability AI. Alternatively, it can be integrated into peer-to-peer systems of image generation such as Stable Horde or shared installations through non-commercial APIs. In this case, the model gains value with adoption. And as interest grows, users start to build things with it, such as LoRAs, bespoke models, and other forms of conditioning. Through this burgeoning activity, the model's affordances are growing. Its reputation increases as it enters different economies of attention where users gain visibility by tweaking it, or generating 'great art'.

In scientific circles, the model's value is measured by different metrics. Here, the object of necessity that travels across platforms and individual computers becomes an object of interest. What is at stake is a competition for scientific relevance where diffusion is a solution to a series of ongoing intellectual problems. Yet, we should not forget that computer science lives in symbiosis with the field of production and that many scientists are also involved in commercial ventures. For instance, the above-mentioned Robin Rombach gained a scientific reputation that can be evaluated through a citation index, but he was also involved in the company Stability AI. In the constant movement from academic research to production, the ability to experiment emerges as a shared value. This is well captured by Patrick Esser, a lead researcher on diffusion algorithms, who defined the ideal contributor as someone who would “not overanalyze too much” and “just experiment”.[14] The valorization of experimentation even justifies the open source ethos prevalent in the diffusion ecosystem:

“It’s not that we're running out of ideas, we’re mostly running out of time to follow up on them all. By open sourcing our models, there's so many more people available to explore the space of possibilities.” [15]

Finally, if we consider their impact on the currencies of images, diffusion-based algorithms contribute significantly to a decrease in the value of the singular image. Although this trend started earlier and had been diagnosed several times (e.g., Steyerl [16]), the capacity of models to churn out endless visual outputs has accelerated it substantially. As MacKenzie and Munster wrote in their seminal piece "Platform Seeing," the value of the image ensemble (i.e., the model) grows at the expense of the singular image: "images both lose their stability and uniqueness yet gather aggregated force".[17] Their difference in value is implied in the algorithmic training process. To learn how to generate images, algorithms such as Stable Diffusion, Flux, DALL-E or Imagen need to be fed with examples. These images are given to the algorithm one by one. Through its learning phase, the algorithm treats them as moments of an uninterrupted process of variation, not as singular specimens. At this level, the process of image generation is radically anti-representational. It treats the image as a mere moment: a variation among many. Hence, it is the model that gains singularity.

What is its place/role in techno cultural strategies?

As a concept that traverses multiple dimensions of culture and technology, diffusion raises questions about strategies operating on different planes. In that sense, it constitutes an interesting lens to discuss the question of the democratization of generative AI. As a premise, we adopt the view set forth in the paper "Democratization and generative AI image creation: aesthetics, citizenship, and practices" [18] that the relation between genAI and democracy can be reduced neither to one of apocalypse, where artificial intelligence signals the end of democracy, nor to an inevitable move towards a better optimized future where a more egalitarian world emerges out of technical progress. Both democracy and genAI are unaccomplished projects, and both are risky works in progress. Instead of simply lamenting genAI's "use for propaganda, spread of disinformation, perpetuation of discriminatory stereotypes, and challenges to authorship, authenticity, originality", we should see it as an opportunity to situate "the aestheticization of politics within democracy itself".[19] In short, we think that the relation between democracy and genAI should not be framed as one of impact (where democracy as a fully achieved project pre-exists; AI's impact on democracy), but as one where democracy is still to come. And, in the same movement, we should firmly oppose the view that AI is a fully formed entity waiting to be governed, to be democratized. That is, the making of AI should in itself be an experiment in democracy. In this view, both entities inform each other. Diffusion as a transversal concept is a device to identify key elements of this mutual 'enactment'. They pertain to different dimensions of experience, sociality, technology and finance; to different levels of logistics and different scales. The dialectics of diffusion and stability we tried to characterize is therefore marked by loosely coordinated strategies that include (in no particular order):

- providing concrete resources such as the model's weights and source code without fee and under a free license (democracy as equal access to resources)

- producing and disseminating different forms of knowledge about AI: papers, code, tutorials (democratization of knowledge)

- offering different levels of engagement: as a user of a service, as a dataset curator, as a LoRA creator, as a Stable Horde node manager (democratization as increase of participation)

- freedom of use in the sense that the platform's censorship is up for debate or can be bypassed locally (democracy as (individual) freedom of expression and deliberation)

And, more polemically, the dialectics of diffusion and stability have so far taught us how hard it is to do these things under the constraints of the capitalist mode of production and its financial attachments.

[1] Joanna Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” Articles, Media Theory 8, no. 1 (2024): 230, 1.

[2] Yuhan Pei, Ruoyu Wang, Yongqi Yang, Ye Zhu, Olga Russakovsky, and Yu Wu, “SOWing Information: Cultivating Contextual Coherence with MLLMs in Image Generation,” 2024, https://arxiv.org/abs/2411.19182.

[3] Jascha Sohl-Dickstein, Eric A. Weiss, Niru Maheswaranathan, and Surya Ganguli, “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics,” Proceedings of the 32nd International Conference on Machine Learning - Volume 37 (Lille, France), ICML’15, JMLR.org, 2015, 2256–65.

[4] Wikipedia, s.v. “Diffusion,” last modified August 12, 2025, https://en.wikipedia.org/wiki/Diffusion.

[5] “Diffusion.”

[6] Sohl-Dickstein et al., “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.”

[7] Sohl-Dickstein et al., “Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.”

[8] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer, “High-Resolution Image Synthesis with Latent Diffusion Models,” arXiv preprint arXiv:2112.10752, last revised April 13, 2022, https://arxiv.org/abs/2112.10752.

[9] Rombach et al., “High-Resolution Image Synthesis with Latent Diffusion Models,” 1.

[10] Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” 244.

[11] Zylinska, “Diffused Seeing: The Epistemological Challenge of Generative AI,” 247.

[12] Kyle Wiggers, “Stability AI, the Startup behind Stable Diffusion, Raises $101M,” TechCrunch, October 17, 2022, https://techcrunch.com/2022/10/17/stability-ai-the-startup-behind-stable-diffusion-raises-101m/.

[13] Jülich Supercomputing Centre, JUWELS Booster Overview, accessed August 12, 2025, https://apps.fz-juelich.de/jsc/hps/juwels/booster-overview.html.

[14] Sophia Jennings, “The Research Origins of Stable Diffusion,” Runway Research, May 10, 2022, https://research.runwayml.com/the-research-origins-of-stable-difussion.

[15] Jennings, “The Research Origins of Stable Diffusion.”

[16] Hito Steyerl, “In Defense of the Poor Image,” in The Wretched Of The Screen (Sternberg Press, 2012).

[17] Adrian MacKenzie and Anna Munster, “Platform Seeing: Image Ensembles and Their Invisualities,” Theory, Culture & Society 36, no. 5 (2019): 3–22, https://doi.org/10.1177/0263276419847508.

[18] Maja Bak Herrie et al., “Democratization and Generative AI Image Creation: Aesthetics, Citizenship, and Practices,” AI & SOCIETY 40, no. 5 (2025): 3495–507, https://doi.org/10.1007/s00146-024-02102-y.

[19] Bak Herrie et al., “Democratization and Generative AI Image Creation: Aesthetics, Citizenship, and Practices,” 3497.
