Maps: Difference between revisions


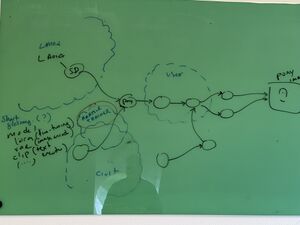


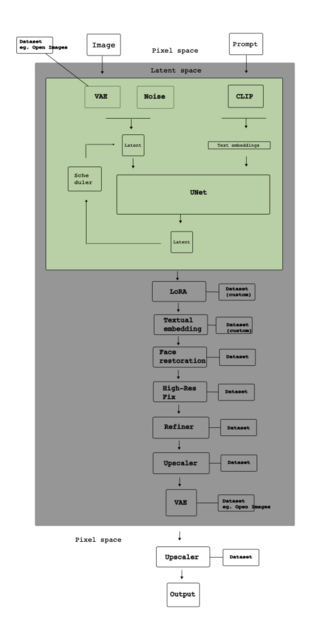

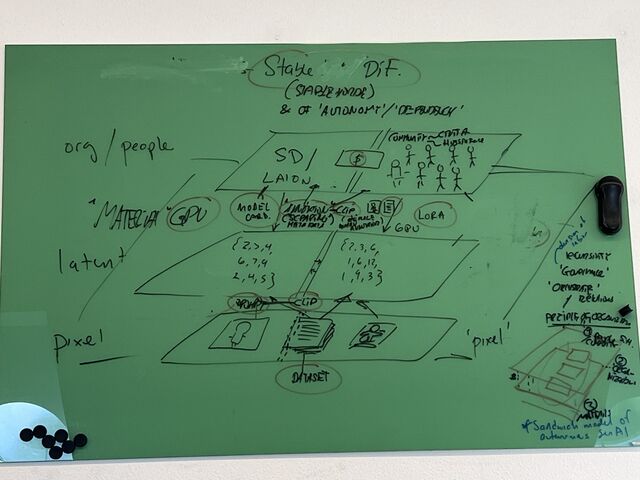


[[File:Diagram-process-stable-diffusion-002.png|none|thumb|640x640px|A diagram of AI image generation separating 'pixel space' from 'latent space' - what you see, and what cannot be seen.]]

[[File:Map objects and planes.jpg|none|thumb|640x640px|This map reflects the separation of pixel space from latent space, and adds a third layer of objects that are visible, but not seen by typical users. Underneath the three layers one finds a second plane of material infrastructures (such as processing power and electricity), and one can potentially also add more planes, such as regulation or governance of AI.]]


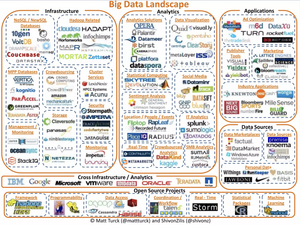


[[File:Big Data Landscape 2012.png|thumb|The corporate landscape of Big Data in 2012, by Matt Turck and Shivon Zilis.]]

Revision as of 11:03, 2 July 2025

Mapping objects of interest and necessity

If one considers generative AI as an object, there is also a world of ‘para objects’ (surrounding AI and shaping its reception and interpretation) in the form of maps or diagrams of AI. They are drawn by both amateurs and professionals who need to represent processes that are otherwise sealed off in technical systems, but more generally reflect a need for abstraction – a need for conceptual models of how generative AI functions. However, as Alfred Korzybski famously put it, one should not confuse the map with the territory: the map is not how reality is, but a representation of reality [reference].

Following on from this, mapping the objects of interest in autonomous AI image creation is not to be understood as a map of what it 'really is'. Rather, it is a map of encounters of objects; encounters that can be documented and catalogued, but also positioned in a spatial dimension – representing a 'guided tour', and an experience of what objects are called, how they look, and how they connect to other objects, communities or underlying infrastructures (see also Objects of interest and necessity). Perhaps the map can even be used by others to navigate autonomous generative AI and create their own experiences. It is important to note, however, that what is particular about the map of this catalogue of objects of interest and necessity is that it purely maps autonomous and decentralised generative AI. It is therefore not to be considered a plane that necessarily reflects what happens in, for instance, OpenAI's or Google's generative AI systems. The map is, in other words, not a map of what is otherwise concealed in generative AI. In fact, we know very little about how to navigate proprietary systems, and one might wonder whether a map of their relations and dependencies even exists.

A map of autonomous and decentralised AI image generation

To enter the world of autonomous AI image generation, a map that separates the territory of ‘pixel space’ from that of ‘latent space’ – the objects you see from those that cannot be seen – is fundamental. In pixel space, you would find both the images that are generated from a prompt (a textual input) and the many images that are used to compile the textually annotated data set used for training the image generation models (the foundation models).

Latent space is more complicated to explain. It is, in a sense, a purely computational space that relies on models that can encode images into a compressed representation (using a 'Variational Autoencoder', VAE), add noise to them, and learn how to remove that noise and decode the result back into images, thereby giving the model the ability to generate new images using image diffusion. It therefore also deeply depends on both the prompt and the dataset in pixel space – to generate images, and to build or train models for image generation.
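The noising and de-noising described above can be illustrated with a toy sketch. It is not Stable Diffusion's actual code: the four-number `latent` is a hypothetical stand-in for a VAE-encoded image, the function names are invented for illustration, and the linear noise schedule (from 0.0001 to 0.02 over 1000 steps) is an assumption borrowed from common diffusion setups. The sketch only shows that an encoded image blended with noise can be recovered once the noise is known; a trained diffusion model has to learn to predict that noise instead.

```python
import math
import random

def alpha_bar(t, steps, beta_start=1e-4, beta_end=0.02):
    """Share of the original signal left after t noising steps (assumed linear schedule)."""
    product = 1.0
    for i in range(t):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)
        product *= 1.0 - beta
    return product

def add_noise(latent, t, steps, rng):
    """Forward diffusion: blend a latent vector with Gaussian noise."""
    keep = alpha_bar(t, steps)
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = [math.sqrt(keep) * x + math.sqrt(1.0 - keep) * n
             for x, n in zip(latent, noise)]
    return noisy, noise

def remove_noise(noisy, noise, t, steps):
    """Invert the blend, given the noise (a trained model would have to predict it)."""
    keep = alpha_bar(t, steps)
    return [(y - math.sqrt(1.0 - keep) * n) / math.sqrt(keep)
            for y, n in zip(noisy, noise)]

rng = random.Random(0)
latent = [0.5, -1.2, 0.3, 0.9]  # hypothetical stand-in for a VAE-encoded image
noisy, noise = add_noise(latent, t=500, steps=1000, rng=rng)
restored = remove_noise(noisy, noise, t=500, steps=1000)
```

Here `restored` matches `latent` up to floating-point error. In actual image generation the noise is unknown, and the model's estimate of it is steered by the prompt.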

Apart from pixel space and latent space, there is also a territory of objects that can be seen, but that you, as a user, typically do not see. For instance, in Stable Diffusion you find LAION, a non-profit organisation that uses the software Clip to scrape the internet for textually annotated images to generate a free and open-source data set for training models in latent space. You would also find communities who contribute to LAION, or who refine the models of latent space using so-called LoRAs, but also models and datasets to, for instance, reconstruct missing or malformed facial or other bodily details (such as too many fingers on one hand) – often with specialised knowledge of both the properties of the foundational diffusion models and the visual culture they feed into (for instance manga or gaming). These communities are also organised on different platforms, such as CivitAI or Hugging Face, where they can exhibit their specialised image creations or share their LoRAs, often with the involvement of different tokens or virtual currencies. What is important to realise is that behind every dataset, model, software, and platform there is also a specific community.

A map of this territory would therefore necessarily contain technical objects (such as models, software, and platforms) that are deeply intertwined with communities that care for, negotiate, and maintain the means of their own existence (what the anthropologist Chris Kelty has labelled a 'recursive public', https://www.dukeupress.edu/two-bits), but which may at the same time be subject to value extraction. For instance, Hugging Face, a platform for sharing tools, models, and datasets that focuses on democratizing AI (a sort of collaborative hub for an AI community), is also registered as a private company that teams up with, for instance, Meta to boost European startups in an "AI Accelerator Program" (https://finance.yahoo.com/news/meta-collaboration-launches-ai-accelerator-151500146.html). The company also collaborates with Amazon Web Services (https://huggingface.co/amazon) to allow users to deploy the trained and publicly available models in Hugging Face through Amazon SageMaker (https://aws.amazon.com/sagemaker/). Nasdaq Private Market (https://www.nasdaqprivatemarket.com/company/hugging-face/) lists a whole range of investors in Hugging Face (Amazon, Google, Intel, IBM, NVIDIA, etc.), and its estimated market value was $4.5 billion in 2023 (https://sacra.com/c/hugging-face/), which of course also reflects the company's considerable expertise in managing models, hosting them, and making them available.

At every level, there is also a dependency on a material infrastructure of computers with heavy processing capability to generate images as well as to develop and refine the diffusion models. This relies on energy consumption, and not least on GPUs. In Stable Diffusion, people who generate images or develop LoRAs can have their own GPU (built into their computer or specifically acquired), but they can also benefit from a distributed network that allows them to access other people’s GPUs, the so-called Stable Horde. That is, there is a different plane of material infrastructure underneath.

Mapping other planes of AI

What is particular about the map of this catalogue of objects of interest and necessity is that it purely maps autonomous and decentralised generative AI. Both Hugging Face's dependency on venture capital and Stable Diffusion's dependency on hardware and infrastructure point to the fact that there are several planes that are not captured in the map of autonomous and decentralised AI image generation, but which are equally important, and which reflect a number of dependencies that can be explored and mapped. One might also add a plane of governance and regulation: for instance, the EU AI Act and laws on copyright infringement, which Stable Diffusion (like any other AI ecology) will also depend on. The anthropologist and AI researcher Gertraud Koch has drawn a map (or diagram) of all the planes that she identifies in generative AI.

[Gertraud's map]

There are, as such, many other maps that each in their own way build conceptual models of generative AI. In this sense, maps and cartographies do not just shape the reception and interpretation of generative AI, but can also be regarded as objects of interest in themselves and intrinsic parts of AI’s existence: generative AI depends on cartography to build, shape, navigate, and also negotiate and criticise its being in the world. The collection of cartographies and maps is therefore also what makes AI a reality.

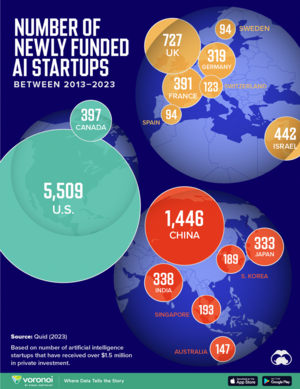

There are, for instance, maps of the corporate plane of AI, such as entrepreneur, investor and podcast host Matt Turck’s “ultimate annual market map of the data/AI industry” (https://firstmark.com/team/matt-turck/). Since 2012 Matt Turck has documented the ecosystem of AI, not just to identify key corporate actors, but also to trace trends in business. One can see how the division of companies dealing with infrastructure, data analytics, applications, data sources, and open source becomes more fine-grained over the years, forking out into, for instance, applications in health, finance and agriculture; or how privacy and security become an increasing concern in the business of infrastructures.

Maps and hierarchies

"counter maps"

Conceptual mapping / maps of maps

Atlas of AI

Joler and Pasquinelli

Other maps…. And a map of the process of producing a pony image