Interfaces

Revision as of 15:14, 10 July 2025

What is an interface to autonomous AI?

A computational model is not visible as such. Therefore, anyone who wants to work with Stable Diffusion first encounters an interface that renders the flow of data tangible in one form or another. Interfaces to generative AI come in many forms. There is Hugging Face, which offers an interface to the many models of generative AI, usually via a command-line interface. There are interfaces between different types of software, for instance an API (Application Programming Interface), through which one can integrate a model into other software. On a material plane, there are also interfaces to the racks of servers that run the models, or between them (e.g., between GPUs).

However, what is of particular interest here, in navigating the objects of interest and necessity, is the user interface to autonomous AI image generation: the ways in which a user (or developer) accesses the 'latent space' of computational models (see Maps).

How does one access and experiment with Stable Diffusion and autonomous AI?

What is the network that sustains the interface to AI image creation?

Most people with experience of AI image creation will have used 'flagship' generators, which are often integrated into other services: for instance Microsoft Bing, OpenAI's DALL-E, Adobe Firefly or Google Gemini. Microsoft Bing, for instance, is not merely a search engine. It also integrates the other services offered by Microsoft, including Microsoft Image Creator. As its settings express, the Image Creator is made for users to be "surprised", to be "inspired", or to "explore ideas". There is, in other words, an expected affective smoothness in the interface: a simplicity and a low entry threshold for the user that perhaps also explains the widespread preference for the commercial platforms. What is noticeable in this affective smoothness (besides the integration into the platform universes of Microsoft, Google, and nowadays also OpenAI) is that users are offered very few parameters to configure; basically just a prompt. Interfaces to autonomous AI differ significantly from this in several ways.

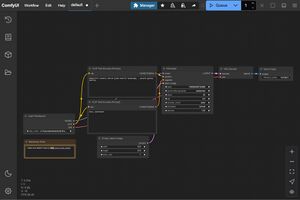

First of all, many of them do not offer a web-based interface. The interfaces for generating images with Stable Diffusion therefore also vary, and there are many options depending on the configuration of one's own computer. ComfyUI, for instance, is designed specifically for Stable Diffusion and employs a node-based workflow (as may also be encountered in other software, such as Max/MSP). This makes it particularly suitable for reproducing 'pipelines' of models (see also Hugging Face). It works for both Windows and Ubuntu (Linux) users. Draw Things is suitable for macOS users. ArtBot is another graphical user interface. It has a web interface as well as integration with Stable Horde, allowing users to generate images on a peer-based infrastructure of GPUs (as an alternative to the commercial platforms' cloud infrastructure).

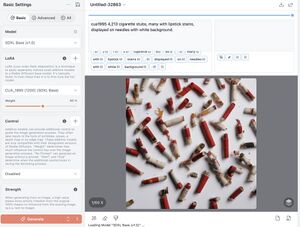
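The pipeline idea behind a node-based interface can be sketched in a few lines. This sketch assumes that ComfyUI can export a workflow as JSON ('API format') with three conventions:

- each node has a `class_type` and `inputs`;
- an input wired to another node is written as `[source_node_id, output_index]`;
- any other input value is a setting.

The node types below are modelled on ComfyUI's default text-to-image workflow, with inputs trimmed to a few essentials. The checkpoint file name is hypothetical, and `execution_order` is an illustrative helper, not ComfyUI's own executor. The sketch only shows how wiring nodes together fixes the order of the steps: load the model, encode the prompts, sample, decode, save.

```python
from graphlib import TopologicalSorter

# A trimmed workflow modelled on ComfyUI's API-format JSON (an assumption; real
# exports carry more inputs per node). An input written as [node_id, output_index]
# is a wire from another node's output; anything else is a plain setting.
workflow = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "base_model.safetensors"}},  # hypothetical file
    "6": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a lighthouse at dusk", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry, watermark", "clip": ["4", 1]}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "3": {"class_type": "KSampler",
          "inputs": {"model": ["4", 0], "positive": ["6", 0],
                     "negative": ["7", 0], "latent_image": ["5", 0],
                     "seed": 42, "steps": 20, "cfg": 7.0}},
    "8": {"class_type": "VAEDecode",
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "9": {"class_type": "SaveImage", "inputs": {"images": ["8", 0]}},
}


def is_link(value):
    """True if an input is a wire: [source node id, output index]."""
    return (isinstance(value, list) and len(value) == 2
            and isinstance(value[0], str) and isinstance(value[1], int))


def execution_order(graph):
    """Hypothetical helper: order node ids so each node runs after its inputs."""
    dependencies = {
        node_id: {v[0] for v in node["inputs"].values() if is_link(v)}
        for node_id, node in graph.items()
    }
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(workflow)
print(" -> ".join(workflow[n]["class_type"] for n in order))
```

Each node only declares what it consumes. Swapping in another model or inserting an extra step therefore means rewiring a link rather than rewriting the whole pipeline.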

Secondly, autonomous AI image creation offers a great variety of settings and configurations. To generate an image, there is naturally a prompt. There is also the option of adding a negative prompt (instructions on what not to include in the generated image). One can also combine models, e.g., use a Stable Diffusion 'base model' and add LoRAs (Low-Rank Adaptations, small add-on models) from CivitAI or Hugging Face. Further settings include:

- how much weight the added models should have in generating the image;
- the size of the image;
- a 'seed' that allows for variations (of style, for instance) while maintaining some consistency in the image.
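What the 'weight' of an added LoRA does can be made concrete. A LoRA is not a full copy of a model. It stores two small matrices whose product is a low-rank correction to a weight matrix in the base model. The user's weight setting scales that correction before it is added: W' = W + s·(B·A).

The sketch below assumes this standard formulation and uses a toy 2×2 matrix and a hypothetical `apply_lora` helper. Real tools apply the same idea across many layers of the model. Implementations also differ in details, such as an extra alpha/rank scaling factor.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def apply_lora(base, down, up, weight):
    """Hypothetical helper: return base + weight * (up @ down).

    base:   d_out x d_in weight matrix from the base model
    up:     d_out x r matrix; down: r x d_in matrix (r is the small LoRA rank)
    weight: the user-facing strength, e.g. 0.0 (off) to 1.0 (full)
    """
    delta = matmul(up, down)  # the low-rank correction stored by the LoRA
    return [[base[i][j] + weight * delta[i][j] for j in range(len(base[0]))]
            for i in range(len(base))]


base = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weight matrix
up = [[1.0], [2.0]]              # 2x1
down = [[0.5, 0.5]]              # 1x2, so the correction has rank 1

for weight in (0.0, 0.5, 1.0):
    print(weight, apply_lora(base, down, up, weight))
```

At weight 0 the base model is untouched, and raising the weight pushes it progressively further towards the LoRA's style. This is why the same LoRA can be dosed rather than simply switched on or off.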

Thirdly, like the commercial platforms, interfaces to autonomous AI also offer integration into other services, but with far fewer restrictions. Stability AI, for instance, offers an API (Application Programming Interface). This is an interface addressed through code rather than through a graphical user interface, and it allows developers to integrate image generation capabilities into their own applications. Likewise, Hugging Face (a key hub for AI developers and innovation) provides an array of models released under different licences (some more open, some more restrictive regarding, say, commercial use). These models can be integrated into new tools and services.

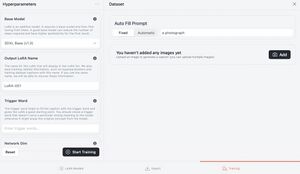

Fourthly, many of the interfaces are not just for generating images with the available models and settings. Visual cultural preferences for, say, a particular style of manga also lead to further complexity in the user interface. Interfaces like Draw Things and ComfyUI are simultaneously interfaces for training one's own models (i.e., building LoRAs). Users can possibly also make these models available to others (e.g., on CivitAI), so that others who share an affinity for this particular visual style can use them.
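Building a LoRA starts with a dataset of images. A common practice in community training tools pairs each training image with a text caption file of the same name. Every caption begins with a rare 'trigger word', and the finished LoRA responds to that word in prompts. This convention is assumed here rather than taken from any specific interface.

The sketch below writes such captions with a hypothetical `write_captions` helper and a made-up trigger word, `mystyle_v1`.

```python
import tempfile
from pathlib import Path


def write_captions(image_dir, trigger_word, descriptions):
    """Hypothetical helper: write one .txt caption next to each image.

    descriptions maps an image file name to a short description. Every caption
    starts with the same trigger word, so training can tie that word to what
    the images have in common (e.g. a particular manga style).
    """
    written = []
    for image_name, description in descriptions.items():
        caption = Path(image_dir) / (Path(image_name).stem + ".txt")
        caption.write_text(f"{trigger_word}, {description}", encoding="utf-8")
        written.append(caption)
    return written


def build_prompt(trigger_word, subject):
    """When generating with the trained LoRA, the trigger word goes in the prompt."""
    return f"{trigger_word}, {subject}"


with tempfile.TemporaryDirectory() as dataset:
    for path in write_captions(dataset, "mystyle_v1", {
        "001.png": "a girl reading under a tree",
        "002.png": "a city street at night",
    }):
        print(path.name, "->", path.read_text(encoding="utf-8"))

print(build_prompt("mystyle_v1", "a cat on a windowsill"))
```

The choice of trigger word and the wording of the captions during training shape how one later has to prompt to get the style back.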

In short, interfaces to autonomous AI are open-ended in multiple ways. They typically sit not only between the user ('pixel space') and the model ('latent space'). They also sit between models and a space for developers that ordinary users typically do not see. This allows users to distribute the necessary material means and processing power, to combine models, or even to build their own.

[IMAGE MAP - INTERFACE IN TWO INTERSECTIONS OR SPACES IN THE MAP]

How do interfaces to autonomous AI create value?

Using a commercial platform, one quickly experiences a need for 'currencies'. In Microsoft Image Creator, for instance, there are 'credits' that give users a fast lane to the GPU, speeding up an otherwise slow process of generating an image. These credits are called Microsoft Rewards points. They are basically earned either by waiting (as a punishment) or by being a good customer who regularly uses Microsoft's other products. One earns points, for instance, by searching with Bing, using the Windows search box, buying Microsoft products, and playing games on Microsoft Xbox. Use is, in other words, intrinsically related to a plane of business and value creation that capitalises on the use of energy and GPUs on a material plane (see Maps).

Like the commercial platform interfaces, the interfaces for Stable Diffusion also rely on a 'business plane' that organises access to a material infrastructure, but they do so in very different ways. For instance, Draw Things allows users to generate images on their own GPU (on a material plane), without the need for currencies. With ArtBot, it is possible to connect to Stable Horde and access the processing power of a distributed network. Here, users are also offered a fast lane. It is granted not for their loyalty as 'customers', however, but for their loyalty to the peer network. Allowing other users to access one's GPU is rewarded with 'Kudos', which can then be used to skip the queue when accessing other GPUs. A free ride is in this sense only available if the network makes it possible.
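The Kudos mechanism amounts to a priority queue in which contributing to the network buys a better place in line. The sketch below is a deliberately simplified, hypothetical model of that idea. It is not the actual Stable Horde scheduling code. It rests on three assumptions:

- requests are served in order of the requester's Kudos at the time of asking;
- ties are served first come, first served;
- a worker earns a fixed, made-up amount of Kudos per image generated for others.

Spending Kudos is left out for brevity.

```python
import heapq
import itertools


class ToyHorde:
    """Hypothetical model of a peer network where Kudos buys queue priority."""

    KUDOS_PER_IMAGE = 10  # made-up reward for generating one image for someone else

    def __init__(self):
        self.kudos = {}                    # user name -> Kudos balance
        self.queue = []                    # heap of (-kudos, arrival, user, prompt)
        self.arrival = itertools.count()   # tie-breaker: first come, first served

    def contribute(self, worker, images_generated):
        """Lending one's GPU to others is rewarded with Kudos."""
        earned = images_generated * self.KUDOS_PER_IMAGE
        self.kudos[worker] = self.kudos.get(worker, 0) + earned

    def request(self, user, prompt):
        """Queue a request; priority is fixed by the user's Kudos at this moment."""
        priority = -self.kudos.get(user, 0)  # heapq pops the smallest value first
        heapq.heappush(self.queue, (priority, next(self.arrival), user, prompt))

    def next_job(self):
        """Hand the highest-priority request to the next free GPU."""
        _, _, user, prompt = heapq.heappop(self.queue)
        return user, prompt


horde = ToyHorde()
horde.request("newcomer", "a lighthouse at dusk")
horde.contribute("peer", images_generated=5)
horde.request("peer", "a forest in fog")
print(horde.next_job())  # the contributing peer is served first
print(horde.next_job())
```

The design makes the text's point visible: priority is earned by giving processing power to the network rather than by being a customer of a platform.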

What is their role in techno-cultural strategies?

The commercial platform interfaces for AI image creation are sometimes criticised for their biases or copyright infringements, but many people see them as useful tools. Creatives, for instance, can use them to test out ideas and get inspired. They are also frequently used in teaching and communication. This could be for illustration, as an alternative to, say, an image found on the internet whose use might otherwise violate copyright. They are increasingly also used to display complex ideas in an illustrative way. Often, the model will fail or reveal its cultural biases in this attempt. At times (perhaps even as a new cultural genre), presentations also include the failed attempts, to ridicule the AI model and laugh at how it handles the complexity of illustrating an idea.

[EXAMPLES / IMAGES FROM ACADEMICS - MY OWN + MAI'S]

Through their many settings, interfaces to autonomous AI accommodate a much more fine-grained visual culture. As previously mentioned, this can be found on sites such as CivitAI or Danbooru. Here one finds a visual culture that is invested not only in, say, manga, but often also in LoRAs. On CivitAI, for example, there are images created with LoRAs to generate specific stylistic outputs, but also requests to use specific LoRAs to generate images.

The complex use of interfaces testifies to how highly skilled the practitioners within the interface culture of autonomous AI image creation are:

- when generating images, one has to understand how to make visual variations using 'seed' values, or how to use Kudos (currencies) on Stable Horde to speed up the process;
- when building and annotating datasets for LoRAs and creating 'trigger words', one has to understand how this ultimately relates to how one prompts when generating images with the LoRA;
- when setting 'learning rates' (in training LoRAs), one has to understand the implications for the use of processing power and energy;
- and much more.

In other words, operating the interface demands not only a thorough knowledge of visual culture, but also deep insight into how models work. It also demands insight into the complex interdependencies of different planes of use, computation, social organisation, value creation, and material infrastructure.
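Why a seed gives both control and variation can be shown with a small stand-in. In Stable Diffusion, generation starts from a grid of random noise in latent space, and the seed fixes that noise. Keeping the seed, together with the same prompt and other settings, reproduces the same starting point. Changing only the seed gives a variation.

The sketch below uses Python's own random number generator as a stand-in; real tools use their framework's generator, e.g. PyTorch's. The latent shape assumes the 4-channel latent at one eighth of the image's width and height used by Stable Diffusion 1.x and SDXL. `initial_latent` is a hypothetical helper.

```python
import random


def initial_latent(seed, width=512, height=512, channels=4):
    """Hypothetical helper: seeded Gaussian noise shaped like an SD latent.

    The latent is 1/8 of the image size in each direction, so a 512x512
    image starts from a 4 x 64 x 64 grid of noise.
    """
    rng = random.Random(seed)  # the seed fully determines the noise
    h, w = height // 8, width // 8
    return [[[rng.gauss(0.0, 1.0) for _ in range(w)] for _ in range(h)]
            for _ in range(channels)]


def shape(latent):
    """Return (channels, height, width) of a nested-list latent."""
    return len(latent), len(latent[0]), len(latent[0][0])


a = initial_latent(seed=1234)
b = initial_latent(seed=1234)
c = initial_latent(seed=1235)
print(shape(a))  # (4, 64, 64)
print(a == b)    # True: same seed, same starting noise
print(a == c)    # False: a new seed gives a new variation
```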

How do interfaces relate to autonomous infrastructures?

To conclude, interfaces to autonomous AI image generation seem to rely on a need for parameters and configurations that accommodate particular and highly specialised visual expressions. They also give rise to a highly specialised interface culture with deep insight into not only visual culture but also the technology. Such skills are rarely afforded by the smooth commercial platforms that flood visual culture with an abundance of AI-generated images. Interfaces to autonomous AI sometimes also build in a decentralisation of processing power (GPU). They do so either by letting users process the images on their own computers, or by giving access to a peer network of GPUs. Despite this decentralisation, interfaces to autonomous AI are not detached from commercial interests and centralised infrastructures. The integration of, and dependency on, a platform like Hugging Face is a good example of this.

++++++++++++++++++++++++

[CARD TEXT]