Hugging Face: Difference between revisions


[[File:Huggingface-2017.jpg|alt=Screenshot of huggingface.co, 2017.|none|thumb|Screenshot of huggingface.co, 2017. At the time, the company was entirely focused on building a new chatbot app for teenagers.]]

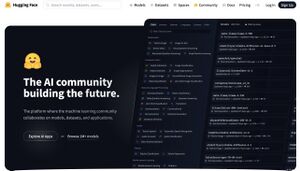

[[File:Huggingface-2025.jpg|none|thumb|Screenshot of huggingface.co, 2025. Today the company offers access to millions of models and datasets for its users to experiment and develop with.]]


Revision as of 10:41, 9 July 2025

What is Hugging Face?

Hugging Face is, simply put, a collaborative hub for AI development – not specifically targeted at AI image creation, but at generative AI more broadly (including speech synthesis, text-to-video, image-to-video, image-to-3D, and much more). It attracts amateur developers who use the platform to experiment with AI models, as well as professionals who draw on the company's expertise or use the platform as a starting point for entrepreneurship. By making AI models and datasets available, it can also be seen as an attempt to democratise AI and delink from the key commercial platforms, yet at the same time Hugging Face is deeply intertwined with these companies and various commercial interests.

What is the network that sustains Hugging Face?

Hugging Face is a platform, but what it offers more closely resembles an infrastructure, in particular for training models. As such, Hugging Face is an object that operates in a space that is not typically seen by ordinary users. It is a space for developers (amateurs or professionals) to use and interact with the computational models of a latent space (see Maps).

Companies involved in training foundation models would typically have their own infrastructures, but they may make their models available on Hugging Face. This includes Stability AI and the Chinese DeepSeek, among others. Users may, for instance, upload their datasets to experiment with the many models in Hugging Face. Typically, the uploaded datasets are freely available on the platform. But users also experiment in other ways. They 'post-train' the models and create LoRAs, for instance. Others create 'pipelines' of models, meaning that the output of one model can become the input for another model. At the time of writing, there are nearly 500,000 datasets and 2,000,000 models freely available.
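The idea of a 'pipeline' of models can be illustrated with a minimal sketch. It assumes nothing about Hugging Face's own tooling: the two "models" below are hypothetical stand-in functions invented for illustration, and the only point is that the output of one stage is passed on as the input of the next.

```python
from typing import Callable, List


def caption_model(image_description: str) -> str:
    """Hypothetical stand-in for an image-to-text model."""
    return f"a drawing of {image_description}"


def shout_model(text: str) -> str:
    """Hypothetical stand-in for a text-to-text model (here: upper-casing)."""
    return text.upper()


def run_pipeline(stages: List[Callable[[str], str]], first_input: str) -> str:
    """Feed the output of each stage into the next one, in order."""
    result = first_input
    for stage in stages:
        result = stage(result)
    return result


# The caption produced by the first model becomes the input of the second.
print(run_pipeline([caption_model, shout_model], "a cat"))
```

In an actual setup, each stage would be a model downloaded from the platform rather than a toy function, but the chaining logic would look much the same.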

How has Hugging Face evolved through time?

Hugging Face was founded in 2016 by the French entrepreneurs Clément Delangue, Julien Chaumond, and Thomas Wolf. Already in 2017 they received their first round of investment of $1.2 million. As stated in the press release, Hugging Face was a [https://techcrunch.com/2017/03/09/hugging-face-wants-to-become-your-artificial-bff/ "new chatbot app for bored teenagers. The New York-based startup is creating a fun and emotional bot. Hugging Face will generate a digital friend so you can text back and forth and trade selfies."] In 2021 the company received a $40 million investment to develop its "[https://techcrunch.com/2021/03/11/hugging-face-raises-40-million-for-its-natural-language-processing-library/ open source library for natural language processing (NLP) technologies.]" At the time, there were "10,000 forks" (i.e., branches of development projects) and "around 5,000 companies [...] using Hugging Face in one way or another, including Microsoft with its search engine Bing."

This trajectory shows how the company has gradually moved from providing a service (a chatbot) to becoming a major (if not the) platform for AI development – now not only in language technologies, but also (as mentioned) in speech synthesis, text-to-video, image-to-video, image-to-3D and much more within generative AI. But it also shows an evolution of generative AI. Like today's systems, early ChatGPT (developed by OpenAI and released in 2022) was built on large language models (LLMs), but it offered very few parameters for experimentation: the prompt (the textual input) and the temperature (the randomness and creativity of the model's output). Today, there are all kinds of components and parameters, which also explains the present-day richness of Hugging Face's interface. Many of the commercial platforms do not offer this richness, and an intrinsic part of the delinking from them seems to be attached to a fascination with settings and advanced configurations.
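What a temperature setting does can be sketched in a few lines. The sketch assumes the common approach of dividing a model's raw scores by the temperature before turning them into probabilities (a softmax); the scores, token names, and function names are invented for illustration and are not taken from ChatGPT or from any Hugging Face library.

```python
import math
import random
from typing import Dict


def temperature_softmax(scores: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {token: score / temperature for token, score in scores.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def sample_token(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token at random according to the temperature-adjusted probabilities."""
    probs = temperature_softmax(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Made-up scores for three candidate next words.
scores = {"cat": 2.0, "dog": 1.0, "axolotl": 0.1}
for temperature in (0.2, 1.0, 2.0):
    probs = temperature_softmax(scores, temperature)
    print(temperature, {token: round(p, 3) for token, p in probs.items()})
```

With a low temperature nearly all probability goes to the highest-scoring word, which makes the output predictable; with a high temperature the unlikely words gain ground, which is what is experienced as randomness or 'creativity'.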

How does Hugging Face affect the creation of value?

Hugging Face had an estimated market value of $4.5 billion in 2023. What does this exorbitant valuation of a platform that is little known to the public reflect?

On the one hand, the company has capitalised on the various communities of developers in, for instance, visual culture who experiment on the platform and share their datasets and LoRAs, but this is only a partial explanation.

Hugging Face is not only for amateur developers. On the platform one also finds an 'Enterprise Hub' where Hugging Face offers, for instance, advanced computing at a larger scale with a more dedicated hardware setup ('ZeroGPU', see also GPU), as well as 'Priority Support'. In these commercial cases, access is typically more restricted. In this sense, the platform has become innately linked to a plane of business innovation and has, for instance, also teamed up with Meta to boost European startups in an "AI Accelerator Program".

Notably, Hugging Face also collaborates with other key corporations in the business landscape of AI. One example is Amazon Web Services, a collaboration that allows users to make the trained models in Hugging Face available through Amazon SageMaker. Nasdaq Private Market also lists a whole range of investors in Hugging Face (Amazon, Google, Intel, IBM, NVIDIA, etc.).

The excessive (and growing) market value of Hugging Face reflects, in essence, the high degree of expertise that has accumulated within a company that has consistently sought to accommodate a cultural community, but also a business and enterprise plane of AI. Managing an infrastructure of both hardware and software for AI models at this large scale is a highly sought-after expertise.

What is the role of Hugging Face in techno-cultural strategies?

Regardless of the Enterprise Hub, Hugging Face also remains a hub for amateur developers who experiment with generative AI beyond what the commercial platforms conventionally offer. An example is the user 'mgane', who has shared a dataset of "76 cartoon art-style video game character spritesheets." The images come from "open-source 2D video game asset sites from various artists." mgane has used them on Hugging Face to build LoRAs on Stable Diffusion, that is, "for some experimental tests on Stable Diffusion XL via LORA and Dreambooth training methods for some solid results post-training."

A user like mgane is arguably both embedded in a specific 2D gaming culture and in possession of the developer skills necessary to access and experiment with models in the command line interface. However, users can also access the many models in Hugging Face through more graphical user interfaces like Draw Things, which allows for accessing and combining models and LoRAs to generate images, and also for training one's own LoRAs.

How does Hugging Face relate to autonomous infrastructures?

Looking at Hugging Face, delinking from commercial interests in autonomous generative AI does not seem to be an either-or. Rather, dependencies on communities and attempts to decentralise generative AI seem to be in constant movement, gravitating away from 'peer-to-peer' communities, towards platformisation and 'client-server relations'. This may be due to the high level of expertise and technical requirements involved in generative AI, but it is not without consequence.

When, as noted by the [https://www.europeanbusinessreview.com/hugging-face-why-do-most-tech-companies-in-ai-collaborate-with-hugging-face/ European Business Review], most tech companies in AI want to collaborate with Hugging Face, it is because the company offers an infrastructure for AI. Or, rather, it offers a platform that performs as an infrastructure for AI – a "linchpin" that keeps everything in production in position. As also noted by [https://direct.mit.edu/books/edited-volume/5044/chapter-abstract/2983150/Platforms-Are-Infrastructures-on-Fire?redirectedFrom=fulltext Paul Edwards], a platform seems to be, in a more general view, the new mode of handling infrastructures in the age of data and computation. Working with AI models is a demanding task that requires expertise, hardware and organisation of labour, and what Hugging Face offers is speed, reliability, and not least agility in a world of AI that is in constant flux, and where new models and techniques are introduced on an almost monthly basis.

With their 'linchpin status' Hugging Face builds on existing infrastructures: they depend on already existing infrastructures, such as the flow of energy or water, necessary to make the platform run, and they also build on social and organisational infrastructures, such as those of both start-ups and cultural communities. At the same time, however, they also reconfigure these relations – creating cultural, social and commercial dependencies on Hugging Face as a new 'platformed' infrastructure for AI.

++++++++++++++++++++++++++

CARD TEXT

Hugging Face

Hugging Face started out in 2016 as a chatbot for teenagers, but is now a (if not the) collaborative hub for AI development – not specifically targeted at AI image creation, but at generative AI more broadly (including speech synthesis, text-to-video, image-to-video, image-to-3D, and much more). It attracts amateur developers who use the platform to experiment with AI models, as well as professionals who typically use the platform as a starting point for entrepreneurship. By making AI models and datasets available, it can also be seen as an attempt to democratise AI and delink from the key commercial platforms, yet at the same time Hugging Face is deeply intertwined with these companies and various commercial interests.

Hugging Face has (as of 2023) an estimated market value of $4.5 billion. It has received large amounts of venture capital from Amazon, Google, Intel, IBM, NVIDIA and other key corporations in AI and has, because of the company's expertise in handling AI models at large scale, collaborations with both Meta and Amazon Web Services. Yet, at the same time, it also remains a platform for amateur developers and entrepreneurs who use the platform as an infrastructure for experimentation with advanced configurations that the conventional platforms do not offer.

Hugging Face is a key example of how generative AI – also when seeking autonomy – depends on a technical infrastructure. In a constantly evolving field, reliability, security, scalability and adaptability become important parameters, and Hugging Face offers these in the form of a platform.

IMAGES: Business + interface/draw things + mgane + historical/contemporary interfaces.

++++++++++++++++++++++++

NOTES

A platform > extraction

In this sense, it can also be compared to platforms such as GitHub, and what it really offers is an infrastructure, and not least an expertise, in handling all of this at a very large scale, involving nearly 2 million models,

Complex pipelines .. . you have access meaning you can have one model - output for another -

Foundation models training - customised set up / aim for smth that cannot be replicated

… value of HF . Pipeline of models / experimentation with

For instance, one might find a dataset for creating 2-D, cartoon-line video game characters.

Access to, and not least integration of models is a complicated issue that demands high technical expertise. More than anything, what Hugging Face offers is therefore an infrastructure.

I had used this same image set for some experimental tests on Stable Diffusion XL via LORA and Dreambooth training methods for some solid results post-training

Upload datasets - train models

.... training models at large scale

+ customisation for clients / manually racks of machines

Upload a dataset / access for others to download

But also to access models on HF

https://huggingface.co/datasets/mgane/2D_Video_Game_Cartoon_Character_Sprite-Sheets

"mgane/2D_Video_Game_Cartoon_Character_Sprite-Sheets" - a "76 cartoon art-style video game character spritesheets"

"All images editted using Tiled image editting software as most assets are typically downloaded individually and not in sequence. I compiled each animation sequence into one img to display animations frame-by-frame evenly distributed across some common animations seen in 2D video game art (Idle, Attack, Walk, Running, etc). I had used this same image set for some experimental tests on Stable Diffusion XL via LORA and Dreambooth training methods for some solid results post-training."

https://huggingface.co/datasets/mgane/2D_Video_Game_Cartoon_Character_Sprite-Sheets

but it is also registered as a private company teaming up with, for instance, Meta to boost European startups in an "AI Accelerator Program". The company also collaborates with Amazon Web Services to allow users to deploy the trained and publicly available models in Hugging Face through Amazon SageMaker. Nasdaq Private Market lists a whole range of investors in Hugging Face (Amazon, Google, Intel, IBM, NVIDIA, etc.), and its estimated market value was $4.5 billion in 2023, which of course also reflects the high expertise the company has in managing models, hosting them, and making them available.

What is the network that sustains this object?

- How does it move from person to person, person to software, to platform, what things are attached to it (visual culture)

- Networks of attachments

- How does it relate / sustain a collective? (human + non-human)

How does it evolve through time?

Evolution of the interface for these objects. Early chatgpt offered two parameters through the API: prompt and temperature. Today extremely complex object with all kinds of components and parameters. Visually what is the difference? Richness of the interface in decentralization (the more options, the better...)