Latent space

From CTPwiki

| Line 7: | Line 7: | ||

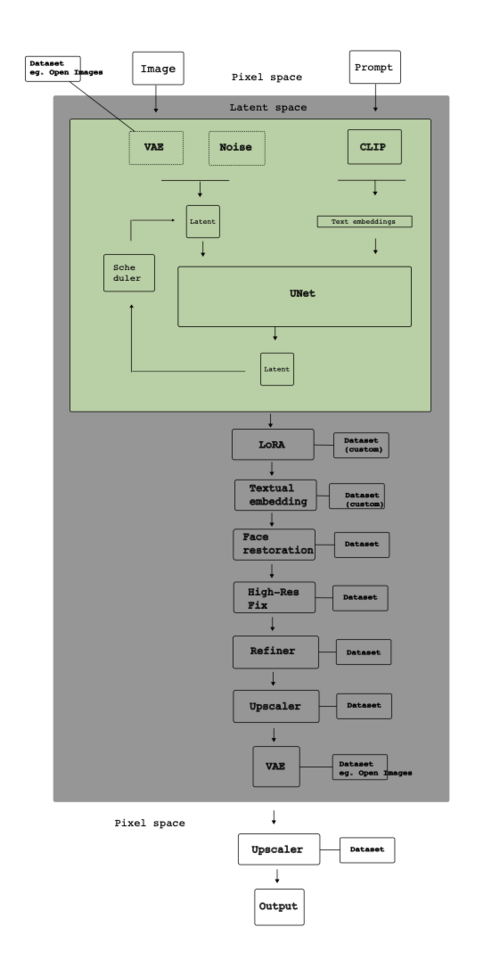

In Stable Diffusion, it was the encoding and decoding of images in so-called ‘latent space’, i.e., a simplified mathematical space where images can be reduced in size (or rather represented through smaller amounts of data) to facilitate multiple operations at speed, that drove the model’s success.<ref>https://mediatheoryjournal.org/2024/09/30/joanna-zylinska-diffused-seeing/</ref> | In Stable Diffusion, it was the encoding and decoding of images in so-called ‘latent space’, i.e., a simplified mathematical space where images can be reduced in size (or rather represented through smaller amounts of data) to facilitate multiple operations at speed, that drove the model’s success.<ref>https://mediatheoryjournal.org/2024/09/30/joanna-zylinska-diffused-seeing/</ref> | ||

Once trained, CLIP can compute representations of images and text, called embeddings, and then record how similar they are. The model can thus be used for a range of tasks such as image classification or retrieving similar images or text. | Once trained, CLIP can compute representations of images and text, called embeddings, and then record how similar they are. The model can thus be used for a range of tasks such as image classification or retrieving similar images or text. <ref>https://the-decoder.com/new-clip-model-aims-to-make-stable-diffusion-even-better/</ref> | ||

From "Maps": _Secondly, there is a 'latent space'. Image latency refers to the space in between the capture of images in datasets and the generation of new images. It is a algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to how to de-code them back into images (aka 'image diffusion')._ | From "Maps": _Secondly, there is a 'latent space'. Image latency refers to the space in between the capture of images in datasets and the generation of new images. It is a algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to how to de-code them back into images (aka 'image diffusion')._ | ||

Latest revision as of 12:28, 16 July 2025

Reference to Diffusion

What is the network that sustains this object?

- How does it move from person to person, from person to software, to platform? What things are attached to it (visual culture)?

- Networks of attachments

- How does it relate / sustain a collective? (human + non-human)

In Stable Diffusion, it was the encoding and decoding of images in so-called ‘latent space’, i.e., a simplified mathematical space where images can be reduced in size (or rather represented through smaller amounts of data) to facilitate multiple operations at speed, that drove the model’s success.[1]
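
As a rough illustration of what "represented through smaller amounts of data" can mean, the sketch below compares the number of values in a pixel image with the number in its latent representation. The figures used (a 512×512 RGB image, an 8× spatial downsampling factor and 4 latent channels) are those commonly cited for Stable Diffusion v1 and are assumptions here, not taken from the quoted text. The function names are hypothetical.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return (channels, height, width) of the latent for a given image size.

    The default downsample factor and channel count are assumptions
    (values commonly cited for Stable Diffusion v1).
    """
    return (latent_channels, height // downsample, width // downsample)


def count_values(shape):
    """Multiply the dimensions of a shape to get the number of stored values."""
    total = 1
    for dim in shape:
        total *= dim
    return total


image_shape = (3, 512, 512)       # RGB image: 3 channels, 512 x 512 pixels
latent = latent_shape(512, 512)   # much smaller grid with 4 channels
ratio = count_values(image_shape) / count_values(latent)

print("image values: ", count_values(image_shape))
print("latent values:", count_values(latent))
print("reduction factor:", ratio)
```

Under these assumptions, every operation of the diffusion process works on far fewer values than it would on the full-size image, which is one way to read the claim about "multiple operations at speed".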

Once trained, CLIP can compute representations of images and text, called embeddings, and then record how similar they are. The model can thus be used for a range of tasks such as image classification or retrieving similar images or text.[2]
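
A minimal sketch of how similarity between embeddings can be measured, assuming cosine similarity (the measure CLIP is usually described as using). The three-dimensional vectors and the captions are made up for illustration. Real CLIP embeddings have hundreds of dimensions and are produced by trained image and text encoders, which are not shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy, made-up embeddings (assumption: not output of any real model).
image_embedding = [0.9, 0.1, 0.3]
text_embeddings = {
    "a photo of a cat": [0.8, 0.2, 0.4],
    "a photo of a car": [0.1, 0.9, 0.2],
}

# Score every caption against the image and keep the closest one.
scores = {
    caption: cosine_similarity(image_embedding, vector)
    for caption, vector in text_embeddings.items()
}
best_caption = max(scores, key=scores.get)

print(scores)
print("closest caption:", best_caption)
```

Picking the caption with the highest score is, in miniature, the classification use mentioned above. Ranking a collection of images against one text embedding would correspond to retrieval.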

From "Maps": _Secondly, there is a 'latent space'. Image latency refers to the space in between the capture of images in datasets and the generation of new images. It is an algorithmic space of computational models where images are, for instance, encoded with 'noise', and the machine then learns how to decode them back into images (aka 'image diffusion')._
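
The "encoding with noise" half of this description can be sketched as follows. This assumes the standard forward step used in diffusion models, where a signal is blended with Gaussian noise according to a single mixing value (called `alpha_bar` here). The list of numbers stands in for an image or latent and is made up, and the function name is hypothetical. The learned decoding step, a trained neural network that predicts and removes the noise, is not implemented.

```python
import math
import random


def add_noise(values, alpha_bar, rng):
    """Blend a signal with Gaussian noise.

    alpha_bar = 1.0 keeps the signal untouched; alpha_bar = 0.0 leaves
    pure noise. Returns the noisy signal and the noise that was mixed in.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in values]
    noisy = [
        math.sqrt(alpha_bar) * v + math.sqrt(1.0 - alpha_bar) * n
        for v, n in zip(values, noise)
    ]
    return noisy, noise


rng = random.Random(0)             # fixed seed so runs are repeatable
pixels = [0.2, 0.5, 0.9, 0.4]      # made-up stand-in for an image

# Move from the clean signal towards pure noise.
for alpha_bar in (1.0, 0.5, 0.0):
    noisy, _ = add_noise(pixels, alpha_bar, rng)
    print(alpha_bar, [round(v, 2) for v in noisy])
```

During training, a model is shown such noisy versions and learns to estimate the noise that was added. Generation then runs the process in reverse, starting from noise.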

How does it evolve through time?

Evolution of size

How does it create value? Or decrease / affect value?

With its material form: the weights. Gains value with adoption.

Gains value by comparison: the ability to do what others cannot do, or do less well.

References

[1] https://mediatheoryjournal.org/2024/09/30/joanna-zylinska-diffused-seeing/

[2] https://the-decoder.com/new-clip-model-aims-to-make-stable-diffusion-even-better/